AI can copy a human's personality in just two hours of conversation

Advanced generative AI can change its 'behavior' depending on the design, and there are already several services that can pretend to be a human's

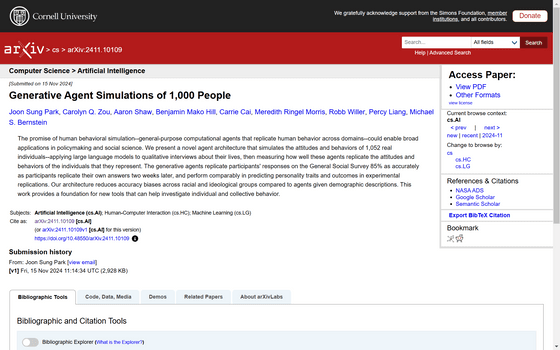

[2411.10109] Generative Agent Simulations of 1,000 People

https://arxiv.org/abs/2411.10109

AI can create a reasonable facsimile of a person's personality after two-hour interview

Scientists teach AI to recreate human personality after a two-hour interview

https://itc.ua/en/news/scientists-teach-ai-to-recreate-human-personality-after-a-two-hour-interview/

OpenAI's GPT-4o Makes AI Clones of Real People With Surprising Ease

https://singularityhub.com/2024/11/29/openais-gpt-4o-makes-ai-clones-of-real-people-with-surprising-ease/

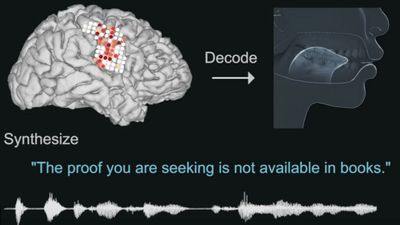

In November 2024, Stanford University computer scientist Michael Bernstein and researchers at Google DeepMind, Google's AI research team, announced that they had designed an architecture that simulates human attitudes and behaviors and had incorporated it into a conversational AI that mimics the person it interviews.

The AI works by asking humans a series of questions and listening to their answers. The questions were adapted from the American Voices Project, which was created to explore the daily lives of Americans, and were mainly of sociological interest, such as 'Tell me your life story, including your experiences from childhood to adulthood, your family and relationships, and the major events that have happened to you,' and 'How have you responded to the growing attention being given to race, racism, and police activity?'

In addition to asking pre-set questions, the AI also asked follow-up questions based on people's responses. The AI interviewed 1,052 Americans for about two hours each, analyzing the personality of each person it spoke to and creating a simulated personality for each of them.

Bernstein and his colleagues then put the same questions to both the AI's simulated personality and the original human, and the simulated personality's answers matched the human's with about 85% accuracy.
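As a rough illustration of what this kind of comparison involves, the minimal Python sketch below computes the share of questions on which a simulated personality gives the same answer as the real person. The function name `agreement_rate` and the sample answers are hypothetical and made up for illustration; this is not the researchers' actual evaluation code or data.

```python
def agreement_rate(human_answers, agent_answers):
    """Return the fraction of questions answered identically by both sides."""
    if len(human_answers) != len(agent_answers):
        raise ValueError("both answer lists must cover the same questions")
    if not human_answers:
        raise ValueError("at least one question is required")
    # Count the questions where the simulated answer equals the human answer.
    matches = sum(h == a for h, a in zip(human_answers, agent_answers))
    return matches / len(human_answers)


# Hypothetical survey answers, invented for this example only.
human = ["agree", "disagree", "agree", "neutral", "agree"]
agent = ["agree", "disagree", "neutral", "neutral", "agree"]

rate = agreement_rate(human, agent)
print(f"Agreement: {rate:.0%}")  # 4 of 5 answers match, so this prints 80%
```

A simple match rate like this treats every question equally; a real study might also account for how consistently people repeat their own answers over time.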

According to Bernstein, the goal of this research is to 'simplify sociological research.' Traditional social surveys require a huge amount of resources to survey real people, but by using AI that mimics real people, it may be possible to reduce resources and conduct surveys and simulations that would be impossible with real people.

However, there are ethical concerns that this technology could be misused to create convincing deepfakes, or that proxy services could use it to let an AI impersonate a real person.

'The biggest surprise for me was how little data you need to create a human copy,' Hassan Raza, CEO of Tavus, a service that creates clones of its customers, said of the research. 'Tavus requires a lot of emails and other information. The great thing about this study is that it shows that you might not need that much information.'

'A faithful AI replica of a human could be a powerful tool for policy-making because it would be much cheaper and quicker to survey than a group of humans,' said Richard Whittle of the University of Salford. He hopes that this research will spark a revolution in social research.

'This technology could revolutionize human-robot interaction, potentially making it possible to create robots that respond naturally to emotions and social signals. This could enhance not only productivity but also emotional connections in a future where AI is an integral part of human life,' Bernstein and his colleagues said.
