Statistical points to keep in mind so that A/B tests are not wasted

An "A/B test" is a method used in website development to judge which layout or design performs better: multiple variants of images and text are prepared, swapped with one another, and users' reactions are compared. However, some of the software that runs A/B tests lacks a statistical point of view, producing results that bring no benefit and mean nothing at all, or conversely results that are misleading and even harmful, warns Martin Goodson of Qubit.

Mostwinningabtestresultsareillusory_0.pdf

(PDF file) http://www.qubit.com/sites/default/files/pdf/mostwinningabtestresultsareillusory_0.pdf

Goodson says that A/B testing tools that ignore the basics of statistics are in wide circulation, and that in some cases running tests with them actually loses profit. From a statistical point of view, he recommends the following three points for running A/B tests correctly.

◆ 1: Sufficient sample size

For example, when investigating the question "Which are taller, men or women?", it is clearly absurd to draw a conclusion by measuring the height of one man and one woman. If you happen to pick an unusually tall woman and an unusually short man, you will reach the wrong conclusion that "women are taller than men".

To eliminate such chance effects as far as possible, "collect a sufficiently large sample" is an iron rule of statistics. Goodson says that a small sample not only wastes the time spent testing but also leads to false conclusions. For example, if a two-month A/B test is needed to detect a statistically significant difference and you run it for only two weeks to save time, the probability that the test result is wrong becomes 67%.

Goodson points to a methodology that recommends stopping a test once a difference of 500 has appeared, a test that was run with only 150 people, and a test that drew its conclusion from a difference of only 100, saying that "these tests do not work at all". He says the minimum sample size required for an A/B test is "6000".
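To get a feel for why a few hundred visitors is rarely enough, the required sample size can be estimated before a test starts. The following is a minimal sketch that uses the textbook normal-approximation formula for comparing two conversion rates; it is not taken from Goodson's paper, and the function name, the 5% baseline rate, and the 10% relative lift are hypothetical values chosen only for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided two-proportion z-test.

    Assumption: the standard normal-approximation formula, not any
    particular A/B testing tool's method.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical inputs: 5% baseline conversion rate, 10% relative lift to detect.
    print(sample_size_per_variant(0.05, 0.10))
```

Under these assumed inputs the estimate comes out in the tens of thousands of visitors per variant, and it grows quickly as the lift you want to detect gets smaller.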

According to Google's data, only 10% of small changes to a website produce a positive effect; the remaining 90% are pointless or harmful. Assume that a properly run two-month A/B test has an accuracy (statistical power) of 80%. Of 100 changes tried, 10 are truly effective, and the test detects 8 of them. In addition, at the commonly used significance threshold of p = 0.05, 5% of the 90 ineffective changes, that is 4.5, are wrongly judged effective. So out of 100 changes, 8 + 4.5 = 12.5 are judged effective, of which 4.5 are false positives.

With an inaccurate A/B test the picture is worse. If, for example, the sample is small and the accuracy is only 30%, Goodson says that most of the changes judged to be "effective improvements" by the A/B test are in fact wrong.
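The arithmetic in this example can be reproduced in a few lines. The sketch below takes the numbers stated in the article (100 changes, 10% of them truly effective, a significance threshold of 0.05) and treats "accuracy" as statistical power, which is an interpretation; the function name is hypothetical.

```python
def winner_breakdown(n_tests=100, true_effect_rate=0.10, power=0.80, alpha=0.05):
    """Expected true and false 'winners' among n_tests A/B tests.

    Assumption: 'accuracy' in the text is read as statistical power,
    i.e. the chance that a truly effective change is detected.
    """
    effective = n_tests * true_effect_rate   # changes that really work
    ineffective = n_tests - effective        # changes with no real benefit
    true_winners = effective * power         # real effects the test detects
    false_winners = ineffective * alpha      # null changes that pass by chance
    declared = true_winners + false_winners
    return true_winners, false_winners, false_winners / declared


if __name__ == "__main__":
    for power in (0.80, 0.30):
        tp, fp, share = winner_breakdown(power=power)
        print(f"power={power:.0%}: {tp:.1f} true + {fp:.1f} false winners, "
              f"{share:.0%} of winners are false")
```

With 80% power about 36% of the declared winners are false positives (4.5 of 12.5); with 30% power the true winners drop to 3 while the false ones stay at 4.5, so about 60% of the declared winners are false.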

◆ 2: Multiple tests

Goodson points out that many A/B testing tools are designed to stop a test as soon as a positive result is obtained. However, if you run an "A/A test", in which the identical page is served to both groups and the conversion rates are compared, you can see how often conclusions drawn too early are wrong. A "winner" declared in an A/A test is a difference that does not exist, so a procedure that produces such winners cannot be trusted in an A/B test either.
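The effect of stopping at the first positive result can be checked with a small simulation. The sketch below is illustrative only: it simulates A/A tests in which both arms share the same true conversion rate, checks a pooled two-proportion z-test at several interim looks, and compares how often a "significant" result appears at any look with how often it appears at the single final look. All parameter values (10% conversion rate, 2000 users per arm, 10 looks) are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions): no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_sims=500, users_per_arm=2000, looks=10,
                      rate=0.10, alpha=0.05, seed=0):
    """Return (false positive rate with peeking, false positive rate at the final look)."""
    rng = random.Random(seed)
    step = users_per_arm // looks
    hits_any_look = 0
    hits_final_look = 0
    for _ in range(n_sims):
        conv_a = conv_b = 0
        hit = False
        p = 1.0
        for look in range(1, looks + 1):
            # Both arms draw from the same true rate: any "winner" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            n = look * step
            p = z_test_p_value(conv_a, n, conv_b, n)
            if p < alpha:
                hit = True  # a tool that stops at the first positive would stop here
        hits_any_look += hit
        hits_final_look += p < alpha
    return hits_any_look / n_sims, hits_final_look / n_sims


if __name__ == "__main__":
    peeking, fixed = simulate_aa_tests()
    print(f"false positives with peeking: {peeking:.1%}, at final look only: {fixed:.1%}")
```

Because the final look is one of the looks, the peeking rate can never be lower than the fixed-horizon rate, and in runs like this it typically lands well above the nominal 5%.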

There is also the idea that results can be obtained by running many A/B tests at the same time, but this is not advisable. Goodson says that rather than a strategy of "fire enough shots and one will eventually hit", tests should be based on a sound hypothesis and run with a large sample.
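One way to see why running many tests at once is risky is to compute the chance that at least one of them looks significant purely by accident. The sketch below assumes the tests are independent and that none of the changes has a real effect; the Bonferroni adjustment it shows is a standard textbook correction and is not something the article attributes to Goodson. The function names are hypothetical.

```python
def family_wise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one false positive among n independent null tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(n_tests, alpha=0.05):
    """Per-test threshold that keeps the overall false positive rate at or below alpha."""
    return alpha / n_tests


if __name__ == "__main__":
    for n in (1, 5, 20):
        print(f"{n:>2} tests: chance of a spurious winner = "
              f"{family_wise_error_rate(n):.0%}, "
              f"Bonferroni threshold = {bonferroni_alpha(n):.4f}")
```

With 20 simultaneous tests at a threshold of 0.05, the chance of at least one spurious "winner" is already well above one half under these assumptions.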

◆ 3: Regression to the mean

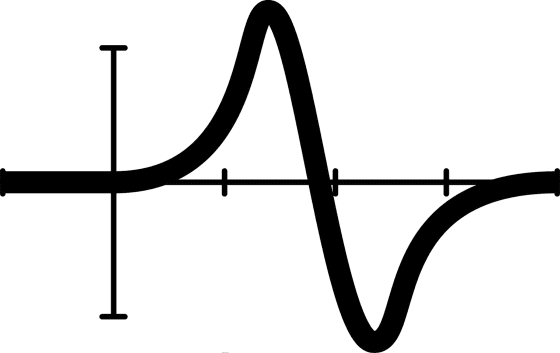

Statistics has a well-known phenomenon called "regression to the mean". For example, a group whose test scores were markedly bad tends to have an average score closer to the overall mean on the next test. Regression to the mean occurs naturally in A/B tests too, so a change that looked effective early on may show a smaller effect as time goes by. If the effect seems to fade with time, there is a good chance that the change was never useful for increasing the conversion rate.

Goodson says that A/B test results often fall victim to the "winner's curse", in which the effect of the winning variant is overestimated. If the effect seems to diminish over time, he says it is important to check whether the A/B test was carried out properly and to run the test again to make the results more reliable.
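The winner's curse and the fading of effects on a retest can both be illustrated with a toy simulation. In the sketch below every change has the same small true lift, each test's measured lift is modelled as a normal draw around it, and only the tests that reach significance are kept as "winners". The normal model and all numbers (a true lift of 0.01 and a standard error of 0.01) are assumptions made for illustration, not figures from Goodson's paper.

```python
import random
from statistics import mean


def simulate_winners_curse(n_tests=10000, true_lift=0.01, std_error=0.01,
                           z_crit=1.96, seed=0):
    """Return (mean lift measured in winning tests, mean lift on retest, winner count)."""
    rng = random.Random(seed)
    # Each test measures the true lift plus sampling noise.
    observed = [rng.gauss(true_lift, std_error) for _ in range(n_tests)]
    # Keep only the tests that look significantly positive.
    winners = [lift for lift in observed if lift / std_error > z_crit]
    # Retesting a winner is a fresh, independent measurement of the same true lift.
    retests = [rng.gauss(true_lift, std_error) for _ in winners]
    return mean(winners), mean(retests), len(winners)


if __name__ == "__main__":
    first, second, count = simulate_winners_curse()
    print(f"{count} winners: first measurement {first:.4f}, retest {second:.4f}, "
          f"true lift 0.0100")
```

Because a winner must clear the significance bar, its first measurement is by construction larger than the true lift in this setup, while the retest falls back toward the true value. That is the same pattern as an effect that appears to fade over time.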

in Note, Posted by darkhorse_log