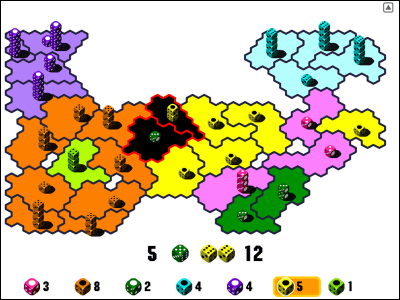

'BalatroBench' is a benchmark that shows which AI can play 'Balatro' best.

A benchmark called 'BalatroBench' has been released that tests the performance of AI models by having them play the poker-roguelike game 'Balatro.'

GitHub - coder/balatrobench: Benchmark LLMs' strategic performance in Balatro

https://github.com/coder/balatrobench

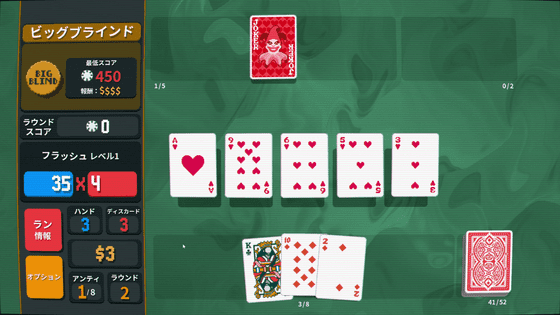

Because Balatro is turn-based, models can be evaluated consistently no matter how fast each one processes its responses. In addition, results submitted to the BalatroBench leaderboard must be run with specified seeds, which makes accurate comparisons between models easier.

A play review of the game 'Balatro' that turns into a rich deck building with a poker + roguelike feel that makes you want to play it over and over again - GIGAZINE

The rules for submitting to the leaderboard require the initial deck (the Red Deck), the initial difficulty, and fixed seeds (AAAAA, BBBBB, CCCCC, DDDDD, and EEEEE), with three plays per seed.
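Because the seeds and the number of plays are both fixed, every submission covers the same 15 runs (5 seeds × 3 plays). The minimal Python sketch below, written under that assumption, enumerates that run matrix and averages the round reached across it. The function names and the results format (a dict keyed by seed and run number) are hypothetical illustrations, not BalatroBench's actual code, and it is an assumption that the leaderboard's "average rounds reached" is a plain mean over these runs.

```python
from statistics import mean

# Seeds and plays per seed as stated in the leaderboard rules.
SEEDS = ["AAAAA", "BBBBB", "CCCCC", "DDDDD", "EEEEE"]
RUNS_PER_SEED = 3


def run_matrix(seeds=SEEDS, runs_per_seed=RUNS_PER_SEED):
    """List every (seed, run_number) pair a submission must cover (hypothetical helper)."""
    return [(seed, run) for seed in seeds for run in range(1, runs_per_seed + 1)]


def average_rounds_reached(results):
    """Plain mean of the round reached over all required runs.

    `results` is a hypothetical dict mapping (seed, run_number) -> round reached.
    Raises ValueError if any required run is missing, so partial submissions
    cannot be averaged over fewer runs than other models.
    """
    required = run_matrix()
    missing = [key for key in required if key not in results]
    if missing:
        raise ValueError(f"missing runs: {missing}")
    return mean(results[key] for key in required)
```

Requiring every (seed, run) pair before averaging reflects the point of fixed seeds: all models are compared over the same set of games.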

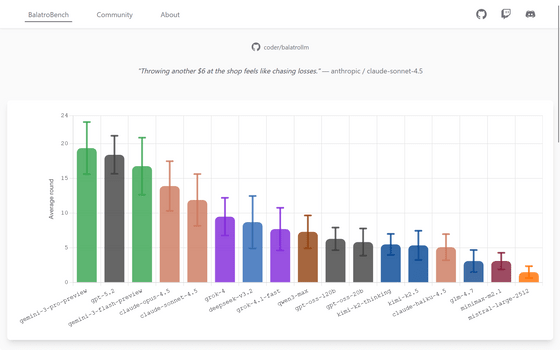

And the actual scores are as follows:

BalatroBench

https://balatrobench.com/

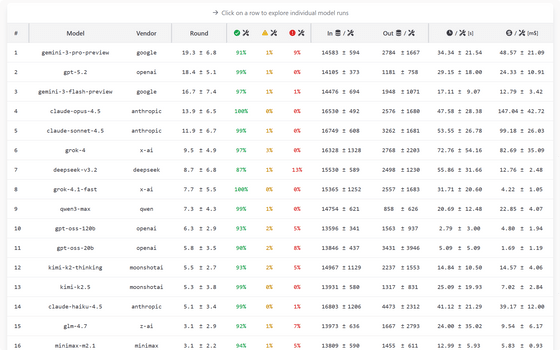

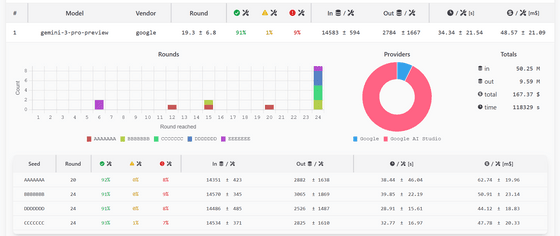

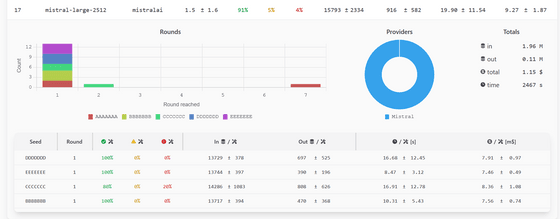

Scrolling down the page shows the detailed figures. From left to right, the table's columns are 'Number (#),' 'Model,' 'Vendor,' 'Average Rounds Reached,' 'Responses with Tool Calls that Can Be Executed in the Current Game,' 'Responses with Tool Calls that Cannot Be Executed in the Current Game,' 'Responses without Valid Tool Calls,' 'Average Input Tokens per Tool Call,' 'Average Output Tokens per Tool Call,' 'Average Time per Tool Call,' and 'Average Cost per Tool Call.'

Clicking a row shows more information about that model. The model with the highest average number of rounds reached, in other words the 'best Balatro model,' was gemini-3-pro-preview, which cleared the 24th and final round an impressive 9 times out of 15 plays.

The worst-performing model on the leaderboard was mistral-large-2512, which lost in the first round in most attempts; even its best attempt only made it to the seventh round.

On the social news site Hacker News, one commenter wrote: 'Google has a library of millions of books scanned through the Google Books project, which began in 2004. It contains many books on how to play various traditional card games effectively. It seems likely that an LLM trained on that dataset could generalize and understand how to play Balatro from text descriptions.'
