'Factorio Learning Environment (FLE)', an environment for evaluating the performance of AI models, is now available

Large language models improve by the day, but the benchmarks used to evaluate multiple models against a common standard are no longer keeping pace with the models themselves, so there is an urgent need for tools that can measure performance accurately. To address this issue, researchers at the AI company Anthropic have built and released a benchmark environment using the game 'Factorio.'

Factorio Learning Environment

https://jackhopkins.github.io/factorio-learning-environment/

In recent years, AI performance has improved at an astonishing pace, with new models often breaking records within just a few months. Model capabilities are quantified with benchmarks, but the benchmarks themselves must be continually upgraded to keep up with the models. This is costly and time-consuming, and it has become a challenge in AI development.


To address these issues, Anthropic's Akbir Khan and others built a benchmark environment using Factorio.

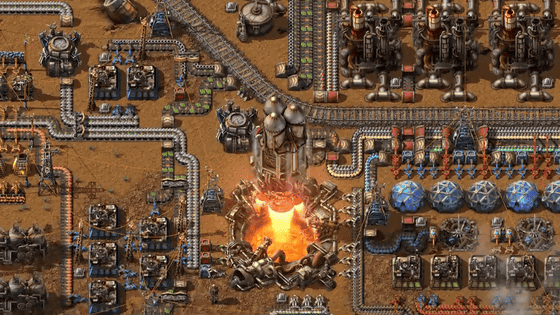

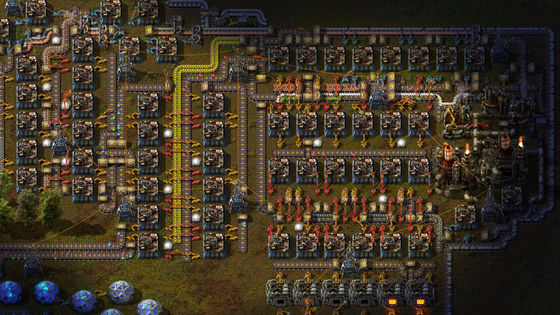

Factorio is a game in which players mine resources, craft items, and automate the construction and operation of facilities, and it is a representative title of the factory-building and automation genre. Although each individual action is simple, players must keep track of many factors, such as where buildings are placed and how resources are managed.

This process grows exponentially more complex as the game progresses. The Factorio Learning Environment (FLE) was built to have AI models carry out these actions and to measure how well they do.

The AI models evaluated in FLE play Factorio by writing programs that build and optimize factories. Like human programmers, they improve through trial and error, steadily speeding up their progress through the game, and they are evaluated on how effectively their actions advance production.
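The key idea is that the model acts by writing code rather than by pressing buttons. The loop below is a minimal sketch of that idea, not FLE's actual API: `ToyFactoryEnv`, `craft`, and `run_step` are hypothetical names invented for illustration. Each agent-written program runs against the game state, and either the new state or the error message is returned so the model can adjust its next attempt.

```python
from dataclasses import dataclass, field


@dataclass
class ToyFactoryEnv:
    """Hypothetical stand-in for a Factorio game state (not the FLE API)."""
    inventory: dict[str, int] = field(default_factory=lambda: {"iron-ore": 10})

    def craft(self, item: str, cost: dict[str, int]) -> None:
        # Check every input first so a failed craft leaves the inventory untouched.
        for res, n in cost.items():
            if self.inventory.get(res, 0) < n:
                raise ValueError(f"not enough {res} to craft {item}")
        for res, n in cost.items():
            self.inventory[res] -= n
        self.inventory[item] = self.inventory.get(item, 0) + 1


def run_step(env: ToyFactoryEnv, program: str) -> str:
    """Execute one agent-written program and return the observation text."""
    try:
        exec(program, {"env": env})
        return f"ok: inventory={env.inventory}"
    except Exception as exc:
        # The error text is fed back to the model instead of crashing the episode.
        return f"error: {exc}"


env = ToyFactoryEnv()
# In FLE the next program would come from the model; here it is a fixed list.
for program in ['env.craft("iron-plate", {"iron-ore": 1})',
                'env.craft("gear", {"iron-plate": 2})']:
    print(run_step(env, program))
```

Returning errors as text, rather than raising them, is what lets a model learn from failed attempts within a single episode.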

For the experiment, Khan and his team gave the AI two goals. The first was to 'build the largest factory possible.' Here the team observed whether the AI could set appropriate goals on its own, how it balanced short-term production against long-term progress, and how far it could expand the factory without external assistance.

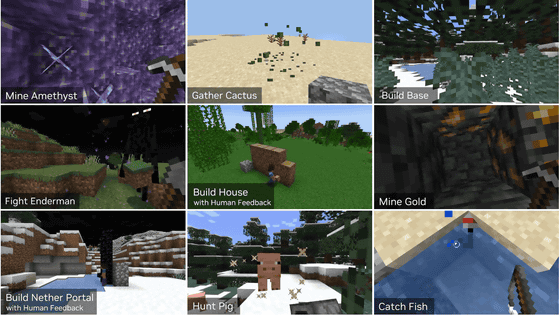

The second was to 'clear specific challenges.' Khan and his team set 24 tasks, ranging from simple ones such as 'making an item that can be produced with as few as two facilities' to complex ones such as 'making an end-game item that requires the coordination of nearly 100 facilities.' Each model started with a fixed set of resources and had a time limit for reaching the objective, and the team evaluated how far it progressed on each task.
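The article does not spell out how 'progress' on a task is scored. One simple possibility, assumed here purely for illustration, is the fraction of a task's production quota that was reached, capped at 1.0, with a task counting as cleared only at 1.0. The function names and this quota-based definition are hypothetical and are not taken from FLE.

```python
def task_progress(produced: float, target: float) -> float:
    """Fraction of the target quota reached, capped at 1.0 (hypothetical metric)."""
    if target <= 0:
        raise ValueError("target must be positive")
    return min(produced / target, 1.0)


def summarize(results: dict[str, tuple[float, float]]) -> tuple[float, int]:
    """Return (mean progress across tasks, number of tasks fully cleared)."""
    scores = [task_progress(produced, target) for produced, target in results.values()]
    cleared = sum(score == 1.0 for score in scores)
    return sum(scores) / len(scores), cleared


# Illustrative numbers only, not results from the paper.
mean, cleared = summarize({"task-a": (16, 16), "task-b": (4, 16), "task-c": (0, 16)})
print(f"mean progress {mean:.2f}, cleared {cleared} task(s)")
```

A partial-credit score like this is what lets a plot show gradual declines in progress rather than only pass/fail results.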

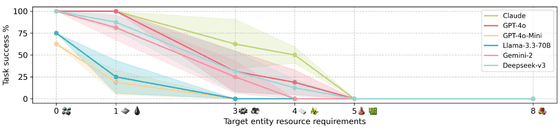

The six models evaluated were Claude 3.5 Sonnet, GPT-4o, GPT-4o-Mini, DeepSeek-V3, Gemini-2-Flash, and Llama-3.3-70B-Instruct. The graph below shows the results of the specific-task experiment, with the horizontal axis showing the number of facilities required to complete the task and the vertical axis showing progress. Progress drops sharply as the number of required facilities increases, and when a task requires more than five facilities, none of the models can clear it.

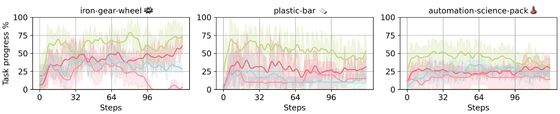

The graph below shows progress over time. Khan et al. state, 'The AI shows a pattern of rapid early progress followed by a plateau or regression. When trying to scale up production or expand into new areas, the AI often appears to destroy existing equipment, which suggests that sustaining consistent performance on complex tasks is very difficult.'

The task the AI struggled with most was producing an item called 'plastic bars.' Plastic bars can only be made by first generating electricity, then mining coal, extracting crude oil from an oil field, refining the crude oil into petroleum gas, and finally combining coal with petroleum gas.
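The plastic bar chain is a small dependency graph, and deciding what to build first amounts to a topological sort. The sketch below encodes the chain described above with Python's standard `graphlib` module (Python 3.9+). The step names are illustrative, and making coal mining depend on electricity assumes electric mining drills are used.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on.
plastic_chain = {
    "electricity": set(),
    "mine coal": {"electricity"},  # assumes electric mining drills
    "pump crude oil": {"electricity"},
    "refine crude oil into petroleum gas": {"pump crude oil", "electricity"},
    "make plastic bars": {"mine coal", "refine crude oil into petroleum gas", "electricity"},
}

# static_order() yields every step after all of its prerequisites.
order = list(TopologicalSorter(plastic_chain).static_order())
print(" -> ".join(order))
```

Every link in this chain has to be working at the same time before a single bar appears, which helps explain why partial progress on this task was so hard to convert into completion.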

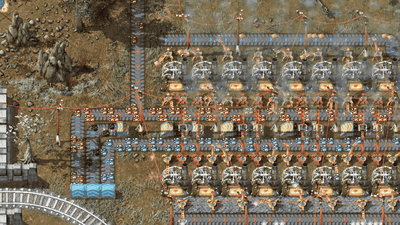

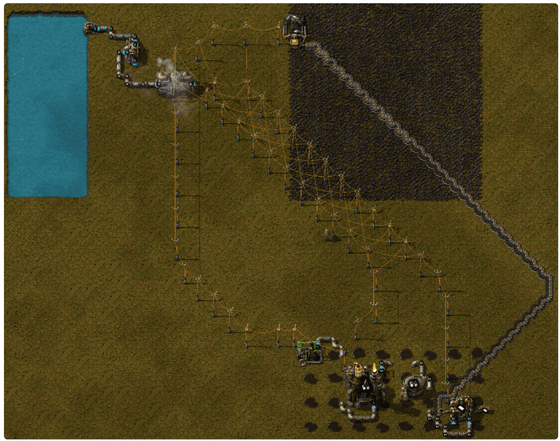

Below is a plastic bar production layout built by Claude 3.5 Sonnet, which can produce 40 plastic bars every 60 seconds. There is plenty to criticize, such as the excessive number of 'electric poles' used to distribute power, but Claude 3.5 Sonnet was the highest-scoring model of all.
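To put the 40-per-minute figure in context, the arithmetic below derives the inputs it implies. It assumes the vanilla Factorio 1.1 recipe (1 coal plus 20 petroleum gas yields 2 plastic bars in 1 second, made in a chemical plant with crafting speed 1); the FLE scenario may differ, and the constant names are illustrative.

```python
from fractions import Fraction

# Assumed vanilla Factorio 1.1 recipe; verify against the game version in use.
RECIPE_INPUTS = {"coal": 1, "petroleum-gas": 20}
BARS_PER_CRAFT = 2
CRAFT_SECONDS = Fraction(1)
CHEM_PLANT_SPEED = Fraction(1)


def inputs_for(bars_per_minute: int) -> dict[str, Fraction]:
    """Per-minute inputs and chemical-plant count needed for a target output."""
    crafts_per_minute = Fraction(bars_per_minute, BARS_PER_CRAFT)
    needs = {item: crafts_per_minute * qty for item, qty in RECIPE_INPUTS.items()}
    # Busy seconds per minute divided by 60 = plants that must run nonstop.
    needs["chemical plants"] = crafts_per_minute * CRAFT_SECONDS / CHEM_PLANT_SPEED / 60
    return needs


for name, amount in inputs_for(40).items():
    print(f"{name}: {amount}")
```

Under these assumptions, 40 bars per minute needs 20 coal and 400 units of petroleum gas per minute and keeps a single chemical plant busy only a third of the time.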

Although Claude 3.5 Sonnet had the highest overall score, it completed only 7 of the 24 tasks. 'This shows there is plenty of room for improvement on this benchmark,' Khan said.

The researchers observed that models with stronger coding abilities (Claude 3.5 Sonnet, GPT-4o) made more progress and completed more tasks. Only Claude 3.5 Sonnet consistently invested resources in researching new technologies, apparently judging such strategic investments worthwhile.

Gemini-2-Flash often behaved myopically, for example manually crafting more than 300 'wooden chests' over 100 steps. Gemini-2-Flash and DeepSeek-V3 performed well in the early stages of the specific-task experiments, but in the open-ended factory-building experiment they did not attempt to build large factories, and their overall performance suffered as a result.

All of the models also struggled with spatial reasoning. In Factorio it is common to leave gaps between buildings for transport and power distribution, but the AI models often placed buildings too close together or misplaced the items that carry resources between them.
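The spacing problem can be made concrete with a simple check on a tile grid. In this sketch, buildings are axis-aligned rectangles, and any pair whose gap (the larger of the horizontal and vertical tile separations) is below `min_gap` tiles is flagged. `Footprint`, `too_close`, the building sizes, and the one-tile default are hypothetical choices for illustration, not FLE's own validation.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Footprint:
    name: str
    x: int  # left-most tile column
    y: int  # top-most tile row
    w: int  # width in tiles
    h: int  # height in tiles


def gap(a: Footprint, b: Footprint) -> int:
    """Free tiles between two rectangles; 0 means touching or overlapping."""
    dx = max(b.x - (a.x + a.w), a.x - (b.x + b.w), 0)
    dy = max(b.y - (a.y + a.h), a.y - (b.y + b.h), 0)
    return max(dx, dy)


def too_close(buildings: list[Footprint], min_gap: int = 1) -> list[tuple[str, str]]:
    """Return every pair of buildings separated by fewer than min_gap tiles."""
    return [(a.name, b.name) for a, b in combinations(buildings, 2) if gap(a, b) < min_gap]


layout = [
    Footprint("drill", 0, 0, 3, 3),
    Footprint("furnace", 3, 0, 2, 2),    # flush against the drill: no room for a belt
    Footprint("assembler", 7, 0, 3, 3),  # two free tiles from the furnace
]
print(too_close(layout))
```

A check like this only catches the "too close" half of the problem; whether the gap actually holds a correctly oriented belt or inserter would need a separate test.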

The AI models were also observed repeatedly attempting invalid operations: instead of looking for alternatives, they would retry an operation that had already caused an error. GPT-4o, for example, repeated an invalid operation 78 times in a row.
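This failure pattern can be measured from an action log. The hypothetical helper below assumes a log of `(action, succeeded)` pairs, a format invented for illustration, and uses `itertools.groupby` to find the longest run of identical consecutive failures, the kind of streak behind the GPT-4o example above.

```python
from itertools import groupby
from typing import Optional


def longest_failed_repeat(log: list[tuple[str, bool]]) -> tuple[Optional[str], int]:
    """Return the action with the longest run of identical consecutive failures."""
    best_action, best_len = None, 0
    # groupby merges consecutive entries with the same (action, succeeded) pair.
    for (action, succeeded), run in groupby(log):
        length = sum(1 for _ in run)
        if not succeeded and length > best_len:
            best_action, best_len = action, length
    return best_action, best_len


log = (
    [("place drill", True)]
    + [("place belt at (5, 5)", False)] * 4
    + [("craft gear", False), ("place belt at (5, 5)", False)]
)
print(longest_failed_repeat(log))
```

Only consecutive repeats count, so the final retry of the belt placement, separated by a different failed action, starts a new run rather than extending the streak.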

'Our results show that even state-of-the-art large language models struggle to coordinate and optimize automation tasks,' Khan et al. said. 'Technology in Factorio grows rapidly in complexity as the game progresses, so the environment should remain challenging even as AI research keeps advancing. This allows models to be meaningfully differentiated.'

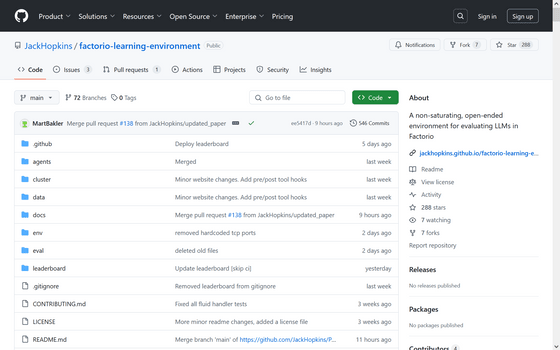

In addition, FLE-related data is available on GitHub.

GitHub - JackHopkins/factorio-learning-environment: A non-saturating, open-ended environment for evaluating LLMs in Factorio
