AI shows no sign of slowing down: the compute used to train it grew about 300,000-fold in six years

Training is indispensable for making computers that use artificial intelligence (AI) produce more accurate judgments. The more training a system receives, the more advanced its judgments can become. However, increasing the amount of training requires systems with enough computing power to handle large-scale processing. OpenAI is a nonprofit AI research organization. According to OpenAI, the amount of compute used in the largest AI training runs has doubled every 3.5 months since 2012, and as of 2018 it had grown more than 300,000-fold.
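As a quick sanity check on these two figures, the snippet below is a minimal sketch. It assumes the growth is a clean exponential with the 3.5-month doubling time quoted above and works out how long a 300,000-fold increase takes. The function names are illustrative and do not come from OpenAI's post.

```python
import math

# Assumption: growth is a clean exponential with the 3.5-month doubling
# time quoted in the article. Real data points scatter around this trend.
DOUBLING_MONTHS = 3.5


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative increase after `months` of steady doubling."""
    return 2 ** (months / doubling_months)


def months_to_reach(factor: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months of steady doubling needed to grow by `factor`."""
    return doubling_months * math.log2(factor)


if __name__ == "__main__":
    months = months_to_reach(300_000)
    print(f"300,000x needs about {math.log2(300_000):.1f} doublings")
    print(f"which takes about {months:.1f} months ({months / 12:.1f} years)")
    print(f"growth over one year: about {growth_factor(12):.1f}x")
```

With these inputs, a 300,000-fold increase is about 18 doublings. That takes a little over five years, which fits the 2012 to 2018 window.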

AI and Compute

https://blog.openai.com/ai-and-compute/

OpenAI names three elements as indispensable for advancing AI: algorithms, training data, and compute. Progress in algorithms and data is difficult to quantify, but compute can be measured. The trend in compute therefore offers a way to gauge how fast AI is progressing.

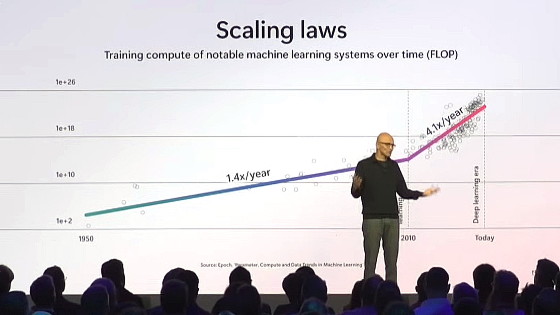

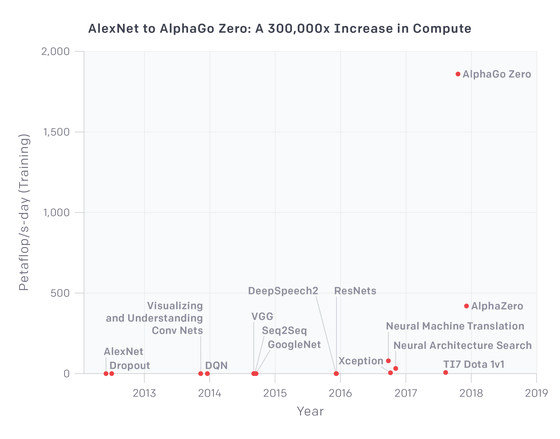

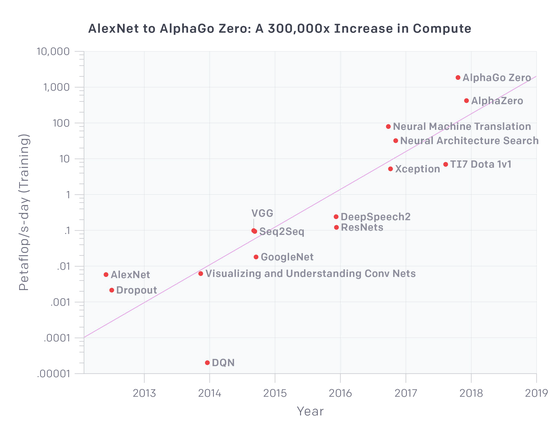

OpenAI graphed the amount of compute used to train AI systems from 2012 onward. The vertical axis shows compute in petaflop/s-days. One petaflop/s-day is the computation performed by running one quadrillion (10^15) operations per second for a full day. The horizontal axis shows the year. The unit is written as "FLOP" (floating-point operation), but it actually counts operations in general, which are not necessarily floating-point arithmetic.
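The vertical-axis unit, 10^15 operations per second sustained for one day (commonly called a petaflop/s-day), is simple arithmetic. A day has 86,400 seconds, so one unit is about 8.64 × 10^19 operations, or roughly 10^20. The conversion helpers below are a minimal sketch, and their names are hypothetical.

```python
# One petaflop/s-day: 10**15 operations per second sustained for one day.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds
OPS_PER_SECOND_PETA = 10**15


def pfs_days_to_ops(pfs_days: float) -> float:
    """Total operations represented by `pfs_days` petaflop/s-days."""
    return pfs_days * OPS_PER_SECOND_PETA * SECONDS_PER_DAY


def ops_to_pfs_days(total_ops: float) -> float:
    """Express a raw operation count in petaflop/s-days."""
    return total_ops / (OPS_PER_SECOND_PETA * SECONDS_PER_DAY)


if __name__ == "__main__":
    print(f"1 petaflop/s-day = {pfs_days_to_ops(1):.3e} operations")
    print(f"1e20 operations  = {ops_to_pfs_days(1e20):.3f} petaflop/s-days")
```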

In the graph above, the value for AlphaGo Zero (2017) stands out so much that the differences in compute among the other systems are barely visible. OpenAI therefore also published a version of the graph with a logarithmic vertical axis. That graph shows the compute used by AI systems rising steadily year after year since 2012.
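A log-scale chart shows exponential growth as a steady climb. Each fixed time step multiplies compute by the same factor, and on a logarithmic axis that means each step adds the same distance. The sketch below illustrates this with the 3.5-month doubling time and a made-up starting value of 0.001. That starting value is an assumption for illustration, not data read from the chart.

```python
import math


def yearly_compute(start: float, years: int, doubling_months: float = 3.5) -> list[float]:
    """Illustrative compute values, one per year, under steady doubling.

    `start` is an arbitrary made-up starting value, not a figure from the chart.
    """
    return [start * 2 ** (12 * y / doubling_months) for y in range(years + 1)]


def log_steps(values: list[float]) -> list[float]:
    """Distance between consecutive points on a base-10 logarithmic axis."""
    logs = [math.log10(v) for v in values]
    return [b - a for a, b in zip(logs, logs[1:])]


if __name__ == "__main__":
    series = yearly_compute(start=0.001, years=5)
    # On a linear axis the last value dwarfs the rest...
    print([f"{v:.3g}" for v in series])
    # ...but on a log axis every yearly step is the same size,
    # so the points fall on a straight line.
    print([round(s, 3) for s in log_steps(series)])
```

With a 3.5-month doubling time, each year adds about 1.03 on the log10 axis, which is roughly a tenfold increase per year.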

OpenAI identifies four eras in the graph above.

· Before 2011: Using GPUs to train AI was uncommon, so reaching the levels of compute shown on the graph would have been difficult.

· 2012 - 2014: Training on GPUs appears. However, know-how for training on many GPUs had not yet accumulated. The practical limit was 1 to 8 GPUs rated at 1 to 2 TFLOPS each, for a total equivalent to 1 trillion to 100 trillion operations per second running for one day.

· 2014 - 2016: Over these two years, the number of GPUs used for training grew to between 10 and 100. With GPUs rated at 5 to 10 TFLOPS, training runs could use compute equivalent to 100 trillion to 10 quadrillion (10^16) operations per second running for one day. However, parallel processing has its limits. As more GPUs are added, overhead such as synchronization between GPUs grows, so compute did not increase in proportion to the number of GPUs. Beyond a certain point, adding more units brought only marginal gains, and simply adding hardware had little effect.

· 2016 - 2017: Rather than relying on more hardware, researchers revisited the algorithms used for parallel processing. This dramatically raised the performance obtainable per unit and made it possible to exceed what had previously been regarded as the limit on compute.
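The era figures can be roughly reproduced from cluster size, using petaflop/s-days (10^15 operations per second sustained for one day) as the unit. The sketch below multiplies GPU count, per-GPU peak TFLOPS, and run length to get that figure. It then uses Amdahl's law as a simplified stand-in for the parallel-processing overhead described for 2014 - 2016. Several choices here are assumptions for illustration, not OpenAI's analysis: Amdahl's law itself, the 95% parallel fraction, the run lengths, and the function names. Real synchronization overhead can also grow with GPU count, which Amdahl's fixed serial fraction does not capture.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP_PER_SECOND = 1e15  # operations per second in one petaflop/s


def cluster_pfs_days(num_gpus: int, tflops_per_gpu: float, days: float) -> float:
    """Petaflop/s-days delivered by a GPU cluster running at its rated peak.

    Assumes every GPU sustains its peak TFLOPS for the whole run,
    which real training jobs rarely achieve.
    """
    total_ops = num_gpus * tflops_per_gpu * 1e12 * days * SECONDS_PER_DAY
    return total_ops / (PETAFLOP_PER_SECOND * SECONDS_PER_DAY)


def amdahl_speedup(num_gpus: int, parallel_fraction: float) -> float:
    """Speedup over one GPU under Amdahl's law (a stand-in overhead model).

    Only `parallel_fraction` of the work spreads across GPUs; the rest is
    treated as serial, so speedup is capped at 1 / (1 - parallel_fraction)
    no matter how many GPUs are added.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_gpus)


if __name__ == "__main__":
    # Hypothetical run lengths: one day for the small cluster,
    # ten days for the large one.
    print(f"8 GPUs x 2 TFLOPS x 1 day:      {cluster_pfs_days(8, 2.0, 1.0):.3f} pfs-days")
    print(f"100 GPUs x 10 TFLOPS x 10 days: {cluster_pfs_days(100, 10.0, 10.0):.1f} pfs-days")

    # Diminishing returns with an assumed 95% parallel fraction.
    for n in (1, 8, 32, 100):
        print(f"{n:>3} GPUs -> {amdahl_speedup(n, 0.95):.1f}x speedup")
```

Under these assumptions, 100 GPUs give a speedup of only about 16.8x, far below 100x. This mirrors the diminishing returns described for the 2014 - 2016 era.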

As of 2018, many hardware startups are developing chips specialized for AI. By 2020, many cheaper, higher-performance products are expected to reach the market. Lower prices increase the size of system that can be built within a given budget, making it possible to build larger systems than ever before. For this reason, OpenAI expects the trend in this graph to continue for several more years.
