What measures did Anthropic take to keep Claude from passing its engineer hiring test?

Anthropic uses a take-home test when hiring engineers. Candidates may use AI on the test, but truly talented engineers are expected to outperform what the AI alone can produce. As AI models have improved, however, the test has become easier to solve, forcing Anthropic to redesign it. Anthropic has shared what it learned while dealing with this problem.

Designing AI resistant technical evaluations \ Anthropic

Anthropic, the developer of the AI model 'Claude,' has used a 'take-home test' to recruit for its performance engineering team since early 2024. Because the test has a time limit and allows the use of AI, it reflects real work more closely than an interview and makes ability easier to assess. The initial version could not be fully solved by AI, so candidates had to demonstrate their own skills while using AI tools. However, the company says it has had to redesign the test each time a new Claude model improved in performance.

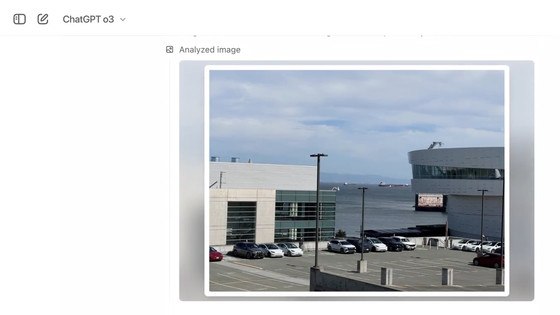

Initially, Claude Opus 4 outperformed most human candidates under the same time constraints, but the strongest humans still did better, which made it possible to identify truly talented engineers. The next model, Claude Opus 4.5, began performing on par with the best engineers. Given unlimited time, humans could still outperform the AI, but under the time limit there was no way to distinguish the best candidate's output from that of the best-performing model.

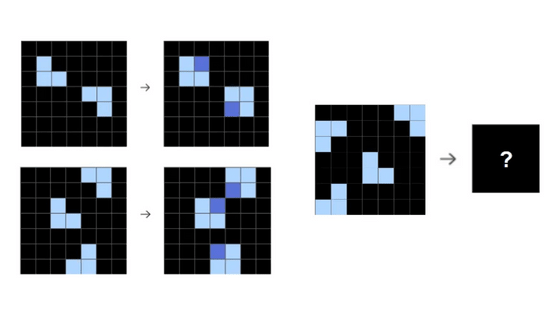

Anthropic's researchers have so far built three versions of the test, each intended to remain meaningful without abandoning the policy of allowing AI use. Along the way, they have gained insight into what AI is and is not good at.

The initial test involved optimizing code for a fictitious accelerator. It required candidates to understand the design, build their own tools, and debug. In the year and a half since it was introduced, about 1,000 candidates have taken it, and many of them have been hired into the performance engineering team.

By May 2025, however, models had improved to the point that, with Claude 3.7 Sonnet, more than 50% of candidates would have scored higher by delegating the task entirely to Claude Code. Furthermore, when Anthropic tested a pre-release version of the next-generation Claude Opus 4, it produced solutions better than almost all human ones, so Anthropic decided to rework the test.

The fix, however, was simple. The original problem had more depth than anyone could fully explore in four hours, so the designers identified where Claude Opus 4 got stuck, rewrote the problem from that point, and cut the time limit from four hours to two. The resulting 'version 2' emphasized clever optimization techniques over debugging and writing large amounts of code, and it worked well for several months.

The next model, Claude Opus 4.5, broke this version as well. Working within the two-hour limit, it reached the passing threshold in under an hour. Its score kept improving the longer it was allowed to work, and it achieved even higher scores after the model's official release.

Colleagues advised Anthropic's test designers to ban the use of AI, but they kept the policy because they felt they needed a way for humans to stand out in a world where AI exists. Still, they worried that any new test they designed would simply be solved by the next model.

One approach Anthropic tried was to make the test more unusual. Taking inspiration from the programming puzzle games made by Zachtronics, Anthropic designed puzzles built around extremely small, constrained instruction sets and incorporated them into the test. This allowed skilled humans to outperform the AI. Unlike the original test, however, it no longer felt realistic, and the tasks bore little resemblance to real-world work.

Anthropic has published the original test and invites anyone who can beat Claude's best score to submit a resume.

GitHub - anthropics/original_performance_takehome: Anthropic's original performance take-home, now open for you to try!

https://github.com/anthropics/original_performance_takehome

in AI, Posted by log1p_kr