OpenAI announces 'o3' and 'o4-mini,' which it calls 'the most advanced reasoning models in the company's history,' with 'Thinking with images' letting them reason over images as well as text

OpenAI has announced new AI reasoning models, 'o3' and 'o4-mini'. OpenAI calls o3 its 'most advanced reasoning model ever' and claims that it outperforms previous models on benchmarks covering mathematics, coding, reasoning, science, and visual understanding.

Introducing OpenAI o3 and o4-mini | OpenAI

OpenAI launches a pair of AI reasoning models, o3 and o4-mini | TechCrunch

https://techcrunch.com/2025/04/16/openai-launches-a-pair-of-ai-reasoning-models-o3-and-o4-mini/

OpenAI's upgraded o3 model can use images when reasoning | The Verge

https://www.theverge.com/news/649941/openai-o3-o4-mini-model-images-reasoning

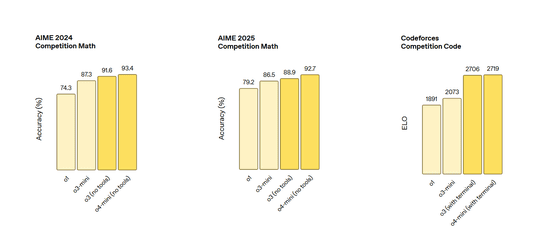

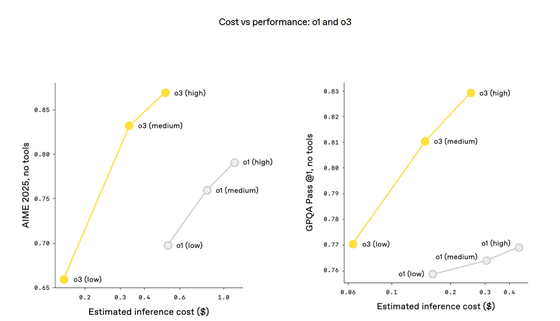

o3 is positioned as OpenAI's 'most powerful reasoning model' and has posted benchmark results that exceed existing models across tasks such as code generation, mathematical analysis, and visual understanding. For example, on AIME 2025 it achieved 88.9% accuracy without tools, and it recorded an Elo rating of 2700 on Codeforces.

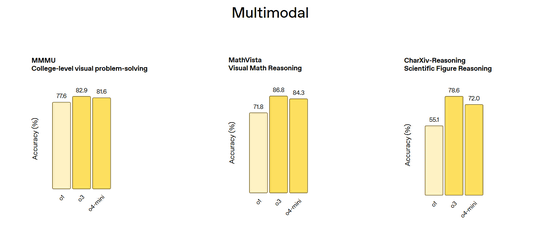

In addition, o3 was more accurate on practical tasks such as programming and consulting, making 20% fewer critical errors than o1. Its ability to handle problems containing images and diagrams has been strengthened in particular, and it scored 82.9% on an academic vision benchmark.

o4-mini is a smaller model optimized for fast, low-cost reasoning. It scored 93.4% on AIME 2024 and 92.7% on AIME 2025, exceptionally high results for a small model. OpenAI says that because the model is so efficient, it comes with more generous usage limits, making it suitable for applications that involve large numbers of requests or need real-time responses.

For both models, OpenAI increased the training compute and the amount of 'thinking' done at inference time by an order of magnitude, and says this reconfirmed that the scaling trend of 'more compute means better performance' also holds for large-scale reinforcement learning. As a result, OpenAI reports higher accuracy than o1 at the same latency and cost, and says that performance continues to improve steadily as the models are given more time to think.

Through reinforcement learning, the models learned not only how to use tools but also when to use them. o3 and o4-mini combine tools such as search, coding, file analysis, and image generation as the task requires, and work toward a solution while revising their plan based on the information obtained along the way.
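The idea of choosing when to call a tool and then revising the plan from what comes back can be pictured with a toy loop. This is only an illustrative sketch, not OpenAI's implementation: `run_agent`, `choose_tool`, `fake_search`, and `fake_python` are hypothetical names, the tools return canned strings, and the hand-written `choose_tool` rule stands in for behavior that the real models learn through reinforcement learning.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A tool takes a sub-task and returns (observation, follow-up sub-tasks).
Tool = Callable[[str], Tuple[str, List[str]]]


def fake_search(item: str) -> Tuple[str, List[str]]:
    # Hypothetical stand-in for web search: the result suggests a new step.
    return ("found a table of figures", ["sum the figures"])


def fake_python(item: str) -> Tuple[str, List[str]]:
    # Hypothetical stand-in for a code tool: nothing further to do afterwards.
    return ("total = 42", [])


TOOLS: Dict[str, Tool] = {"search": fake_search, "python": fake_python}


def choose_tool(item: str) -> Optional[str]:
    # Hand-written rule for WHEN to use a tool (an assumption for this demo).
    if item.startswith("look up"):
        return "search"
    if item.startswith("sum"):
        return "python"
    return None  # no tool needed


def run_agent(task: str,
              policy: Callable[[str], Optional[str]],
              tools: Dict[str, Tool],
              max_steps: int = 10) -> List[str]:
    """Work through a task, calling tools only when the policy asks for one."""
    plan: List[str] = [task]   # pending sub-tasks, revised as we go
    notes: List[str] = []      # observations gathered so far
    for _ in range(max_steps):
        if not plan:
            break
        item = plan.pop(0)
        tool_name = policy(item)
        if tool_name is None:
            notes.append(f"answered directly: {item}")
            continue
        observation, follow_ups = tools[tool_name](item)
        notes.append(f"{tool_name}: {observation}")
        plan = follow_ups + plan   # revise the plan with what was learned
    return notes
```

Calling `run_agent("look up sales figures", choose_tool, TOOLS)` first uses the search stand-in, which adds a new step to the plan, and then the code stand-in, while a task such as "say hello" is answered without any tool.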

Another major feature of o3 and o4-mini is 'thinking with images': the models treat text and images on the same level and build chains of reasoning while manipulating images along the way, for example by rotating or enlarging them.
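To make the image operations concrete, here is a minimal sketch of rotation, cropping, and enlargement on a tiny grayscale image stored as nested lists. It only illustrates the kind of transformation involved and says nothing about how the models apply them internally; the function names are hypothetical, and a real pipeline would use an image library rather than plain lists.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    # Reverse the row order, then transpose.
    return [list(column) for column in zip(*img[::-1])]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a rectangular region, e.g. to focus on one part of a diagram."""
    return [row[left:left + width] for row in img[top:top + height]]


def enlarge(img: Image, factor: int) -> Image:
    """Nearest-neighbour upscaling: repeat every pixel `factor` times per axis."""
    out: Image = []
    for row in img:
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out
```

Cropping a region and then enlarging it corresponds to zooming in on a detail, such as small text in a photographed whiteboard, before reading it.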

Introducing OpenAI o3 and o4-mini—our smartest and most capable models to date.

pic.twitter.com/rDaqV0x0wE — OpenAI (@OpenAI) April 16, 2025

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation.

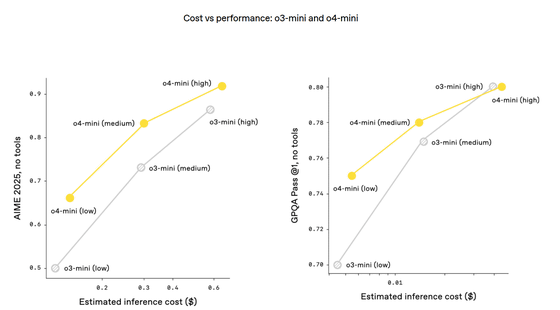

In terms of cost performance, o3 outperforms o1 and o4-mini outperforms o3-mini. For example, on AIME 2025, o3 achieved higher accuracy than o1 at a lower cost.

OpenAI likewise reports that o4-mini delivers better cost performance than o3-mini. o4-mini's 92.7% on AIME 2025 is described as unprecedented for a small model, and OpenAI says that its high throughput makes it particularly effective at handling large volumes of requests and responding in real time.

To improve safety, OpenAI has updated its refusal training data covering biorisks, malware generation, and jailbreak prompts, and reports strong results on its internal refusal benchmarks. It has also introduced a reasoning LLM monitor that works from human-written safety specifications, and confirmed that the monitor can detect and block approximately 99% of biorisk-related interactions.

At the time of writing, o3, o4-mini, and o4-mini-high, a variant of o4-mini, were already available to subscribers of the paid ChatGPT Plus, ChatGPT Pro, and ChatGPT Team plans. Free users can also try o4-mini on a limited basis. Both models are available via the API, and the higher-end o3-pro is to be added soon.
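For readers who want to try the models through the API, the following sketch builds (but does not send) a request that pairs a text prompt with an image. It assumes that OpenAI's Chat Completions endpoint and its `image_url` content-part format apply to these models and that the model ID is `o4-mini`; the helper name `build_request` and the placeholder API key are hypothetical, so check OpenAI's API reference before relying on any of this.

```python
import base64
import json
import urllib.request

# Assumed endpoint: OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(model: str, prompt: str, image_png: bytes,
                  api_key: str) -> urllib.request.Request:
    """Build a POST request carrying a text prompt plus an inline PNG image."""
    # Embed the image as a base64 data URL so no separate upload is needed.
    data_url = "data:image/png;base64," + base64.b64encode(image_png).decode("ascii")
    payload = {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request; a real call needs a valid API key, and availability of o3 and o4-mini may depend on the account.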
