Google releases translation-specialized AI model 'TranslateGemma,' now supports Japanese

Google has announced 'TranslateGemma,' a set of translation models based on its open model 'Gemma 3.'

TranslateGemma: A new family of open translation models

https://blog.google/innovation-and-ai/technology/developers-tools/translategemma/

TranslateGemma Technical Report - 2601.09012v2.pdf

(PDF file) https://arxiv.org/pdf/2601.09012

🗣 Introducing TranslateGemma, our new collection of open translation models built on Gemma 3.

The model is available in 4B, 12B, and 27B parameter sizes, and furthers communication across languages, no matter what device you own. https://t.co/dniQD3RPKP

Google AI Developers (@googleaidevs), January 15, 2026

'TranslateGemma' comes in three parameter sizes, 4B, 12B, and 27B, and supports translation across 55 languages. The 4B model is designed for mobile devices, the 12B model for consumer laptops, and the 27B model is the highest-end version, able to run on a single H100 GPU or a cloud TPU. All three are released free of charge as open models. TranslateGemma offers stronger translation capabilities than its base model, Gemma 3, and outperformed it on several benchmarks.
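As a rough sanity check on how the three sizes map to hardware, the sketch below estimates how much memory the weights alone occupy at different numeric precisions. It is a back-of-the-envelope calculation under stated assumptions, not Google's sizing guidance: it takes the nominal parameter counts at face value, ignores activations, the KV cache, and runtime overhead, and assumes the 80 GB variant of the H100.

```python
# Rough, weight-only memory estimate for the three TranslateGemma sizes.
# Assumptions (not from Google): nominal parameter counts (4e9, 12e9, 27e9);
# activations, KV cache, and framework overhead are ignored.

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}
MODEL_SIZES = {"4B": 4e9, "12B": 12e9, "27B": 27e9}
H100_MEMORY_GB = 80  # assumes the 80 GB H100 variant


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in MODEL_SIZES.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB" for dtype in BYTES_PER_PARAM
        )
        fits = weight_memory_gb(params, "bf16") <= H100_MEMORY_GB
        print(f"{name}: {row} | bf16 weights fit on one 80 GB H100: {fits}")
```

At bf16 the 27B model's weights come to about 54 GB, which is consistent with it fitting on a single 80 GB H100; lower-precision formats such as 4-bit quantization shrink the smaller models further, which is one common route to laptop and mobile deployment.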

When evaluated on the WMT24++ benchmark using the MetricX metric, where lower scores mean fewer translation errors, TranslateGemma scored significantly better than Gemma 3; notably, the 12B TranslateGemma model outperformed the larger Gemma 3 27B baseline. Google claims, 'This is a major win for developers. High-accuracy translation quality can now be achieved with fewer than half the parameters of the base model. This leap in efficiency enables higher throughput and lower latency without sacrificing accuracy.'
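The 'fewer than half the parameters' claim corresponds to the comparison Google's announcement highlights, TranslateGemma 12B against the Gemma 3 27B baseline. Taking the nominal parameter counts at face value, the ratio works out as:

$$
\frac{12\,\text{B}}{27\,\text{B}} = \frac{4}{9} \approx 0.44 < \frac{1}{2}
$$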

TranslateGemma's performance comes from a two-stage fine-tuning process. In the first stage, supervised fine-tuning, the base Gemma 3 model was fine-tuned on a rich dataset combining human-translated text with high-quality synthetic translations generated by Google's state-of-the-art Gemini models. In the second stage, reinforcement learning, reward models built on advanced metrics such as MetricX-QE and AutoMQM were used to further improve translation quality, guiding the model toward contextually accurate, natural-sounding translations.
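To make the second stage more concrete, here is a minimal sketch of how two error-based quality signals could be folded into one scalar reward for reinforcement learning. Everything in it is a hypothetical illustration, not Google's actual reward design: the `ErrorSpan` structure, the severity weights (borrowed from a common MQM-style convention of minor = 1, major = 5), and the equal mixing weights are all assumptions. The only idea taken from the article is that MetricX-QE and AutoMQM both measure errors (lower is better), so their combination is negated to give a reward the model is trained to maximize.

```python
from dataclasses import dataclass

# Hypothetical severity weights following a common MQM-style convention
# (minor = 1, major = 5). These are NOT confirmed TranslateGemma values.
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0}


@dataclass
class ErrorSpan:
    """One error annotation of the kind an AutoMQM-style judge might return (hypothetical)."""
    text: str      # the offending span in the translation
    category: str  # e.g. "accuracy/mistranslation"
    severity: str  # "minor" or "major"


def mqm_penalty(errors: list[ErrorSpan]) -> float:
    """Sum severity-weighted errors; a larger penalty means a worse translation."""
    return sum(SEVERITY_WEIGHTS[e.severity] for e in errors)


def combined_reward(metricx_qe_score: float, errors: list[ErrorSpan],
                    w_metricx: float = 1.0, w_mqm: float = 1.0) -> float:
    """Fold two error-style signals (lower is better) into one reward (higher is better).

    metricx_qe_score: a MetricX-QE-style score; lower means fewer predicted errors.
    w_metricx, w_mqm: hypothetical mixing weights.
    """
    return -(w_metricx * metricx_qe_score + w_mqm * mqm_penalty(errors))


if __name__ == "__main__":
    # Two candidate translations of the same source sentence, with made-up scores.
    clean = combined_reward(1.2, [])
    flawed = combined_reward(4.0, [ErrorSpan("bank", "accuracy/mistranslation", "major")])
    print(f"clean: {clean:.1f}, flawed: {flawed:.1f}")  # RL would favor the higher reward
```

Negating a weighted sum keeps both signals on the same "fewer errors is better" footing; in practice the relative weights would need tuning so that neither metric dominates.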

TranslateGemma inherits Gemma 3's multimodal capabilities, and its improved translation skills also carried over to reading and translating text within images, even though no multimodal-specific fine-tuning was performed during TranslateGemma's training.

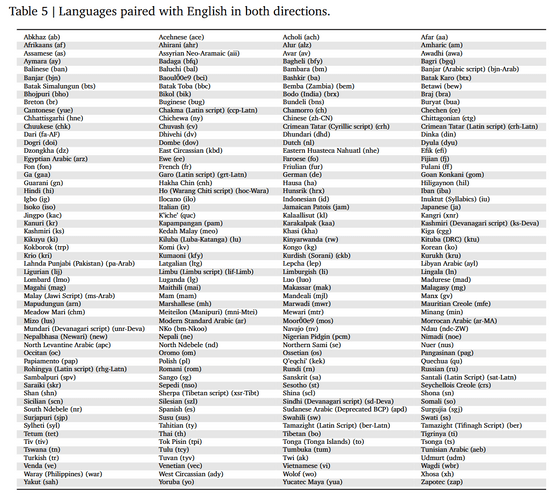

TranslateGemma has been rigorously trained and evaluated on 55 language pairs, including Japanese, to ensure reliable, high-quality performance in major languages like Spanish, French, Chinese, and Hindi, as well as many low-resource languages, Google explained. 'We're excited to see how our community uses these models to break down language barriers and deepen cross-cultural understanding,' it added.

The languages used for two-way translation to and from English are as follows:

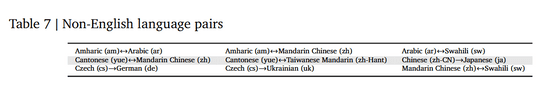

The models have also been trained and evaluated on language pairs that do not involve English.

The TranslateGemma models are available at the following link:

TranslateGemma - a google Collection

https://huggingface.co/collections/google/translategemma

in AI, Posted by log1p_kr