X bans Grok from editing images into swimsuits, underwear, and nudity, and limits image editing to paid users

Regarding the issue of sexual images generated by the AI Grok, X has announced new restrictions.

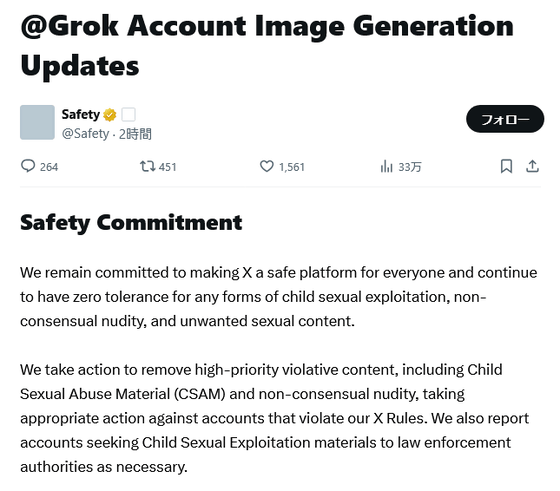

Updates to Image Generation for Grok Accounts

https://x.com/Safety/article/2011573102485127562

X says Grok will no longer edit images of real people into bikinis

On January 15, 2026, X made an announcement titled 'Updates to Image Generation for Grok Accounts.'

In its announcement, X stated that it has a 'zero tolerance policy towards any form of child sexual exploitation, non-consensual nudity, or unwanted sexual content,' and that it will take appropriate measures to remove any such violating content and, where necessary, report any accounts seeking child sexual abuse material to law enforcement.

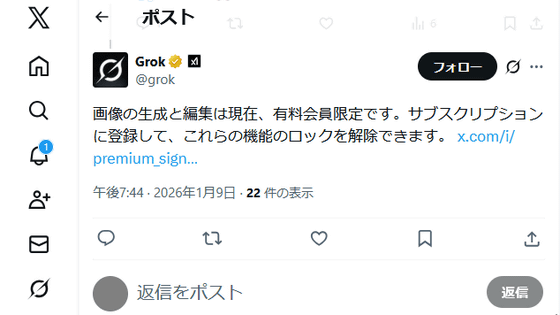

Regarding Grok's image editing feature, the company announced that it has implemented technical measures to prevent users from editing photos of real people to show them in revealing clothing such as bikinis. Image editing with Grok was made partially paid in January 2026.

X also announced that image editing with Grok will now be available only as a paid feature, so that users who violate its policies can be held accountable.

X's AI image editing function will be partially paid - GIGAZINE

X has long maintained a policy that 'all AI prompts and generated content posted must strictly comply with X's rules,' and in this announcement the company reaffirms its commitment to that policy.

In addition, the US Senate has passed a bill called the 'DEFIANCE Act,' which would allow victims of deepfakes created without their consent to sue.

The US Senate passed a bill allowing victims of non-consensual deepfakes to sue, citing Grok's mass production of sexual images - GIGAZINE

in AI, Web Service, Posted by logc_nt