Instagram and Facebook are running large numbers of nuisance and fraudulent ads, including explicit pornography, while Meta removes even posts unrelated to pornography as 'policy violations'

Meta's

Facebook Is Censoring 404Media Stories About Facebook's Censorship

https://www.404media.co/facebook-is-censoring-404-media-stories-about-facebooks-censorship/

Algorithm auditing company AI Forensics

According to AI Forensics, the pornographic ads promoted questionable sexual enhancement products using audio, images, and video, including AI-generated media such as deepfakes of celebrities, and generated more than 8 million impressions per year in the EU alone.

AI Forensics also posted the exact same content as the pornographic ads as regular posts on Instagram and Facebook, and Meta removed those posts for violating its community standards. AI Forensics pointed out that 'this suggests that Meta has technology to automatically detect pornographic content, but does not apply it to ads. This is not a temporary bug, but has been ongoing since at least December 2023.'

There have also been cases where posts without any sexual content were judged to be sexual and removed. The media outlet 404Media reported that 'as a result of one article being deemed sexual, unrelated articles have also been restricted as having sexual content.'

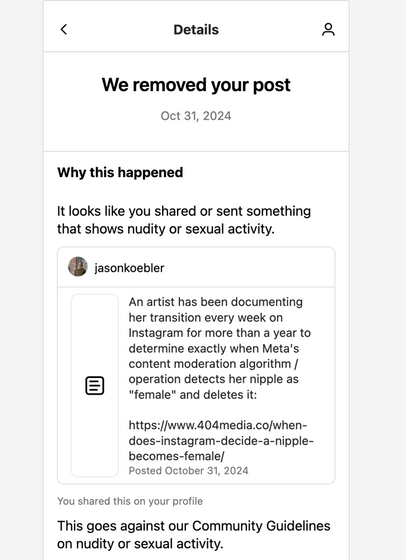

On October 31, 2024, 404Media published an article about 'in transitu,' a project by artist Ada Ada Ada. While taking female hormones and gradually becoming more feminine, she takes a nude photo of herself every week and uploads it to Instagram, to examine at what point Instagram will delete the images for 'violating community guidelines.'

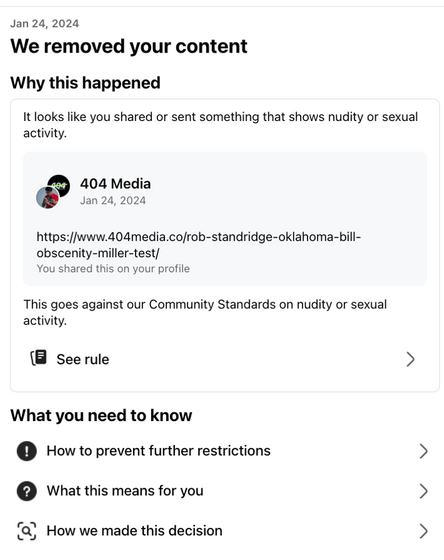

404Media immediately shared the article on Facebook, but Facebook notified 404Media's official account, 'We have removed your photo because it violates our community standards regarding nudity and sexual activity.' In addition, when 404Media co-founder Jason Koebler shared the article on his personal Threads account, the post was removed for the same reason.

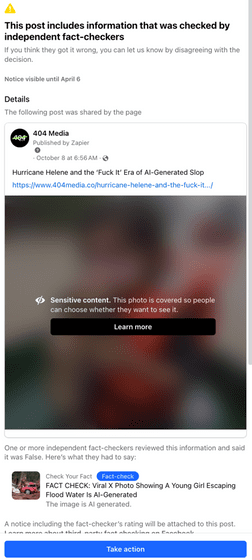

After the posts were removed, Facebook began removing 404Media posts that didn't even involve nudity or sexual activity. In fact, when 404Media shared articles like '

Additionally, some content has been removed for 'containing nudity or sexual acts.'

According to 404Media, none of these articles or the images contained in them violated Facebook's policies, so 404Media appealed the removals to Facebook. With a few exceptions, however, the appeals were rejected.

404Media said it is extremely upsetting to see its journalism removed as pornographic or harmful while Meta's social networking sites accept money from advertisers for adult content, for ads promoting the creation of non-consensual content, and for ads that deceive users.

in Web Service, Posted by log1r_ut