A video of someone building a home AI machine out of a cheap AI server equipped with NVIDIA's commercial-grade GPUs and AI chips is attracting attention.

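NVIDIA's high-end GPU 'H100' and AI processing chip 'GH200' are sold primarily for data centers and are difficult for individuals to obtain. AI researcher David Noel Ng describes how he nonetheless acquired such a server and turned it into a desktop machine in the post below.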

Building a High-End AI Desktop | David Noel Ng

https://dnhkng.github.io/posts/hopper/

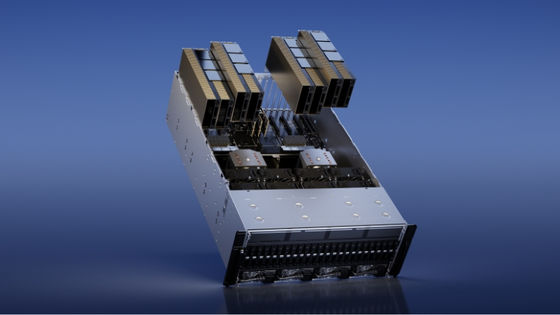

Servers equipped with the H100 and GH200 are traded at prices exceeding 10 million yen per unit. However, in early 2025, David discovered on Reddit that a 'server equipped with two H100s and two GH200s' was being sold for the very low price of 10,000 euros (approximately 1.8 million yen). The specifications of the server being sold were as follows:

・2x Nvidia Grace-Hopper Superchip (GH200)

・2x 72-core Nvidia Grace CPU

・2x Nvidia Hopper H100 Tensor Core GPU

・2x 480GB LPDDR5X memory with ECC

・2x 96GB HBM3 memory

・900GB/s NVLink-C2C

・3000W power supply

・TDP of 1000W to 2000W
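As a rough illustration of what these figures add up to, the short Python sketch below totals the memory listed above. It assumes that each of the two superchips carries one 480GB LPDDR5X pool and one 96GB HBM3 pool, which is how the list reads; the variable names are made up for this example.

```python
# Memory totals from the spec list above.
# Assumption: one LPDDR5X pool and one HBM3 pool per GH200 superchip.
SUPERCHIPS = 2
LPDDR5X_GB_PER_CHIP = 480  # CPU-side memory (ECC)
HBM3_GB_PER_CHIP = 96      # GPU-side memory

cpu_memory_gb = SUPERCHIPS * LPDDR5X_GB_PER_CHIP
gpu_memory_gb = SUPERCHIPS * HBM3_GB_PER_CHIP
total_memory_gb = cpu_memory_gb + gpu_memory_gb

print(f"CPU memory (LPDDR5X): {cpu_memory_gb} GB")
print(f"GPU memory (HBM3):    {gpu_memory_gb} GB")
print(f"Combined:             {total_memory_gb} GB")
```

Under that assumption the machine has a little over 1.1TB of memory in total, most of it on the CPU side.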

The seller explained that the server was so cheap because it was 'a system that had been converted from water-cooled to air-cooled, looked ugly, and couldn't be installed in a rack mount.' David was initially suspicious. However, he learned that the seller was the administrator of 'GPTshop.ai,' an online store that sells NVIDIA data center servers modified for personal use, and that the seller lived a two-hour drive from David's home, so he decided to drive there and settle the transaction in cash. After touring GPTshop.ai's workshop, David purchased the server for 7,500 euros (approximately 1.4 million yen) and took it home.

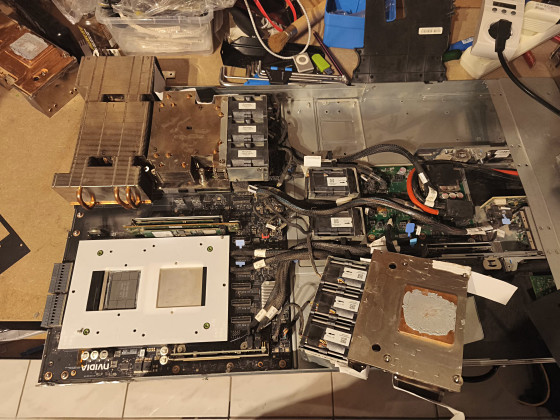

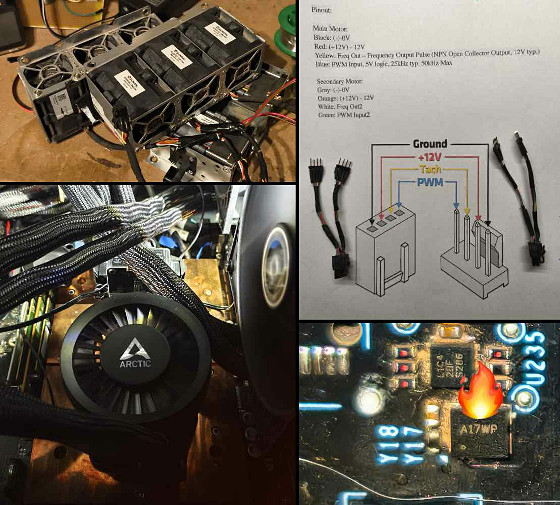

Here's what the server looked like right after David brought it home. It was covered in dust, even inside. It had eight dual fan modules, and when it was turned on, all the fans spun at full speed, emitting a loud, high-pitched noise. The noise could be heard 50 meters away from the basement where the server was located. David's wife, who works from home, even banned him from using it because it was interfering with her remote meetings.

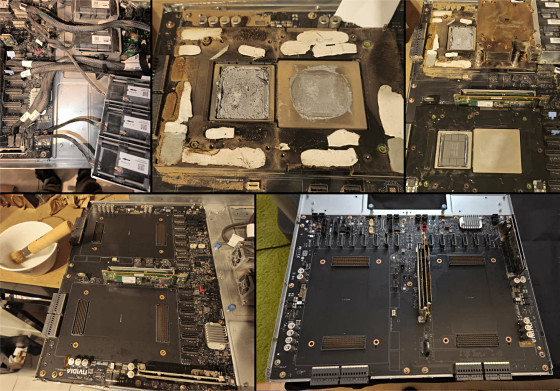

David disassembled the server, vacuumed up the dust, and wiped it down with several liters of isopropanol.

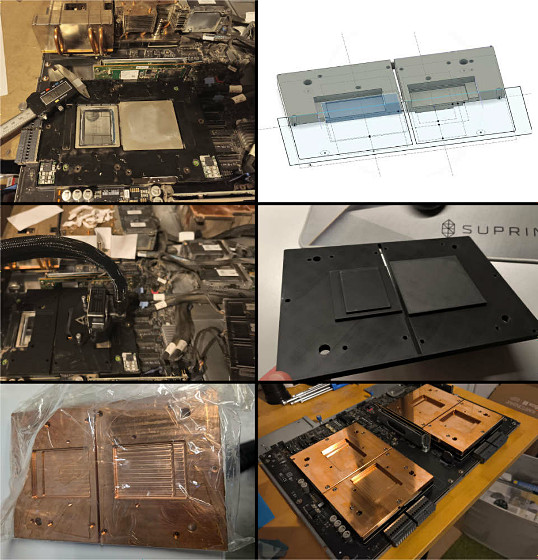

David also replaced the air-cooled system with a water-cooled system. He used the commercially available

During repeated testing, the server began to emit a 'popping, hissing' noise and a smoky smell. This was caused by a fan connector on the motherboard, and the problem was resolved after a soldering repair.

The final machine is shown below. The PC case and various mounts are also David's own designs.

When the AI model 'gpt-oss-120b-Q4_K_M,' released by OpenAI, was run on the completed machine, it generated output at 195.84 tokens per second. Even a high-performance home computer can only output around 10 tokens per second when running gpt-oss-120b-Q4_K_M, so this is literally an order of magnitude faster.
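To put the 'order of magnitude' claim in numbers, the Python sketch below compares the two throughput figures quoted above. The 10 tokens per second baseline is the article's rough estimate rather than a measurement, and the 1,000-token reply length is a hypothetical value chosen only for illustration.

```python
import math

# Throughput figures quoted in the article.
measured_tps = 195.84  # David's machine, gpt-oss-120b-Q4_K_M
baseline_tps = 10.0    # rough figure for a high-performance home computer

speedup = measured_tps / baseline_tps
orders_of_magnitude = math.log10(speedup)

# Hypothetical reply length, chosen only for illustration.
reply_tokens = 1000
seconds_fast = reply_tokens / measured_tps
seconds_slow = reply_tokens / baseline_tps

print(f"Speedup: {speedup:.1f}x ({orders_of_magnitude:.2f} orders of magnitude)")
print(f"{reply_tokens}-token reply: {seconds_fast:.1f} s vs {seconds_slow:.1f} s")
```

With these inputs the speedup comes to a little under 20x, meaning a reply that would take over a minute and a half on the baseline machine finishes in a few seconds.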

The total cost of materials, including the server, was 9,000 euros (approximately 1.7 million yen). David commented, 'With memory prices rising now, the two 480GB ECC LPDDR5X memory modules included in the server alone would exceed the price of the entire server.'
