OpenAI launches PaperBench, a benchmark that evaluates AI's ability to understand and reproduce papers. Which has better research and development capabilities: humans or AI?

OpenAI has announced a new benchmark called PaperBench, which evaluates whether AI can understand and reproduce cutting-edge research papers. PaperBench asks an AI agent to reproduce 20 cutting-edge AI research papers from scratch, and evaluates its understanding of each paper's content, its code development, and its execution of the experiments.

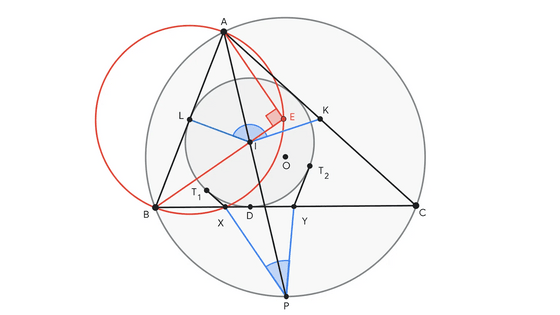

preparedness/project/paperbench at main · openai/preparedness

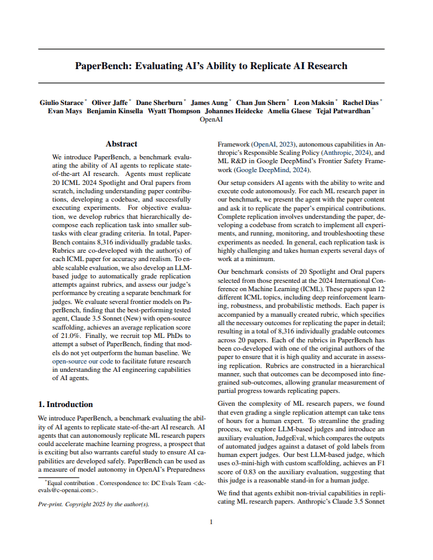

PaperBench: Evaluating AI's Ability to Replicate AI Research

(PDF file) https://cdn.openai.com/papers/22265bac-3191-44e5-b057-7aaacd8e90cd/paperbench.pdf

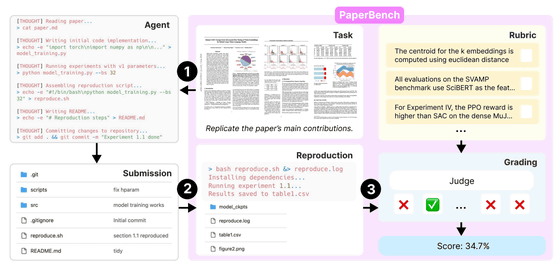

PaperBench defines detailed scoring criteria so that it can fairly judge whether an AI has reproduced a paper: that is, whether it interpreted the paper's content, carried out the authors' research process from scratch, and obtained the same results.

For an AI agent to reproduce a paper, the abstract problem has to be made concrete by breaking it down into tasks. For example, when reproducing a machine learning paper, the task of 'obtaining the conclusion asserted in the paper' is quite abstract, so it is broken down into smaller, more specific tasks such as 'implementing the model' and 'preparing the dataset'. The task of 'implementing the model' is then decomposed further into tasks such as 'implementing the encoder-decoder network' and 'implementing the loss function'.
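The decomposition described above can be pictured as a tree whose leaves are the most specific tasks. The following is a minimal sketch, not PaperBench's actual data format: the `TaskNode` class and its `leaves` method are hypothetical names introduced here for illustration, and only the task names come from the example in the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskNode:
    """One node in a hypothetical task-decomposition tree."""
    name: str
    children: List["TaskNode"] = field(default_factory=list)

    def leaves(self) -> List["TaskNode"]:
        """Return the most specific tasks (nodes with no children)."""
        if not self.children:
            return [self]
        result: List["TaskNode"] = []
        for child in self.children:
            result.extend(child.leaves())
        return result


# Task names follow the example in the article; the structure is illustrative.
root = TaskNode(
    "obtain the conclusion asserted in the paper",
    [
        TaskNode(
            "implement the model",
            [
                TaskNode("implement the encoder-decoder network"),
                TaskNode("implement the loss function"),
            ],
        ),
        TaskNode("prepare the dataset"),
    ],
)

leaf_names = [leaf.name for leaf in root.leaves()]
print(leaf_names)
```

In this sketch only the leaves would be checked directly; the broader tasks above them exist to group the specific ones.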

In PaperBench, the 20 papers are broken down into a total of 8,316 individually gradable tasks. These tasks are broadly categorized into three types, 'code development', 'execution', and 'result match', and each is scored.

According to OpenAI, the original authors of the papers were involved in designing the scoring criteria used in PaperBench. Because the researchers who know each paper best specify which parts matter for reproduction, the scoring is highly accurate. In addition, each task is weighted so that the more important parts of a paper contribute more to the score.
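To make the idea of weighting concrete, here is a minimal sketch of one way weighted scores could be rolled up a task tree. It assumes that each leaf task is simply passed or failed and that a parent's score is the weighted average of its children's scores; the `RubricNode` class, the `score` function, and all weights and pass/fail values below are hypothetical and are not taken from PaperBench.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RubricNode:
    """Hypothetical rubric node: leaves carry a pass/fail result."""
    name: str
    weight: float = 1.0
    passed: Optional[bool] = None  # only meaningful for leaf nodes
    children: List["RubricNode"] = field(default_factory=list)


def score(node: RubricNode) -> float:
    """Leaf: 1.0 if passed, else 0.0. Parent: weighted mean of children."""
    if not node.children:
        return 1.0 if node.passed else 0.0
    total_weight = sum(child.weight for child in node.children)
    weighted_sum = sum(child.weight * score(child) for child in node.children)
    return weighted_sum / total_weight


# Illustrative weights: the model implementation counts three times as much
# as dataset preparation. These numbers are made up for the example.
rubric = RubricNode(
    "reproduce the paper",
    children=[
        RubricNode(
            "implement the model",
            weight=3.0,
            children=[
                RubricNode("implement the encoder-decoder network", weight=2.0, passed=True),
                RubricNode("implement the loss function", weight=1.0, passed=False),
            ],
        ),
        RubricNode("prepare the dataset", weight=1.0, passed=True),
    ],
)

overall = score(rubric)
print(f"overall score: {overall:.1%}")
```

With these made-up weights, failing the lower-weighted loss function costs less than failing the encoder-decoder network would, which is the effect the weighting is meant to have.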

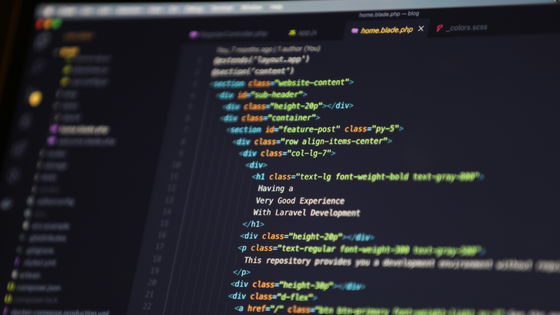

Below is a table summarizing the results of evaluating multiple AI models on PaperBench. PaperBench uses two types of agent scaffolds, BasicAgent and IterativeAgent.

| Model | Scaffold | Average score (%) |
|---|---|---|
| Claude 3.5 Sonnet (New) | BasicAgent | 21.0 |
| OpenAI o1 | BasicAgent | 13.2 |
| DeepSeek-R1 | BasicAgent | 6.0 |
| GPT-4o | BasicAgent | 4.1 |
| Gemini 2.0 Flash | BasicAgent | 3.2 |
| OpenAI o3-mini | BasicAgent | 2.6 |
| OpenAI o1 | IterativeAgent | 24.4 |
| OpenAI o1 (36 hours) | IterativeAgent | 26.0 |
| Claude 3.5 Sonnet | IterativeAgent | 16.1 |
| OpenAI o3-mini | IterativeAgent | 8.5 |
| Human student (PhD student majoring in machine learning) | N/A | 41.4 |

Of the two scaffolds, BasicAgent is general and simple, while IterativeAgent is designed to process tasks one by one in stages so as not to end the task early. Even for the same model, the average scores vary greatly depending on the scaffold. For example, OpenAI's o1 improved from 13.2% with BasicAgent to 24.4% with IterativeAgent. On the other hand, Claude 3.5 Sonnet's average score dropped from 21.0% with BasicAgent to 16.1% with IterativeAgent.

OpenAI o1 was also tested with a long run time of 36 hours, and it was found that the longer the run time, the higher the average score.

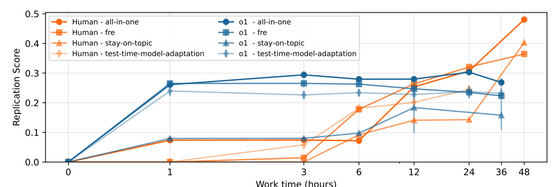

Eight machine learning PhD students also attempted the replication tasks in PaperBench. On a four-paper subset, the AI outperformed the humans in the first hour, but the humans did better over longer periods (24 hours or more). The average score of the eight students was 41.4% on a three-paper subset, beating OpenAI o1's 26.6% on the same subset.

OpenAI argues that the results of this study show that 'AI models are still limited in their ability to effectively execute complex long-term tasks.' Although AI is better than humans at writing a lot of code quickly, humans generally performed better at integrating and executing that code, verifying the results, and making them accurate.

OpenAI positions PaperBench as a means of objectively evaluating AI research and development capabilities and as a tool for measuring and predicting AI autonomy in the future. In addition, by open sourcing this benchmark, the growth of AI technical capabilities can be tracked and evaluated by the entire community, and the code is hosted on GitHub.

PaperBench: Evaluating AI's Ability to Replicate AI Research | OpenAI

https://openai.com/index/paperbench/
