An AI that can perform tasks from text instructions alone and teach those skills to another AI has been developed

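One of the cognitive functions that separates humans from other animals is the ability to perform a variety of tasks by following verbal or written instructions. A research team at the University of Geneva has now developed an AI that can do the same and pass the skills it learns on to another AI.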

Natural language instructions induce compositional generalization in networks of neurons | Nature Neuroscience

https://www.nature.com/articles/s41593-024-01607-5

Two artificial intelligences talk to each other - Medias - UNIGE

https://www.unige.ch/medias/en/2024/deux-intelligences-artificielles-se-mettent-dialoguer

Scientists create AI models that can talk to each other and pass on skills with limited human input | Live Science

https://www.livescience.com/technology/artificial-intelligence/scientists-create-ai-models-that-can-talk-to-each-other-and-pass-on-skills-with-limited-human-input

Performing new tasks without prior training, based solely on verbal or written instructions, is a uniquely human ability. Humans can also use words to teach others the tasks they have learned.

A research team led by Alexandre Pouget, a neuroscientist at the University of Geneva, set out to develop an AI with these human abilities. 'Currently, conversational agents using AI can integrate linguistic information to generate text and images,' Pouget said. 'But they cannot translate instructions into sensorimotor actions, let alone explain them to another AI so that it can reproduce them.'

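Pouget and his team used a natural language processing model called SBERT, a language model pretrained to understand text, and built it into a recurrent neural network (RNN).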

The RNN developed by the research team used its built-in language model, SBERT, to interpret the instructions. Even though it had never been trained on the tasks, it was able to carry out tasks such as 'point in the direction of the stimulus' and 'point to the brighter of two stimuli' from natural-language instructions alone, with a reported average accuracy of 83%.
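The article describes the architecture only loosely, as an RNN with SBERT built in. The sketch below is a hypothetical illustration, not the team's model: it assumes the instruction has already been turned into a fixed embedding vector (made-up numbers here, standing in for SBERT output) that is fed to a small, untrained RNN alongside the stimulus at every time step. The function name `instruction_conditioned_rnn`, the layer sizes, and the 2-D output are all assumptions chosen for illustration.

```python
import math
import random


def instruction_conditioned_rnn(stimuli, instruction_embedding, hidden_size=8, seed=0):
    """Hypothetical, untrained RNN forward pass conditioned on an instruction.

    stimuli: list of per-time-step sensory input vectors.
    instruction_embedding: fixed vector standing in for a sentence embedding
    (e.g. from SBERT); it is appended to the stimulus at every time step.
    Returns one 2-D output (a stand-in motor response) per time step.
    """
    rng = random.Random(seed)  # same seed -> same random weights
    input_size = len(stimuli[0]) + len(instruction_embedding)

    def matrix(rows, cols):
        return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

    w_in = matrix(hidden_size, input_size)    # input -> hidden
    w_rec = matrix(hidden_size, hidden_size)  # hidden -> hidden (recurrence)
    w_out = matrix(2, hidden_size)            # hidden -> 2-D output

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    h = [0.0] * hidden_size
    outputs = []
    for x in stimuli:
        u = list(x) + list(instruction_embedding)  # stimulus + instruction
        # The comprehension reads the previous h before h is reassigned.
        h = [math.tanh(dot(w_in[i], u) + dot(w_rec[i], h)) for i in range(hidden_size)]
        outputs.append([dot(row, h) for row in w_out])
    return outputs


# Made-up stimulus sequence and two made-up "instruction" vectors.
stimuli = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
out_a = instruction_conditioned_rnn(stimuli, [1.0, 0.0, 0.0, 0.0])
out_b = instruction_conditioned_rnn(stimuli, [0.0, 1.0, 0.0, 0.0])
print(out_a[-1], out_b[-1])
```

Because both calls use the same seed, the weights are identical and only the instruction vector differs, so the same stimuli yield different outputs. Training such a network so that its outputs actually match the instructed behavior is beyond this sketch.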

The RNN was also able to transfer the skills it had learned to a sister AI using verbal instructions. 'Once these tasks had been learned, the network was able to describe them to a second network (a copy of the first) so that it could reproduce them,' Pouget said. 'As far as we know, this is the first time two AIs have been able to talk to each other in a purely linguistic way.'

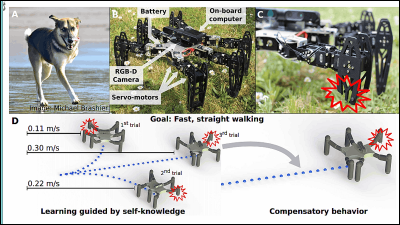

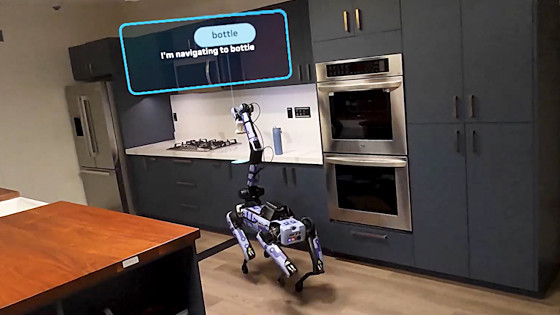

The results of this research are expected to have a promising impact on robotics, a field in which developing technology that lets machines communicate with each other remains a challenge. 'The network we developed is very small,' the research team said. 'Nothing prevents us from building more complex networks on this basis and integrating them into humanoid robots that can understand each other.'
