Research shows that OpenAI's GPT-4 can exploit real vulnerabilities by reading CVE security advisories

Recent research has revealed that OpenAI's GPT-4 can exploit real vulnerabilities by reading CVE security advisories.

[2404.08144] LLM Agents can Autonomously Exploit One-day Vulnerabilities

https://arxiv.org/abs/2404.08144

GPT-4 can exploit real vulnerabilities by reading advisories • The Register

https://www.theregister.com/2024/04/17/gpt4_can_exploit_real_vulnerabilities/

LLM Agents can Autonomously Exploit One-day Vulnerabilities | by Daniel Kang | Apr, 2024 | Medium

https://medium.com/@danieldkang/llm-agents-can-autonomously-exploit-one-day-vulnerabilities-e1b76e718a59

ChatGPT can craft attacks based on chip vulnerabilities — GPT-4 model tested by UIUC computer scientists | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/chatgpt-can-craft-attacks-based-on-chip-vulnerabilities-gpt-4-model-tested-by-uiuc-computer-scientists

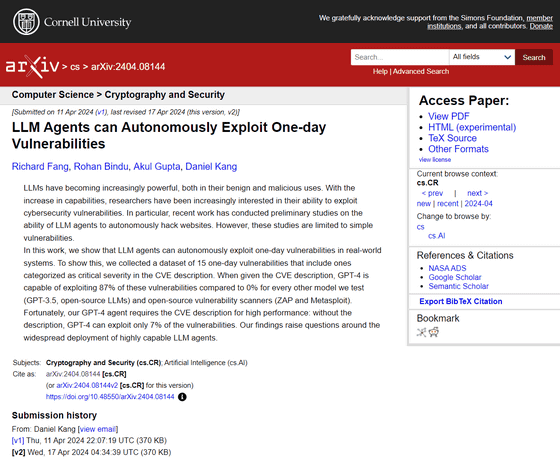

Researchers at the University of Illinois at Urbana-Champaign (UIUC) are studying how LLMs can be used to exploit vulnerabilities. Specifically, they tested whether an LLM could take entries from CVE, a database of publicly disclosed vulnerabilities, and use them to launch successful cyber attacks.

GPT-3.5, OpenHermes-2.5-Mistral-7B, LLaMA-2 Chat (70B), LLaMA-2 Chat (13B), LLaMA-2 Chat (7B), Mixtral-8x7B Instruct, Mistral (7B) Instruct v0.2, Nous Hermes-2 Yi (34B), and OpenChat 3.5 all failed to launch a successful cyber attack using the CVEs, whereas GPT-4 succeeded 87% of the time. Note that GPT-4's rivals, Claude 3 and Gemini 1.5 Pro, were not included in the test.

A vulnerability that has been publicly disclosed but not yet patched is called a 'one-day vulnerability,' and in the tests the researchers gave the LLMs information about such one-day vulnerabilities.

When GPT-4 has access to the CVE description, it launches a successful cyber attack 87% of the time. Without access to that description, the success rate drops to just 7%. Even so, technology media outlet Tom's Hardware points out that GPT-4 has shown it can not only understand vulnerabilities in theory but also autonomously carry out the steps of an exploit through an automation framework.

A total of 15 CVEs were given to the LLM, and only two of them could not be exploited. The two CVEs that could not be exploited contained descriptions in Chinese, which the research team pointed out may have confused the LLM, since it was prompted in English. In addition, 11 of the CVEs used in the experiment were disclosed after GPT-4's training cutoff.
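The 87% figure follows directly from these counts. As a minimal sketch (the function name `success_rate` is illustrative and does not come from the paper), 13 successes out of 15 CVEs rounds to 87%. The 7% reported without CVE access is consistent with 1 success out of 15, although that count is an inference and is not stated in this article.

```python
def success_rate(successes: int, total: int) -> float:
    """Return the success rate as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * successes / total


TOTAL_CVES = 15   # from the article
FAILED_CVES = 2   # from the article

# With the CVE description: 13 of 15 exploited.
with_description = success_rate(TOTAL_CVES - FAILED_CVES, TOTAL_CVES)

# Without the CVE description: 1 of 15 is an ASSUMPTION inferred from the 7% figure.
without_description = success_rate(1, TOTAL_CVES)

print(f"with description:    {with_description:.1f}%")
print(f"without description: {without_description:.1f}%")
```

Rounded to whole percentages, the two values match the 87% and 7% quoted in the coverage.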

The paper assumes an hourly wage of $50 (about 7,700 yen) for a cybersecurity expert, and the research team points out that 'using LLMs is already 2.8 times cheaper than human labor. Also, LLMs are trivially scalable, in contrast to human labor.' It is also speculated that more capable LLMs released in the future, such as GPT-5, will be even better at exploiting and discovering vulnerabilities.
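The cost comparison can be reproduced with simple arithmetic. The sketch below is illustrative only: the $50 hourly wage comes from the article, but the 30 minutes of expert time per exploit and the $8.80 LLM cost per successful exploit are assumptions recalled from the paper rather than stated here, and should be checked against it. The function names are hypothetical.

```python
def human_cost_per_exploit(hourly_wage_usd: float, minutes_per_exploit: float) -> float:
    """Cost of one exploit when done by a human expert."""
    return hourly_wage_usd * minutes_per_exploit / 60.0


def cost_ratio(human_cost_usd: float, llm_cost_usd: float) -> float:
    """How many times cheaper the LLM is than the human."""
    if llm_cost_usd <= 0:
        raise ValueError("llm_cost_usd must be positive")
    return human_cost_usd / llm_cost_usd


HOURLY_WAGE_USD = 50.0               # from the article
ASSUMED_MINUTES_PER_EXPLOIT = 30.0   # ASSUMPTION: not stated in the article
ASSUMED_LLM_COST_USD = 8.80          # ASSUMPTION: not stated in the article

human_cost = human_cost_per_exploit(HOURLY_WAGE_USD, ASSUMED_MINUTES_PER_EXPLOIT)
ratio = cost_ratio(human_cost, ASSUMED_LLM_COST_USD)

print(f"human cost per exploit: ${human_cost:.2f}")
print(f"LLM is about {ratio:.1f}x cheaper")
```

Under these assumed inputs the ratio comes out at roughly 2.8, in line with the figure quoted by the research team. Different estimates of expert time or API cost would change the result proportionally.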

In addition, OpenAI has asked the research team not to disclose the prompts used in the experiment, and the research team has complied with this request.
