Anthropic develops a method to predict 'very rare dangerous behavior' in AI models

Pre-release safety testing is essential to making AI safer, but dangerous behavior that occurs only very rarely can be overlooked in normal testing. To address this, AI company Anthropic has developed a method for predicting such very rare behaviors.

Forecasting rare language model behaviors \ Anthropic

In AI development, it is necessary to minimize the possibility that an AI will lie or provide dangerous information in response to malicious questions. However, even if an AI has been tested thousands of times in a test environment without any problems, it may still produce a problematic response once it has been released and used billions of times.

Even if such information is provided with an extremely low probability, such as 'one in billions,' a single occurrence would be a serious problem, and safety tests run at a scale of only thousands of queries would fail to catch it. To address this, Anthropic investigated whether a model's safety can be checked with a small number of tests.
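The gap between test scale and deployment scale can be made concrete with a little arithmetic. The following is a minimal sketch, assuming every query is independent and has the same fixed probability `p` of a harmful response. That assumption, the function name `prob_at_least_one`, and the numbers are illustrative and do not come from Anthropic.

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Probability that an event with per-query probability p
    occurs at least once in n independent queries.

    Computes 1 - (1 - p)**n via log1p/expm1 so that very small
    values of p do not get lost to floating-point rounding.
    """
    return -math.expm1(n * math.log1p(-p))


# Hypothetical numbers: a "one in a billion" failure rate.
p = 1e-9
few = prob_at_least_one(p, 10_000)           # a test run of 10,000 queries
many = prob_at_least_one(p, 5_000_000_000)   # 5 billion queries after release

print(f"10,000 test queries:  {few:.6%}")
print(f"5 billion real queries: {many:.2%}")
```

Under these assumptions the test run almost never observes the failure, while the deployment almost certainly does, which is why a forecasting method is needed.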

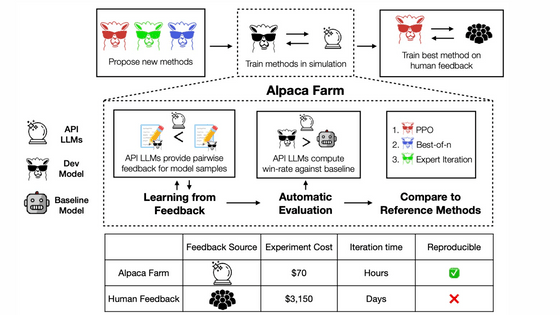

First, the Anthropic research team gave the AI a large number of prompts and, for each one, calculated the probability that the AI would give a harmful response, as a measure of how risky that prompt was.

The team then looked at the prompts with the highest risk of a harmful response and plotted them against the number of queries. Interestingly, they found that the relationship between the number of queries and the risk probability follows a distribution known as a 'power law.'

Anthropic argues that 'the properties of power laws are so well understood mathematically that even if we test only a few thousand queries, we can calculate the worst-case risk for millions of queries.'
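The idea of extrapolating along a power law can be sketched in a few lines. This is not Anthropic's actual procedure, which the article does not describe in detail. It is a generic least-squares fit of `risk = a * n**b` on a log-log scale, and the data points, function names, and constants are all made up for illustration.

```python
import math


def fit_power_law(ns, risks):
    """Fit risk = a * n**b by ordinary least squares on log-log data."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(r) for r in risks]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                       # slope on the log-log plot
    a = math.exp(mean_y - b * mean_x)   # intercept, back in linear scale
    return a, b


def predict(a, b, n):
    """Extrapolate the fitted power law to n queries."""
    return a * n ** b


# Synthetic observations (hypothetical): worst-case risk seen at small scales.
ns = [100, 300, 1000, 3000]
risks = [2e-6 * n ** 0.8 for n in ns]

a, b = fit_power_law(ns, risks)
forecast = predict(a, b, 1_000_000)
print(f"a={a:.3g}, b={b:.3g}, forecast at 1M queries={forecast:.3g}")
```

Because a power law is a straight line on a log-log plot, a fit to a few thousand queries can be extended to much larger query counts. Real measurements are noisy, so such a forecast comes with uncertainty that this sketch ignores.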

To see how accurate the predictions are, Anthropic ran a number of different scenarios.

First, to examine the risk of the AI providing dangerous information, such as 'how to make harmful chemicals,' the researchers estimated the risk for 900 to 90,000 queries. They found that 86% of the power law predictions were within one order of magnitude of the actual measurements.
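One way to read 'within one order of magnitude' is that the prediction and the measurement differ by a factor of 10 or less. The sketch below assumes that reading. The prediction and measurement pairs are invented for illustration and are not Anthropic's data.

```python
import math


def within_one_order(predicted: float, actual: float) -> bool:
    """True if predicted and actual differ by at most a factor of 10."""
    return abs(math.log10(predicted / actual)) <= 1.0


def fraction_within(pairs) -> float:
    """Share of (predicted, actual) pairs within one order of magnitude."""
    hits = sum(within_one_order(p, a) for p, a in pairs)
    return hits / len(pairs)


# Hypothetical (predicted, actual) risk pairs.
pairs = [
    (1e-4, 3e-4),  # off by a factor of 3: counts as a hit
    (2e-5, 5e-4),  # off by a factor of 25: counts as a miss
    (1e-3, 8e-4),  # hit
    (5e-6, 4e-6),  # hit
]
frac = fraction_within(pairs)
print(f"{frac:.0%} of forecasts within one order of magnitude")
```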

Next, they evaluated whether the AI would behave in a 'power-seeking' or 'self-protective' manner. The mean absolute error of the power law prediction was 0.05, much lower than the 0.12 of the conventional method. In other words, the power law prediction was more accurate than the conventional method.
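Mean absolute error is the average size of the gap between each forecast and the measured value, so a smaller number means a more accurate forecast. The following sketch shows the calculation on made-up values. The lists `forecast_a` and `forecast_b` are hypothetical stand-ins for two forecasting methods and do not reproduce Anthropic's figures.

```python
def mean_absolute_error(predicted, actual):
    """Average of |predicted - actual| over paired values."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


# Hypothetical measured risks and two sets of forecasts.
actual = [0.10, 0.30, 0.50]
forecast_a = [0.12, 0.27, 0.52]  # close to the measurements
forecast_b = [0.20, 0.20, 0.60]  # further from the measurements

mae_a = mean_absolute_error(forecast_a, actual)
mae_b = mean_absolute_error(forecast_b, actual)
print(f"MAE of method A: {mae_a:.3f}")
print(f"MAE of method B: {mae_b:.3f}")
```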

Anthropic said, 'It is virtually impossible for regular testing to detect all rare risks. Our method is not a perfect solution, but it may improve the accuracy of predictions compared to traditional testing.'
