OpenAI releases a demonstration tool that makes the language model explain the language model

Large-scale language models (LLMs) such as ChatGPT are often called ``black boxes'' because the mechanism of operation is difficult to understand, spurring debate on the dangers of AI and the difficulty of predicting it. In order to advance the understanding of LLM, OpenAI has released a tool to elucidate the function of LLM using LLM.

Language models can explain neurons in language models

OpenAI's new tool attempts to explain language models' behaviors | TechCrunch

https://techcrunch.com/2023/05/09/openais-new-tool-attempts-to-explain-language-models-behaviors/

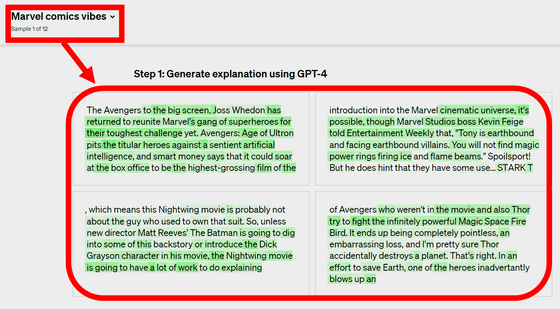

The premise is that the LLM is made up of 'neurons' similar to the brain. So, for example, running a text sequence about 'Marvel Comics' will likely activate neurons about heroes in Marvel Comics and output the hero and related words. In this demo, the operation of GPT-2 is evaluated using GPT-4, which is the latest model at the time of article creation.

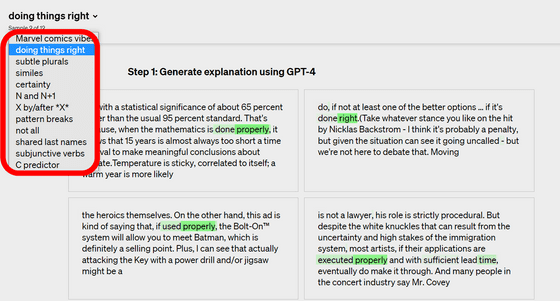

A total of 12 text samples are available, including 'Marvel Comics'.

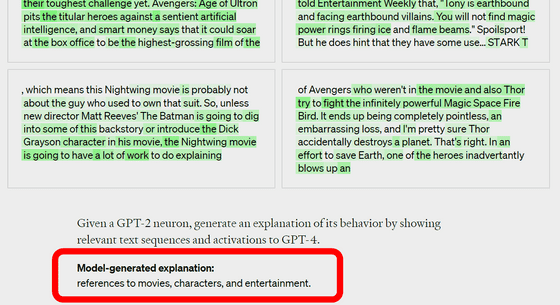

Once the output and neuronal activity of GPT-2 is shown, show this to GPT-4 to generate an explanation. GPT-4 gave a description of the result of the aforementioned 'Marvel Comics' text sequence as 'a reference to movies, characters, and entertainment.'

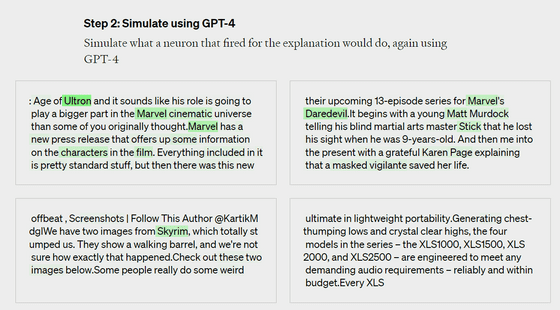

Next, to determine the accuracy of GPT-4's description, GPT-4 is also given a text sequence to simulate neuron behavior.

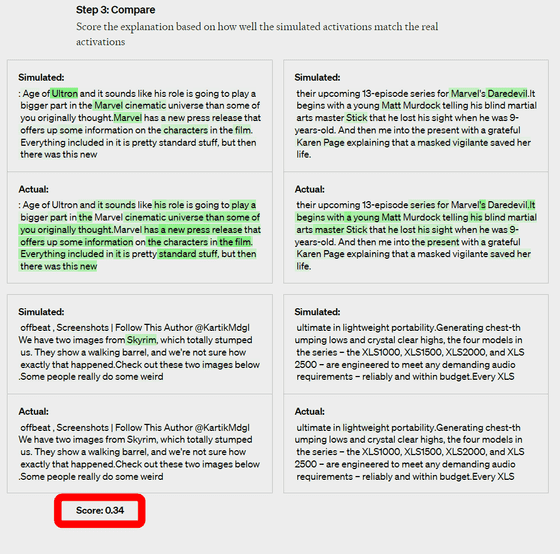

Then, the simulated neuron activity (upper row) and the actual neuron activity (lower row) are compared and scored. In this case, the score was '0.34'.

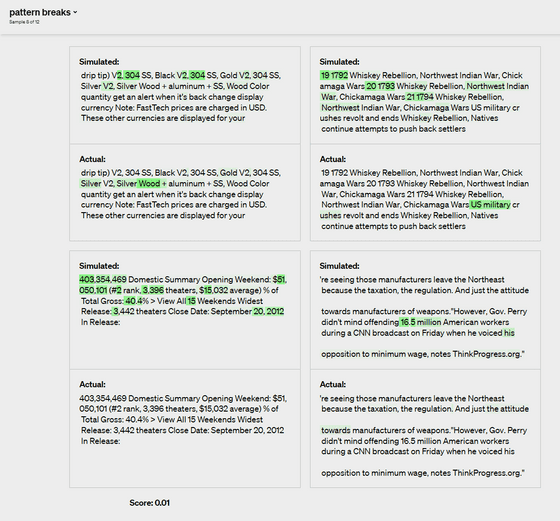

Among the 12 samples, the scores varied from '0.01' to '0.86'.

Jeff Wu, who leads the Scalable Alignment team at OpenAI, says, 'With this method, we basically derive a preliminary description of what it's doing in natural language for every neuron, and then that description is actually You can get a score of how well it matches the behavior of

In fact, in this way Wu and colleagues were able to generate explanations for all 307,200 neurons of GPT-2. Researchers hope that such tools could be used to improve the performance of LLM, including by reducing bias and harmfulness of AI.

But we still have a long way to go before it becomes tangible. In this example, out of the 307,200 neuron explanations, only about 1,000 could be said to have been explained with confidence.

``Most of the explanations scored very low or failed to explain the behavior of real neurons,'' Wu said. For example, it activates for 5 or 6 different things, but it can't find a pattern, and even if there is a discernible pattern, it finds it in GPT-4. Sometimes I couldn't do it,' he said.

If even GPT-2 is hard to understand, the more complex and large-scale LLMs and models that can get information from the Internet at any time are even more difficult to understand. For the latter, however, Wu believes that being able to browse the Internet doesn't change the underlying mechanics much. That's because you can do a little more to see why that neuron searched for a particular search term, or why it landed on a particular site.

Wu said, 'We hope that the tools we've published will open up promising avenues in tackling interpretability in an automated way that third parties can build and contribute to. Ultimately, it's not just about what the neurons are responding to, but the overall behavior of the model: ``What kind of circuits are you computing?'' and ``Which neurons affect which neurons? It would be ideal to be able to obtain an appropriate explanation for questions such as 'Are you giving?'

Related Posts:

in Software, Posted by log1l_ks