This is the first criminal case in which a stalker used AI to create a chatbot that impersonated the victim in order to harass her.

With the development of AI technology, it is now possible to create images that are easily mistaken for the real thing, and chatbots convincing enough that users believe they are talking to a human. A stalking case brought in Massachusetts is reported to be the first in which a chatbot was given personal information about a stalking victim and then used to harass her by impersonating her.

A man stalked a professor for six years. Then he used AI chatbots to lure strangers to her home | Technology | The Guardian

The suspect, James Florence of Massachusetts, stalked a woman who had been his friend from 2017 to 2024, stealing underwear from her home and making prank calls. The stalking escalated to the point where the victim told police she felt physically threatened, and she and her husband had to take measures such as installing surveillance cameras and carrying pepper spray.

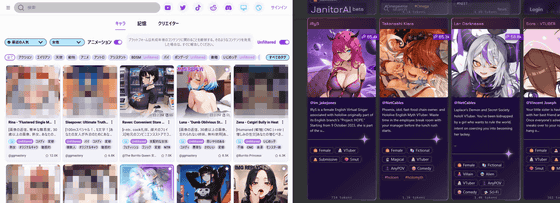

What set this stalking case apart was the use of chatbots as part of the harassment. According to court documents, the suspect used platforms such as CrushOn.ai, which lets users chat with character-based chatbots as friends and engage in sexual conversations, and JanitorAI, which lets users search for and interact with chatbots that have a wide range of attributes.

Many AI chatbot platforms let users design their own chatbots, setting the attributes and background details of the character they will converse with. The suspect entered the victim's real address, date of birth, family information, and other personal and professional details into a chatbot, creating an 'impersonation chatbot' with a strongly sexual persona. Its description read: '[Victim's real name] is married and works at a university. How would you seduce her? And once you have seduced her, what would you do with this married woman?'

The impersonation chatbot engaged users in sexually provocative conversations, for example asking them what kind of underwear they liked to wear, and was also set up to invite users over with lines such as 'Why don't you come over to my house?' In some cases it gave out the victim's real address, and strangers ended up parking their cars right in front of her house.

The case, filed in federal court in Massachusetts, is believed to be the first in which a stalker has been charged over using a chatbot to impersonate a victim. 'This defendant was harassing and blackmailing people, which has always been done, but the tools he was able to use here made it so much worse,' said Stephen Turkheimer, vice president of public policy at a nonprofit anti-sexual-assault organization. 'This case highlights a new and very disturbing way that stalkers are using AI to target their victims.'

Besides the woman impersonated by the chatbots, the suspect targeted seven other women, editing their photos in Photoshop to create semi-nude images and setting up fake accounts on Facebook and other platforms. This kind of AI-enabled harm has drawn growing attention in recent years, with reports of male students creating 'deep nudes' of girls and the UK passing a law to crack down on tools that use AI to generate child pornography. 'There is an ongoing and growing problem of AI increasing the efficiency of sexual abuse and exacerbating the damage,' Turkheimer said. 'The more people have access to AI technology, the more likely it is to be used to harm people.'


in Web Service, Posted by log1e_dh