Alibaba's Qwen team releases 'QVQ', an open-source AI model that can recognize images

The research team behind Alibaba's large-scale language model 'Qwen' has released 'QVQ-72B-Preview' as an experimental research model focused on enhancing visual reasoning capabilities.

QVQ: To See the World with Wisdom | Qwen

https://qwenlm.github.io/blog/qvq-72b-preview/

Qwen/QVQ-72B-Preview · Hugging Face

https://huggingface.co/Qwen/QVQ-72B-Preview

The QVQ-72B-Preview is a model based on the Qwen2-VL-72B with enhanced visual reasoning capabilities. The original Qwen2-VL-72B was released in September 2024 and was capable of understanding videos and using multiple languages.

Alibaba releases new AI model 'Qwen2-VL', which can analyze videos longer than 20 minutes and summarize and answer questions about the content - GIGAZINE

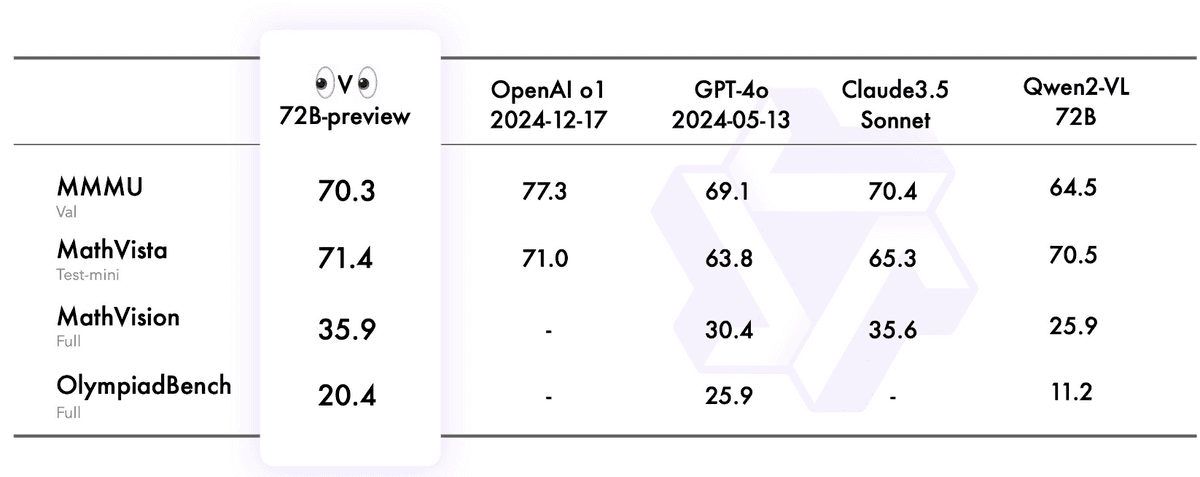

The benchmark results for QVQ-72B-Preview are shown in the figure below. The 'MMMU' benchmark, which evaluates the model's comprehensive understanding and reasoning capabilities related to vision, significantly outperforms the previous model, Qwen2-VL, and narrows the gap with the state-of-the-art model, OpenAI's o1 model. In addition, the scores for the other three benchmarks, which measure mathematical and physical capabilities, also improved significantly from Qwen2-VL.

In addition, the research team stated that 'QvQ-72B-Preview is an experimental research model,' and pointed out that at the time of writing, it was not possible to input video, which was possible with the previous Qwen2-VL model, and that there are some issues that may arise when it is put into practical use, as follows.

・Multiple languages may be mixed when responding

May fall into circular logic patterns during reasoning, generating redundant responses without reaching a conclusion

・Separate safety measures are required to ensure security and reliability

Loss of focus on image content during multi-step visual inference can lead to hallucinations

A demo of the QvQ-72B-Preview is now available

on Hugging Face, and engineer Simon Willison tried it out and reported his results on his blog .One prompt Willison tried that worked was to show a picture of a pelican and ask participants to 'count how many there are.'

The reply from QVQ-72B-Preview is translated into Japanese as follows. In addition to counting the number of birds, it also mentions the pelican that is slightly visible at the edge of the screen.

You have a picture of a pelican and you need to count them. Let's think about it for a moment... First of all, we know that a pelican is a big bird with a big beak, right?

[Omitted]

Let's count again, more carefully this time, starting from the left.

1. The first pelican stands directly in front and is fully visible.

2. Behind it, a little to the right, is a second pelican.

3. And further back there is a third pelican.

4. And next to it is a fourth pelican.

So that's four pelicans in total, but there is part of another bird on the right edge of the image, possibly the back or tail feathers of a pelican, but it's not entirely in the frame so I don't think it should be counted as a whole pelican.

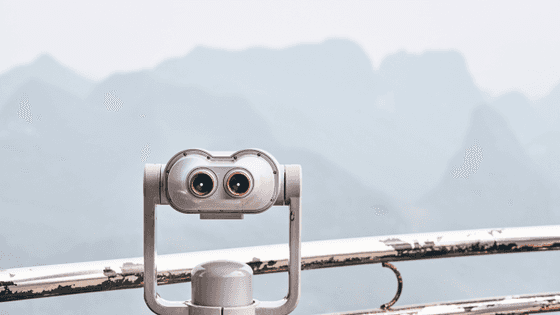

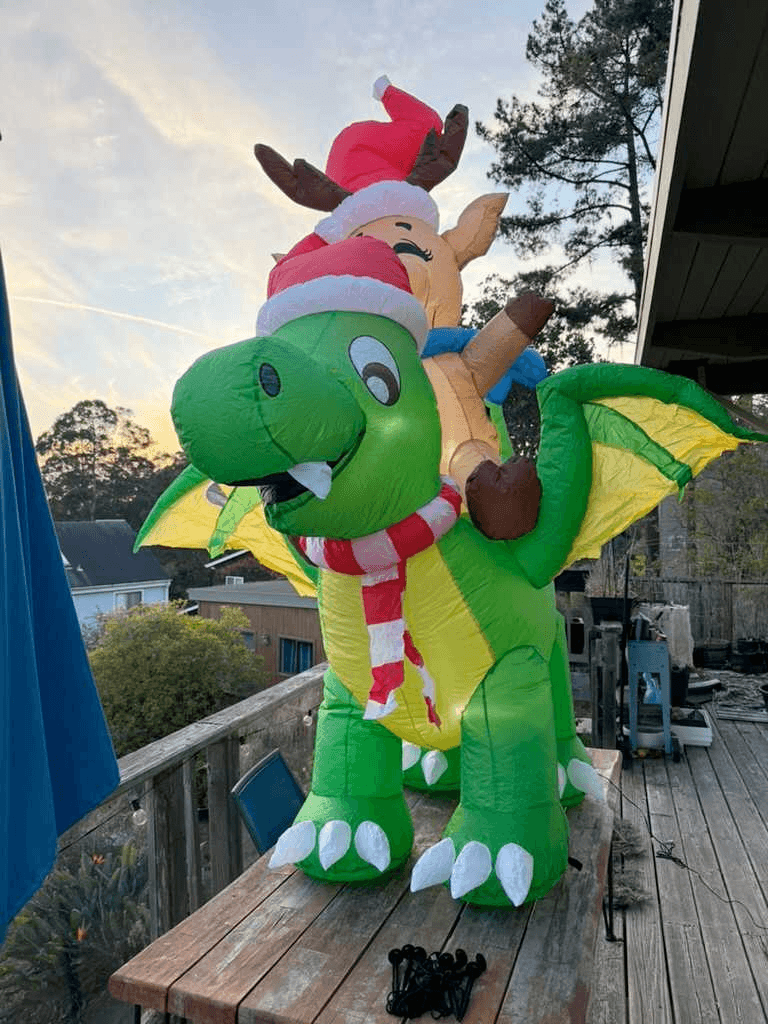

Another interesting answer that Willison mentioned was when he showed participants the image below and asked them to 'guess the height of a dinosaur.'

QVQ-72B-Preview stated that he was 5 feet 10 inches (178 cm) tall, and estimated that he was 8 to 9 feet (244 to 274 cm) taller than himself. Willison commented, 'I wonder how he determined his height.'

Related Posts: