AI successfully recreates real scenery from environmental sounds

A research team from Wuhan University and other institutions has announced that it has succeeded in converting recorded audio into images of the surrounding scenery using a specially designed AI.

From hearing to seeing: Linking auditory and visual place perceptions with soundscape-to-image generative artificial intelligence - ScienceDirect

Researchers Use AI To Turn Sound Recordings Into Accurate Street Images - UT News

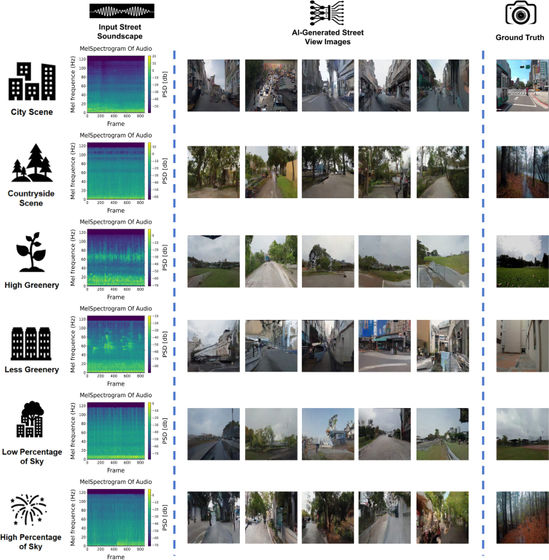

Yonggai Zhuang of Wuhan University and his colleagues used YouTube videos and audio recorded in cities in North America, Asia, and Europe to create pairs of 10-second audio clips and still images. They used these pairs to train an AI model that can generate high-resolution images from audio.

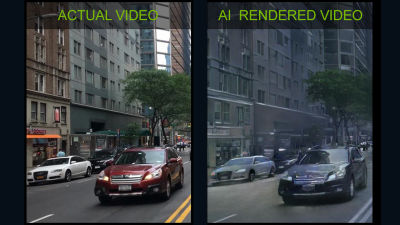

The AI was then given 100 different audio samples and asked to generate an image for each. The generated images were compared with the real images and rated for accuracy by both humans and computers. In the computer evaluation, each generated image was compared with its real counterpart in terms of the relative proportions of buildings, sky, and vegetation.

The results showed that the proportions of sky and vegetation were strongly correlated between the generated images and the real images, while the proportion of buildings was somewhat less strongly correlated. Human participants selected the generated image most similar to the real image with an average accuracy of 80%.

'The ability to visualize scenes from sounds is uniquely human and reflects a deep sensory connection with our environment,' Zhuang and his colleagues said. 'Our findings demonstrate that, using advanced AI techniques supported by large language models, it is possible for machines to achieve something closer to human perception.'

A computer analysis showed that the generated images closely matched the real ones in their proportions of sky, plants, and buildings. They often reflected the architectural style and the distances between objects, and accurately captured the lighting conditions, such as whether a recording was made on a sunny day, on an overcast day, or at night. 'Lighting conditions may be inferred from certain sounds, such as traffic noise or the chirping of nocturnal insects,' Zhuang and his colleagues wrote.

Zhuang et al. said, 'This research suggests that AI may be able to understand human subjective experiences. If people close their eyes and listen to the sounds around them, they can associate the sound of a distant car or the gentle rustling of leaves with the scenery of a city or a forest. If AI can share these sensations, it may be possible to use it in urban design to create comfortable and beautiful spaces.'
