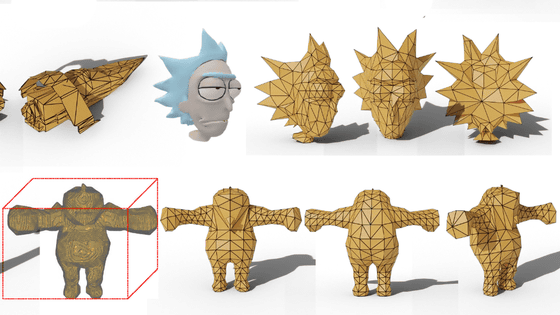

NVIDIA unveils a method to generate a 3DCG virtual world from real images using AI, significantly reducing the cost of building a 3D environment

NVIDIA has succeeded in developing the world's first AI capable of 'constructing 3D CG virtual environments from real-world footage.' AI-generated 3D CG virtual environments can be used for self-driving car training, games, and VR, significantly reducing the time and cost required to build 3D environments compared to conventional methods.

NVIDIA Invents AI Interactive Graphics - NVIDIA Developer News Center

The video below shows the kind of 3D environment NVIDIA's AI can create from real-world footage.

Research at NVIDIA: The First Interactive AI Rendered Virtual World - YouTube

'This is the world's first AI-rendered interactive virtual world.'

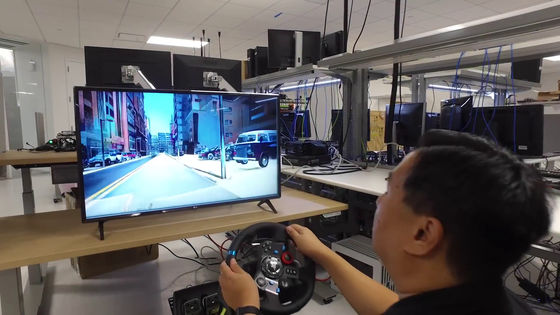

After these words, a person appeared playing a racing game.

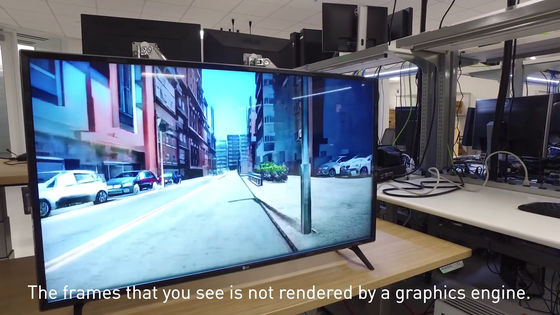

The images on this screen are not generated by a graphics engine; they are 3D CG that the AI created by converting real-world footage.

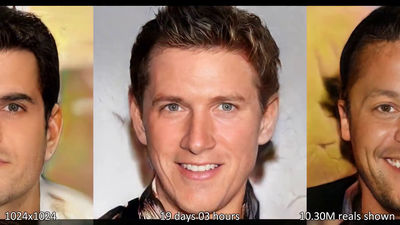

Ting-Chun Wang of NVIDIA developed the AI using a conditional generative neural network, which is trained on real-world footage and then used to render new 3D environments.

'What if we could train an AI model that could generate new worlds based on real-world movies?'

NVIDIA has developed an AI that turns this idea into reality, creating a 3D virtual world from video of the real world.

'This is the first attempt to use deep networks to combine machine learning and computer graphics to generate images,' says Ming-Yu Liu, a researcher at NVIDIA who worked with Wang on AI development.

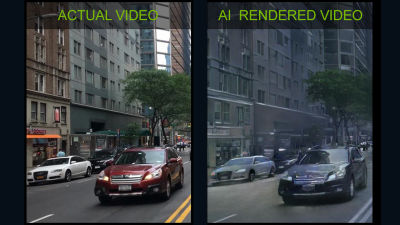

'We use real-world city footage to train neural networks to render urban environments.'

To train the AI, the researchers used footage of cars driving around urban areas.

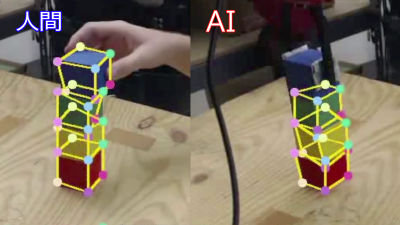

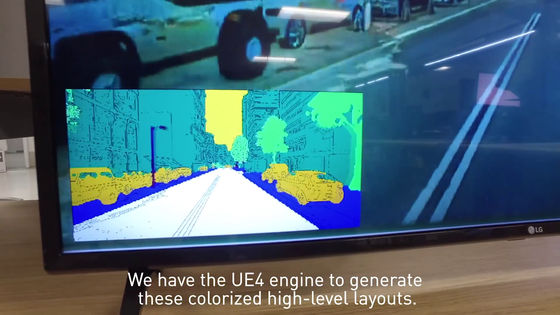

In addition, they use a separate segmentation network to extract high-level semantics from the video sequences.

In practice, the video is color-coded using Unreal Engine 4: objects in the scene are grouped by type, and each type is given its own color. For example, in the video below, roads are white, cars are yellow, and buildings are blue-green, showing that the boundaries between objects are correctly recognized.

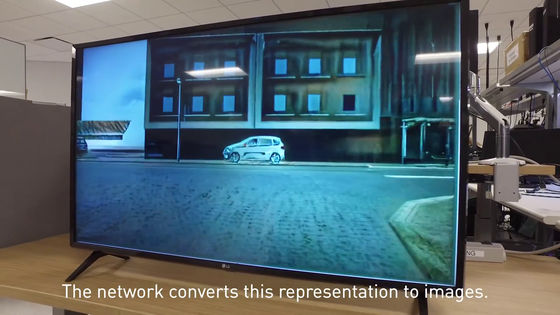
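
The color-coding step described above can be illustrated with a minimal Python sketch. It is not NVIDIA's code: the class IDs, the `PALETTE` table, and the `colorize` function are hypothetical names chosen for illustration, and only the three colors (white roads, yellow cars, blue-green buildings) come from the description above. It assumes a segmentation step has already assigned one class ID to every pixel.

```python
# Hypothetical class IDs; the article does not say which IDs the researchers used.
ROAD, CAR, BUILDING = 0, 1, 2

# Colors follow the article's description:
# white roads, yellow cars, blue-green buildings.
PALETTE = {
    ROAD: (255, 255, 255),
    CAR: (255, 255, 0),
    BUILDING: (0, 128, 128),
}

# Fallback color for any class ID missing from the palette (an assumption).
UNKNOWN = (0, 0, 0)


def colorize(label_map, palette=PALETTE):
    """Turn a 2D grid of class IDs into a 2D grid of RGB tuples."""
    return [[palette.get(label, UNKNOWN) for label in row] for row in label_map]


# A tiny 3x4 "frame": buildings on top, a car on the road below.
labels = [
    [BUILDING, BUILDING, BUILDING, BUILDING],
    [ROAD, CAR, CAR, ROAD],
    [ROAD, ROAD, ROAD, ROAD],
]
colored = colorize(labels)
```

Each pixel of `colored` carries only the color of its object type, so the boundary between, say, the car and the road is simply the place where yellow pixels meet white ones.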

The network then converts this representation into an image. This is the general process by which the AI converts real-world footage into virtual images.

'This research builds on NVIDIA AI research presented at

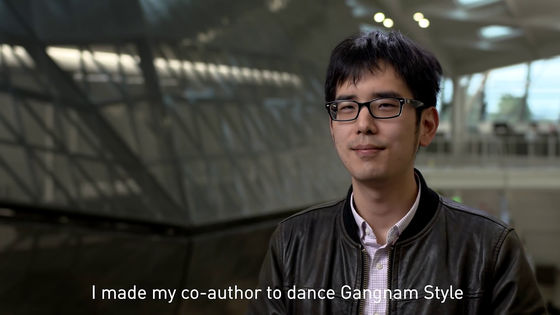

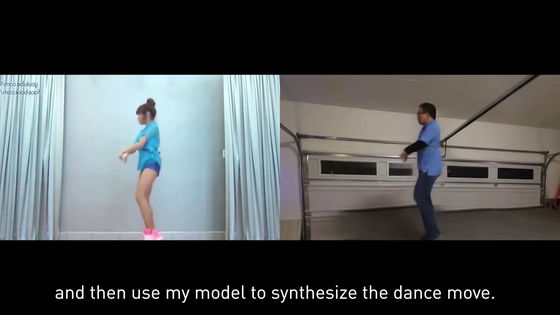

And what else can this AI do besides converting real-world footage into digital images? 'I can even get my co-author to dance

All you need is a Gangnam Style movie.

From this, the AI produced a video in which Liu dances Gangnam Style with ease.

'This is a video made by a machine. It's not me,' Liu says shyly, and the video ends.
