'MarioVGG' is an AI model that learns from Super Mario gameplay footage and automatically generates gameplay videos from text


Virtuals Protocol, an AI development startup, has announced MarioVGG, an AI model that generates gameplay footage of 'Super Mario Bros.' from text input. MarioVGG is trained on more than 737,000 frames of 'Super Mario Bros.' gameplay video.

MarioVGG

https://virtual-protocol.github.io/mario-videogamegen/

New AI model “learns” how to simulate Super Mario Bros. from video footage | Ars Technica

https://arstechnica.com/ai/2024/09/new-ai-model-learns-how-to-simulate-super-mario-bros-from-video-footage/

The Virtuals Protocol research team trained the model on a publicly available Super Mario Bros. gameplay dataset. The dataset covers a total of 280 stages and comprises more than 737,000 image frames paired with the corresponding input data. However, the data for the game's first stage, 'World 1-1,' was excluded from training so that it could be used for evaluation.

In the data preprocessing stage, the data was split into chunks of 35 frames each so that the model could learn the immediate outcome of a variety of inputs. For simplicity, the team focused on two inputs: 'run right' and 'run right and jump.'
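The chunking step described above can be sketched roughly as follows. This is a minimal illustration, not the research team's actual pipeline: the function name `split_into_chunks`, the representation of inputs as sets of pressed buttons, and the rule that a chunk counts as 'run right and jump' when the `"A"` button appears anywhere in it are all assumptions made for the example.

```python
from typing import List, Sequence, Set, Tuple

CHUNK_LEN = 35  # frames per chunk, as reported in the article


def split_into_chunks(
    frames: Sequence,
    buttons: Sequence[Set[str]],
    chunk_len: int = CHUNK_LEN,
) -> List[Tuple[list, str]]:
    """Split a recording into fixed-length chunks, each with one text label.

    `frames` holds one entry per video frame and `buttons` holds the set of
    buttons pressed on that frame (a hypothetical format). Trailing frames
    that do not fill a whole chunk are dropped.
    """
    if len(frames) != len(buttons):
        raise ValueError("frames and buttons must have the same length")

    chunks = []
    for start in range(0, len(frames) - chunk_len + 1, chunk_len):
        end = start + chunk_len
        # Assumed labeling rule: any jump press inside the chunk makes it
        # a 'run right and jump' example; otherwise it is 'run right'.
        jumped = any("A" in pressed for pressed in buttons[start:end])
        label = "run right and jump" if jumped else "run right"
        chunks.append((list(frames[start:end]), label))
    return chunks
```

With an 80-frame recording, for example, this yields two 35-frame chunks and discards the last 10 frames.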

Training the model took about 48 hours on a single NVIDIA RTX 4090 GPU. After training, MarioVGG was able to generate new frames from a single still starting frame and a text input, using a standard convolution and denoising process.

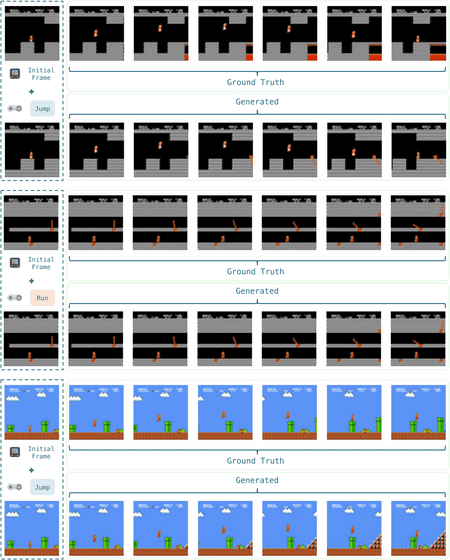

However, while the frames in the play data were 256x240 pixels, the generated video is only 64x48 pixels, and its playback speed is about five times faster. As a result, the video looks quite rough compared to actual gameplay footage. The following GIF animation is part of a video actually generated by MarioVGG.

According to the research team, at the time of writing, MarioVGG takes 6 seconds to generate 6 frames of video. Since a generation speed of 'one second per frame' is far from real-time video generation, the research team said that 'in the long term, this technology may significantly change the game development process, but there is a long way to go before it can completely replace existing game engines.'
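To put the reported speed in perspective, the arithmetic can be sketched as below. The 6 frames and 6 seconds come from the article; the 60 frames-per-second target is an assumption based on the approximate output rate of the original NES hardware, and the function names are hypothetical.

```python
NES_FPS = 60.0  # assumption: approximate frame rate of the original hardware


def generation_rate(frames: int, seconds: float) -> float:
    """Frames generated per second of wall-clock time."""
    return frames / seconds


def realtime_gap(generated_fps: float, target_fps: float = NES_FPS) -> float:
    """How many times faster generation must get to reach the target rate."""
    return target_fps / generated_fps


rate = generation_rate(frames=6, seconds=6.0)  # figures reported in the article
gap = realtime_gap(rate)
print(f"{rate:.1f} frame(s) per second, about {gap:.0f}x short of real time")
```

Under that assumption, generation would need to be on the order of 60 times faster to keep pace with the game itself.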

The research team also noted that the most noteworthy aspect of this study is that MarioVGG was able to learn the game's physics and interaction rules without explicit programming.

For example, MarioVGG was able to replicate the behavior of gravity when Mario falls off a cliff. It also learned basic collision detection, stopping Mario's progress when he runs into an obstacle. This suggests that the AI can extract physical rules from video data and apply them to new situations.

Another interesting point, the research team said, is that MarioVGG also 'generates' the environment. Elements such as terrain and obstacles that MarioVGG generates automatically are consistent with the look of the original game. In other words, MarioVGG does not simply imitate the player's movements; it can be considered to have succeeded in creating the game world itself, which the research team describes as a new form of procedural content generation using AI. Future challenges include training on more diverse data, optimizing the model, and implementing more advanced control functions.
