How to manage your 'crawl budget' to get more pages into Google search results faster

Google continuously crawls websites across the Internet to build its search index, but it does not crawl every page of every site. Instead, it sets an upper limit on the number of pages it crawls for each website. This limit is called the 'crawl budget,' and Google's official documentation summarizes 'things to check to make the most of your limited crawl budget.'

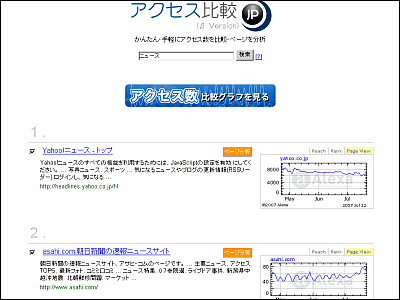

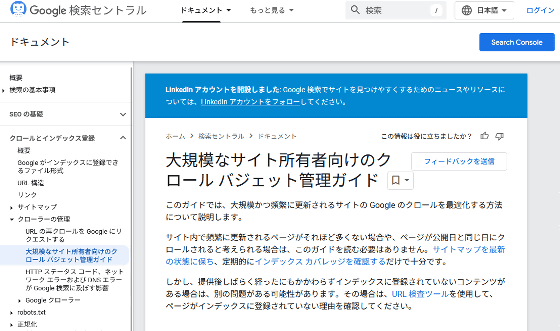

Crawl Budget Management for Large Sites | Google Search Central | Documentation | Google for Developers

https://developers.google.com/search/docs/crawling-indexing/large-site-managing-crawl-budget?hl=ja

Google's crawler (Googlebot) raises a site's crawl rate limit while the website keeps responding quickly, and lowers it when responses slow down or errors are returned. Googlebot also takes the website's 'update frequency,' 'page quality,' and 'relevance' into account when determining the crawl budget.

If the same content exists at multiple URLs on a website, crawl budget is wasted on the duplicates. For this reason, you need to explicitly indicate which version is the canonical one. The following page explains in detail how to specify the canonical page.

How to specify the canonical page using rel='canonical' etc. | Google Search Central | Documentation | Google for Developers

https://developers.google.com/search/docs/crawling-indexing/consolidate-duplicate-urls?hl=ja
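As a rough illustration of the canonical-page idea, the sketch below reads the `rel="canonical"` link out of a page's HTML so you can confirm that duplicate URLs all point to the same canonical URL. The function name `find_canonical`, the sample page, and the `example.com` URLs are hypothetical and only serve the example. This is a minimal check built on Python's standard library, not a reproduction of how Google evaluates canonical signals.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # Only the first canonical link is kept; later ones are ignored.
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical duplicate page (a sorted view of a product listing)
# that declares the unsorted listing as its canonical URL.
page = """<html><head>
<link rel="canonical" href="https://example.com/shoes">
</head><body>Shoes, sorted by price</body></html>"""

print(find_canonical(page))
```

Running a check like this over each group of duplicate URLs makes it easy to spot pages that are missing a canonical declaration or that point somewhere unexpected.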

If you have pages that you don't want registered in the search index, you can reduce crawl budget consumption by editing 'robots.txt' to block crawling in advance. Also, since Googlebot stops crawling a page once it returns a 404 or 410 status code, you can save crawl budget by making sure removed pages return the correct status code. Furthermore, since Googlebot reads the sitemap periodically, it is important to include all the content you want crawled in the sitemap.
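Before deploying a robots.txt change, it can help to test which URLs the rules would actually block. The sketch below does this with Python's standard `urllib.robotparser`. The rules, the `blocked_urls` helper, and the `example.com` URLs are hypothetical. Note that this parser does simple prefix matching and does not implement every pattern Googlebot supports (such as `*` wildcards inside paths), so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    # parse() accepts the file content as an iterable of lines,
    # so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/products/1",
    "https://example.com/search/?q=shoes",
    "https://example.com/cart/",
]

for url in blocked_urls(ROBOTS_TXT, urls):
    print("blocked:", url)
```

With these sample rules, the product page stays crawlable while the search and cart URLs are reported as blocked.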

The crawl budget is also affected by how long crawling takes, so you can increase the number of pages crawled by speeding up your site's response time. It is also effective to use the Crawl Stats report to check for crawling issues, and to use the URL Inspection tool to check whether each page is crawlable. See the following page for solutions to problems with your pages.

Crawl Statistics Report - Search Console Help

https://support.google.com/webmasters/answer/9679690?hl=ja

in Web Service, Posted by log1o_hf