How does using a CDN affect Google's search indexing?

A CDN (content delivery network) is a technology that speeds up delivery by caching content on edge servers located close to users. Many sites are adopting CDNs to make their pages load faster, and Google has published an article explaining how CDNs affect its search crawlers.

Crawling December: CDNs and crawling | Google Search Central Blog | Google for Developers

https://developers.google.com/search/blog/2024/12/crawling-december-cdns

No matter how much you invest in servers or how fast they respond, requests from users who are physically far away still take time. Google summarizes the benefits of using a CDN in the following three points, including that it 'reduces physical distance and enables fast response.'

1: Fast response and reduced load through caching

CDNs cache resources such as images, videos, HTML, CSS, and JavaScript on edge servers. Users connect to the edge server closest to them, which shortens communication time. Because the edge servers deliver the cached resources, requests are not concentrated on the origin server, which reduces its load.

2: Protection from mass access

Because all traffic to the site passes through the CDN service, the CDN can detect and block signs of abusive access. This mitigates attacks such as DDoS, in which a huge amount of traffic is sent to a website or service to take its server down.

3: Reliability

For some sites, even if the origin server goes down, the CDN can still deliver the content using a cached copy.

Given these benefits, Google states that 'CDNs are a valuable ally' and recommends their introduction, especially for large sites or those expecting large amounts of traffic.

Google normally limits the number of pages it crawls from a domain at one time to avoid putting too much strain on the site's servers, but it crawls sites that use a CDN at a higher rate. It infers whether a site is behind a CDN from the IP address that serves the site.

Because this limit on pages crawled at one time applies per domain, serving static resources such as images from a separate domain such as 'cdn.example.com' makes it possible for more content to be crawled than usual. Google's crawler can handle both setups, where every domain goes through the CDN and where only some do, but when using multiple domains Google recommends delivering all of them through the CDN service to avoid hurting page loading speed.

On the other hand, one problem that can occur when using a CDN is that the CDN may mistake the crawler's large number of requests for unauthorized access and block the crawler. The impact on crawl results varies depending on how the block is implemented.

◆Hard Block

Google classifies responding to the crawler with an error as a 'hard block.'

1: 503 or 429 status code response

Status code 503 (Service Unavailable) indicates that the server is temporarily unable to handle requests, and 429 (Too Many Requests) indicates that the client has sent too many requests. Both tell Google's crawler that the block is temporary, which may give you some time to address the CDN issue before the search index is affected. Google recommends responding with these status codes.
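As a rough illustration of the temporary responses described above, the following Python sketch builds a 503 or 429 response. The `Response` class, the `temporary_block` function, and the 120-second default are hypothetical names and values chosen for this example. The `Retry-After` header is a standard HTTP header, but the source does not say how Google's crawler treats it, so its use here is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Response:
    """Hypothetical, framework-independent HTTP response."""
    status: int
    headers: Dict[str, str] = field(default_factory=dict)
    body: str = ""


def temporary_block(overloaded: bool, retry_after_seconds: int = 120) -> Response:
    """Build a response that signals a temporary block.

    503 is used when the server itself cannot handle requests,
    429 when the client is sending too many requests.
    """
    status = 503 if overloaded else 429
    return Response(
        status=status,
        # Assumption: hint to the client when it may retry.
        headers={"Retry-After": str(retry_after_seconds)},
        body="Temporarily unavailable",
    )
```

In an actual deployment this decision would normally be made in the CDN's or web server's own configuration rather than in application code.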

2: Timeout

If the CDN times out the connection, the URL will be removed from Google's search index. Timeouts may also affect how Google judges the site's load, resulting in a decrease in crawl frequency.

3: Error page returned with a 200 status code

If an error page is returned with status code 200, which means 'OK' (the request succeeded), the crawler analyzes the content of the page. If the crawler recognizes that 'this page is an error page,' it simply removes the URL from the search index, but if it treats the page as a normal page, every URL that displays the same message is treated as a 'duplicate URL.' Recrawling duplicate URLs takes a long time, so recovery may be prolonged.
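One way to notice this situation on your own site is to check whether a response with status 200 actually contains an error message. The sketch below is only a simple heuristic written for this article: the function name and the marker phrases are hypothetical, and it does not reproduce how Google's crawler analyzes pages.

```python
# Hypothetical phrases that often appear on error pages.
ERROR_MARKERS = (
    "service unavailable",
    "access denied",
    "something went wrong",
)


def is_error_served_as_ok(status: int, body: str) -> bool:
    """Return True if a 200 response looks like an error page."""
    if status != 200:
        # A non-200 status already tells the client something is wrong.
        return False
    text = body.lower()
    return any(marker in text for marker in ERROR_MARKERS)
```

If this check returns True for a page fetched through the CDN, the response should probably be changed to use an error status code such as 503 instead of 200.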

◆Soft Block

Some CDN services present a CAPTCHA or another challenge to verify that the visitor is human before allowing access to the site. Google classifies such a block as a 'soft block.' Google's crawlers do not pretend to be human, so they cannot get past the challenge to crawl the content. However, if the challenge is served with a 503 status code to indicate that the block is temporary, the content can avoid being removed from the search index.
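The idea of returning a 503 status code instead of presenting a challenge to crawlers can be sketched as follows. This is a minimal illustration under stated assumptions: the function name is hypothetical, `is_automated_client` stands for whatever detection the CDN performs (the source does not describe it), and serving the human challenge page with status 403 is a choice made for this example.

```python
from typing import Tuple


def respond_to_challenged_request(is_automated_client: bool) -> Tuple[int, str]:
    """Choose a response for a request that would otherwise be challenged.

    Returns a (status code, body) pair.
    """
    if is_automated_client:
        # Crawlers cannot solve a challenge, so signal a temporary
        # block instead of sending the challenge page.
        return 503, "Temporarily unavailable"
    # Assumption: human visitors get the challenge page with status 403.
    return 403, "<html><body>Please complete the challenge.</body></html>"
```

For example, `respond_to_challenged_request(True)` returns a 503 response, while a request from a browser would receive the challenge page.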

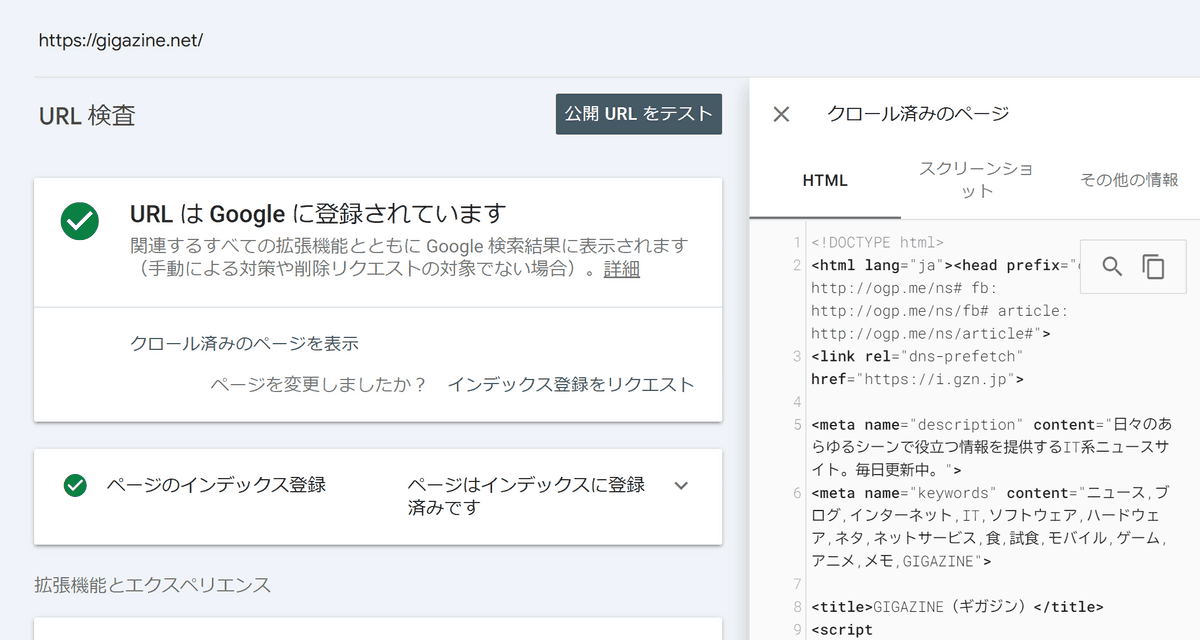

Google provides the 'URL Inspection Tool' for checking whether the crawler is viewing the site correctly. If you have trouble with crawling, it is a good idea to use the URL Inspection Tool to check that the crawler is seeing the correct content.

If the tool shows a blank page, an error page, a bot challenge page, or anything else unexpected, Google recommends contacting your CDN provider.

in Web Service, Posted by log1d_ts