GitHub's performance engineering team explains how increased CPU usage can degrade performance

Even a high-performance CPU is wasted if it sits idle for long periods, but loading it too heavily degrades performance, which is also inefficient. GitHub has published the results of an experiment it conducted to optimize system efficiency while maintaining performance.

Breaking down CPU speed: How utilization impacts performance - The GitHub Blog

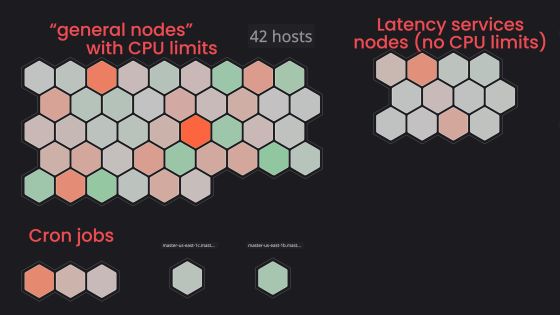

GitHub first built an environment called 'Large Unicorn Collider (LUC)', designed to show how the system behaves under various loads while collecting data from a workload as close to production as possible.

GitHub then sent moderate production traffic to a LUC Kubernetes pod hosted on a dedicated machine to establish a baseline. It then applied load with a tool called 'stress', which runs random processing tasks to intentionally occupy a certain number of CPU cores, and collected data.
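As a rough illustration of occupying a fixed number of cores, the sketch below builds a `stress` command line for a target share of a machine's cores. The function name `stress_command`, the 32-core machine, and the 50% target are hypothetical; the article does not say which options GitHub actually used. Only the `--cpu` and `--timeout` options of `stress` are assumed here.

```python
import math
import shlex


def stress_command(total_cores: int, target_fraction: float, seconds: int) -> list[str]:
    """Build a `stress` invocation that keeps about target_fraction of the cores busy.

    Hypothetical helper for illustration; not GitHub's actual tooling.
    """
    if not 0.0 < target_fraction <= 1.0:
        raise ValueError("target_fraction must be in (0, 1]")
    # Round down, but always occupy at least one core.
    workers = max(1, math.floor(total_cores * target_fraction))
    # `--cpu N` starts N CPU-bound workers; `--timeout` stops them after the given time.
    return ["stress", "--cpu", str(workers), "--timeout", f"{seconds}s"]


# Example: occupy half of an assumed 32-core machine for five minutes.
cmd = stress_command(total_cores=32, target_fraction=0.5, seconds=300)
print(shlex.join(cmd))
```

The command is only printed, not executed, so the sketch can be tried on a machine without `stress` installed.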

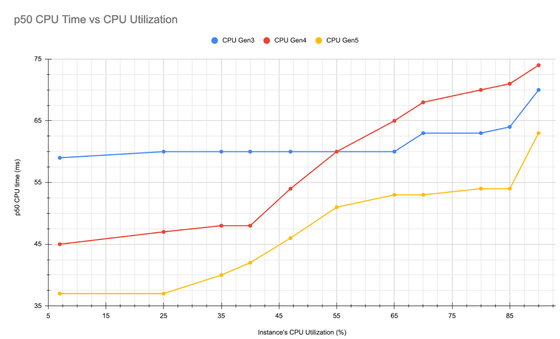

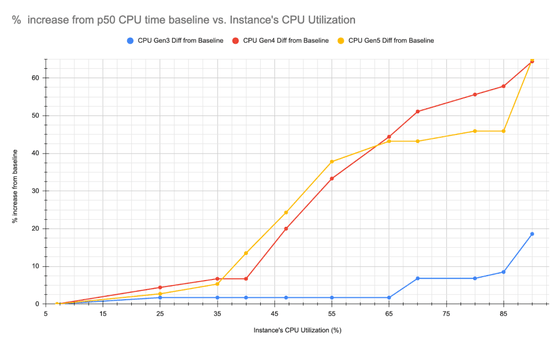

As expected, CPU time increased for all instance types as CPU utilization increased. The graph plots CPU time per request against CPU utilization: the vertical axis is the p50 (50th percentile, i.e. median) CPU time, and the horizontal axis is CPU utilization.

The graph below focuses on the rate of increase in latency, with the vertical axis changed to the percentage increase in p50 CPU time over the baseline.

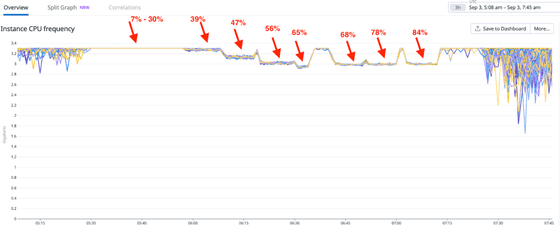
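The "rate of increase over the baseline" can be computed as in the minimal sketch below. The sample values are made up for illustration and are not GitHub's measurements, and the function name `p50_increase_pct` is an assumption.

```python
from statistics import median


def p50_increase_pct(baseline_ms: list[float], loaded_ms: list[float]) -> float:
    """Percentage increase of the median (p50) CPU time relative to the baseline."""
    base = median(baseline_ms)
    return 100.0 * (median(loaded_ms) - base) / base


# Illustrative per-request CPU times in milliseconds (not real data).
baseline = [10.0, 11.0, 9.0, 10.0, 12.0]  # median is 10.0
loaded = [13.0, 12.0, 14.0, 13.0, 15.0]   # median is 13.0

print(p50_increase_pct(baseline, loaded))  # prints 30.0
```

Using the median rather than the mean keeps a handful of very slow requests from dominating the comparison.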

One interesting thing GitHub points out is the change in CPU frequency as CPU usage increases, which is due to Intel's

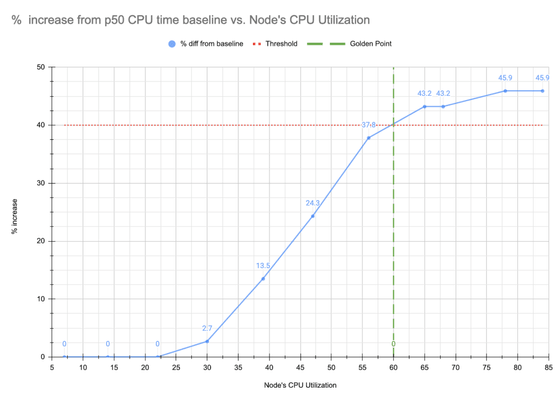

While underutilized nodes waste data center resources, power, and space, overutilizing nodes is also inefficient.

According to GitHub, there are two things to consider when optimizing the relationship between CPU usage and CPU time, i.e. latency:

- CPU usage is high enough to avoid under-utilization of resources.

- The degradation of CPU time does not exceed the acceptable limit.

As an example that meets these criteria, GitHub describes aiming to keep CPU time degradation below 40%, which corresponds to 61% CPU utilization on one particular instance type.
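One way to turn such a degradation limit into a utilization threshold is to interpolate linearly between measured points, as sketched below. This is an assumption about how a threshold could be derived, not GitHub's stated method; the function name `max_utilization` and the data points are hypothetical and do not reproduce the 61% figure.

```python
from typing import Optional


def max_utilization(points: list[tuple[float, float]], limit_pct: float) -> Optional[float]:
    """Return the highest CPU utilization (%) whose degradation stays within limit_pct.

    `points` holds (utilization %, degradation %) pairs sorted by utilization,
    with degradation assumed to be non-decreasing.
    """
    prev_u, prev_d = points[0]
    if prev_d > limit_pct:
        return None  # even the lowest measured load exceeds the limit
    for u, d in points[1:]:
        if d > limit_pct:
            # Interpolate between the last point within the limit and the first beyond it.
            return prev_u + (limit_pct - prev_d) * (u - prev_u) / (d - prev_d)
        prev_u, prev_d = u, d
    return prev_u  # the limit is never exceeded in the measured range


# Illustrative measurements only (not GitHub's data).
measurements = [(30, 0), (50, 20), (70, 60), (90, 120)]
print(max_utilization(measurements, limit_pct=40))  # prints 60.0
```

A different instance type would have its own curve, so the same limit would generally map to a different utilization threshold.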

Summarizing the test results, GitHub said, 'We observed that across different CPU families, performance degrades as CPU utilization increases. However, by identifying optimal CPU utilization thresholds, we were able to improve the balance between performance and efficiency, ensuring that our infrastructure is both cost-effective and performant. This insight will help inform future resource provisioning strategies and help maximize the impact of hardware investments.'

in Hardware, Web Service, Posted by log1l_ks