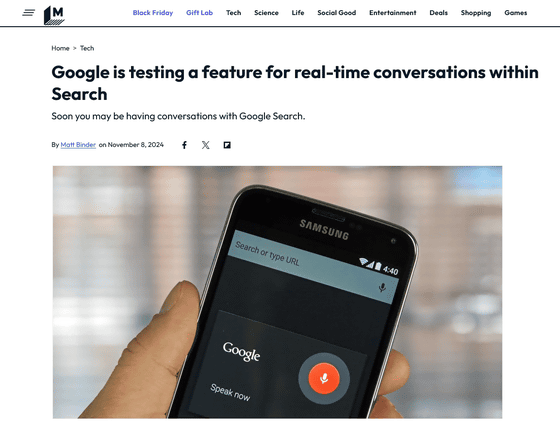

Google is testing a feature that lets users 'search by talking to AI' in their browser. Will search engines using generative AI become commonplace?

Google announced a feature called '

Google is testing a real-time voice-to-search feature | Mashable

https://mashable.com/article/google-voice-to-search-testing

Google's testing a conversational search feature that updates results in real time | Android Central

https://www.androidcentral.com/apps-software/googles-testing-a-conversational-search-feature-that-updates-results-in-real-time

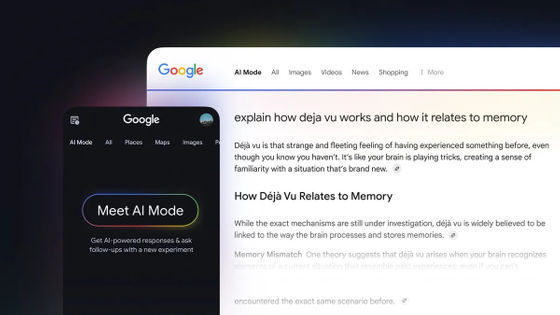

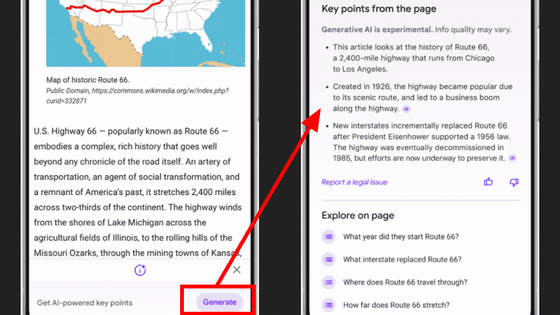

In addition to Google's SGE (Search Generative Experience), which shows an AI overview of search results, and the real-time AI search engine Perplexity, Meta is reportedly developing its own AI-powered search engine, and in November 2024, OpenAI announced the AI search service 'ChatGPT search.' With AI advancing rapidly, generative AI is increasingly being built into search engines. Rather than displaying a list of pages that contain the search query, as traditional web search does, AI-powered search engines use AI to summarize an answer to the query from web search results. OpenAI claims that 'To get quality information on the web, you need to search repeatedly. However, ChatGPT search gives you better answers.'

OpenAI announces AI search service 'ChatGPT search', appealing that there is no need to Google repeatedly - GIGAZINE

In the latest move to combine search engines with generative AI, Google is reportedly testing a 'real-time conversational voice search feature.' Google already offers 'voice search' in places such as the Chrome browser, but conventional voice search simply lets you speak a search query instead of typing it. The new format is different: it lets you search 'conversationally,' digging deeper into the search results as you talk.

A video posted by Android developer AssembleDebug shows how conversational search actually works.

The demo of new conversational search in Google app. It continuously listens to your voice where you can ask followup questions while performing a search. #Android #Google pic.twitter.com/DgXnMx5DU4

— AssembleDebug (Shiv) (@AssembleDebug) November 4, 2024

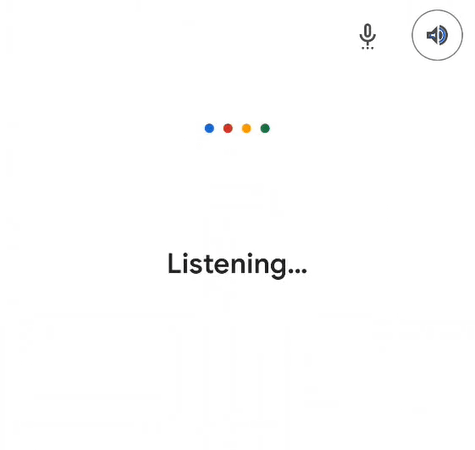

When conversational search starts, the screen displays 'Listening...' and switches to a mode that accepts voice input.

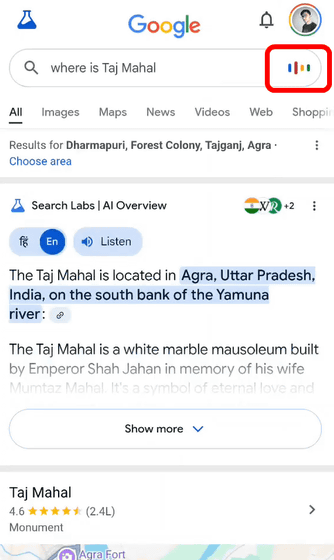

AssembleDebug said, 'Where is Taj Mahal?' While the query was being entered, a 'busy' icon appeared, as shown in the image below.

The search results then show an SGE overview and a map explaining where the Taj Mahal is located. Up to this point, it works the same as conventional voice search, but notably, the area to the right of the search query still shows 'Voice input in progress.'

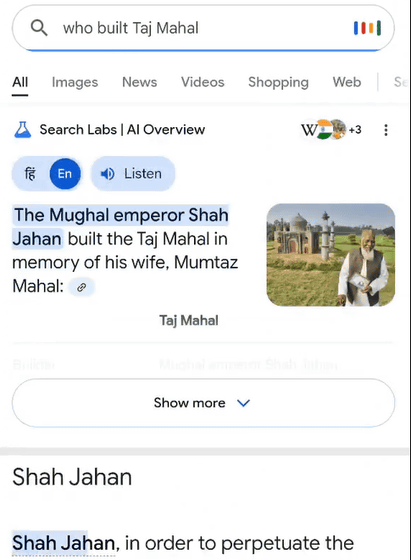

Next, AssembleDebug asked, 'Who built Taj Mahal?' and information about who built the Taj Mahal was displayed.

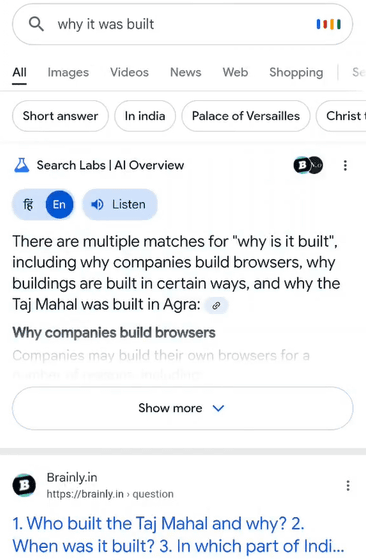

Additionally, when the user asked 'why it was built,' referring to the Taj Mahal only with the pronoun 'it,' results about the Taj Mahal continued to appear. This suggests that the user was not simply running a series of separate voice searches but was refining the results through one continuous conversation.

With Google's existing voice search, voice input ends as soon as you enter a query and the search completes, so to ask another question you have to tap the microphone icon again. The update Google is testing instead keeps listening after a search starts, letting the AI continue to dig into the results based on the context of the conversation.

Conversational voice search is a test feature that is not publicly available in the Google app, and it is unclear whether it will ever be released. However, with AI companies such as OpenAI and Meta pushing to integrate AI with search, including speech-to-text input, the move by Google, the largest search engine, is drawing attention.

in Web Service, Posted by log1e_dh