Why do researchers need to run AI on their local PCs?

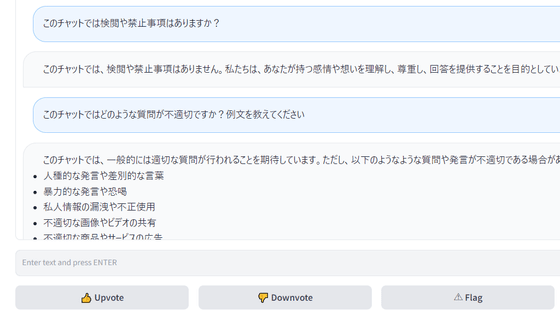

Because advanced generative AI needs powerful hardware to run, most users have no choice but to access it over the Internet. At the same time, there have been efforts to run advanced AI locally, and some capable models can in fact run on a personal computer. The scientific journal Nature has reported on researchers who describe the benefits of running AI locally.

Forget ChatGPT: why researchers now run small AIs on their laptops

Chris Thorpe, who runs histo.fyi, a database of immune-system protein structures, runs AI models on his laptop. Thorpe had previously tried the paid version of ChatGPT but found it expensive and its output unsuitable, so he now runs Meta's Llama models, which have 8 to 70 billion parameters, locally.

Another advantage, according to Thorpe, is that 'local models do not change.' Companies can change the behavior of commercially deployed AI models at their own discretion, forcing users to rewrite their prompts and templates, whereas a local model changes only when its user decides to update it. 'Science is about reproducibility. Not being able to control the reproducibility of what you're generating is a cause for concern,' Thorpe said of the value of running AI locally.
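Because a downloaded model file stays the same unless you replace it, one simple way to document exactly which model produced a result is to record a checksum of that file alongside your outputs. A minimal Python sketch, assuming the model is stored as a single file on disk (as it is with llamafile); the function name `file_sha256` is hypothetical and not part of any tool mentioned in this article:

```python
from __future__ import annotations

import hashlib
import sys
from pathlib import Path


def file_sha256(path: str | Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file.

    The file is read in chunks (1 MiB by default) so that multi-gigabyte
    model files never have to fit in memory at once.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until read() returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    # Usage: python model_hash.py llava-v1.5-7b-q4.llamafile
    for name in sys.argv[1:]:
        print(f"{file_sha256(name)}  {name}")
```

Storing the digest with each analysis lets a collaborator confirm they are running byte-for-byte the same model file.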

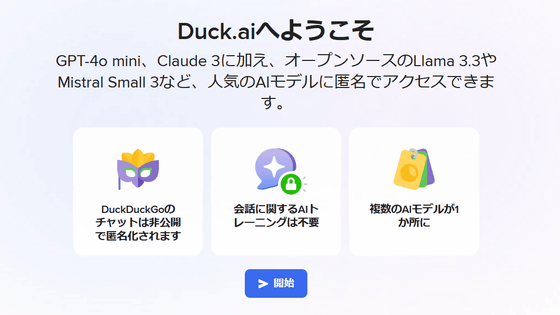

At the time of writing, some AI models have hundreds of billions of parameters, and the more parameters a model has, the more computing power it needs to run. Some companies offer these large models, while others release smaller versions that can run on consumer PCs, or 'open-weight' models whose trained parameters (the 'weights') are published for anyone to download.
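The link between parameter count and hardware can be made concrete with back-of-the-envelope arithmetic: the weights alone occupy roughly (number of parameters) × (bits per parameter) / 8 bytes. The sketch below is an approximation that ignores the extra memory used while generating text; the 16-bit and 4-bit precisions are illustrative assumptions (4-bit "quantized" files are a common way to shrink models for consumer PCs), and `weight_memory_gb` is a hypothetical helper:

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory taken by model weights alone, in gigabytes (10**9 bytes).

    This ignores activations, the attention cache, and runtime overhead,
    so actual memory use while running a model is higher.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Compare an 8-billion- and a 70-billion-parameter model at two precisions.
    for params in (8e9, 70e9):
        for bits in (16, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{params / 1e9:.0f}B parameters at {bits}-bit: ~{gb:.0f} GB")
```

For the 8- and 70-billion-parameter Llama sizes, this gives about 16 GB and 140 GB at 16-bit precision, versus about 4 GB and 35 GB at 4-bit, which is why reduced and quantized versions are what make laptop use practical.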

For example, Microsoft released the small language models '

Kal'tsit, a biomedical scientist in New Hampshire, is one of the developers building such local applications. Kal'tsit fine-tuned Alibaba's 'Qwen' model to create a model that proofreads manuscripts and prototypes code, and released it publicly. 'Besides letting you fine-tune a local model for its intended use, another advantage is privacy. Sending personally identifiable data to a commercial service could violate data protection regulations and would be troublesome if an audit were conducted,' Kal'tsit said.

Doctors who use AI to help make diagnoses from medical reports, or to transcribe and summarize patient interviews, also say they choose local models for privacy reasons.

'Whether you choose the online or local approach, local models should perform well enough for most use cases out of the box,' said Stephen Hood, head of open source AI at Mozilla, the tech company that develops Firefox. 'It's amazing how quickly we've made progress in the last year. It's up to you to decide what kind of application you want. Don't be afraid to get your hands dirty. You might be pleasantly surprised.'

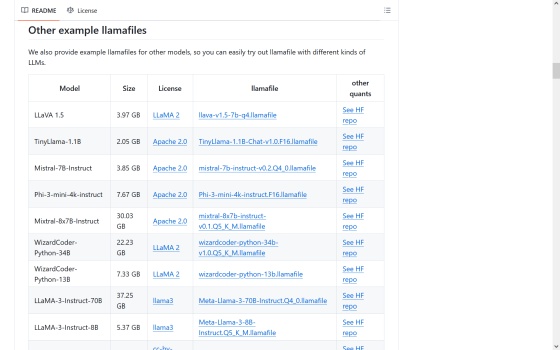

One way to run an AI model locally is to use a tool called 'llamafile' provided by Mozilla developers.

GitHub - Mozilla-Ocho/llamafile: Distribute and run LLMs with a single file.

https://github.com/Mozilla-Ocho/llamafile?tab=readme-ov-file

Open the URL above and click the link labeled 'Download llava-v1.5-7b-q4.llamafile.'

On Windows, change the extension of the downloaded file to '.exe'.
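The renaming step applies to Windows; on macOS and Linux, the llamafile README instead has you give the file execute permission (`chmod +x`). A minimal Python sketch covering both cases, assuming the file has already been downloaded; `prepare_llamafile` is a hypothetical helper, not part of llamafile itself:

```python
import stat
import sys
from pathlib import Path


def prepare_llamafile(path: str) -> Path:
    """Make a downloaded llamafile launchable on the current OS.

    Windows: append '.exe' to the file name so it can be run like a program.
    macOS/Linux: add the executable permission bits (the same as `chmod +x`).
    Returns the path of the launchable file.
    """
    p = Path(path)
    if sys.platform.startswith("win"):
        if p.suffix.lower() != ".exe":
            target = p.with_name(p.name + ".exe")
            p.rename(target)
            p = target
    else:
        # Keep the existing permission bits and add execute for user, group, others.
        current = stat.S_IMODE(p.stat().st_mode)
        p.chmod(current | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return p


if __name__ == "__main__":
    print(prepare_llamafile("llava-v1.5-7b-q4.llamafile"))
```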

Clicking on the file will launch a browser and display the chat interface.
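Besides the browser chat page, the llamafile README describes an OpenAI-compatible HTTP API served by the same running process, which lets you call the model from your own scripts. A minimal Python sketch using only the standard library, assuming the server is listening at its default address `http://localhost:8080` and exposes the `/v1/chat/completions` endpoint (check the README for your version); the helper names are hypothetical and the model name is a placeholder:

```python
import json
import urllib.request

# Assumed default address of the server that llamafile starts; adjust if needed.
BASE_URL = "http://localhost:8080"


def build_chat_request(prompt: str, temperature: float = 0.0) -> dict:
    """Build an OpenAI-style chat-completions payload for a single user message."""
    return {
        # Placeholder model name (assumption); the local server answers
        # with whichever model it was started with.
        "model": "LLaMA_CPP",
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_model(prompt: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send one prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask_local_model("Explain in two sentences what a protein structure database stores."))
```

Setting `temperature` to 0 makes answers more repeatable, in the spirit of Thorpe's point about reproducibility, though it does not by itself guarantee identical output on every run.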

These steps run 'LLaVA 1.5' locally. The repository page also links to llamafiles for other models.


in Software, Posted by log1p_kr