OpenAI and Anthropic agree to give a US government agency access to unreleased AI models

The US Artificial Intelligence Safety Institute, part of the National Institute of Standards and Technology (NIST), has announced that it signed agreements with OpenAI and Anthropic covering pre-release testing of their AI models.

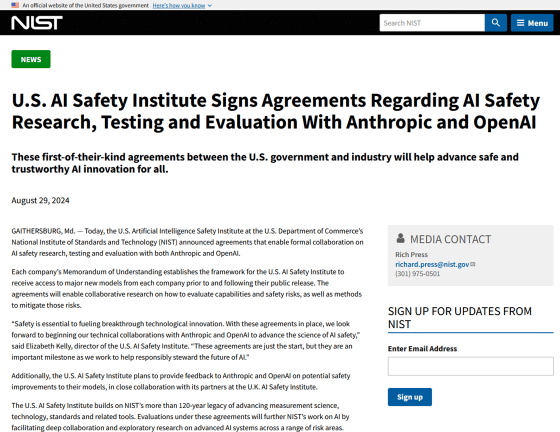

US AI Safety Institute Signs Agreements Regarding AI Safety Research, Testing and Evaluation With Anthropic and OpenAI | NIST

https://www.nist.gov/news-events/news/2024/08/us-ai-safety-institute-signs-agreements-regarding-ai-safety-research

The agreements establish a framework under which the US AI Safety Institute receives access to new AI models from OpenAI and Anthropic before they are released. With that access, the institute will be able to evaluate the models' capabilities and safety risks. It also intends to work with both companies on research into ways of mitigating those risks.

In addition, the US AI Safety Institute has announced that it will share its safety evaluations of OpenAI's and Anthropic's new AI models with the UK AI Safety Institute.

OpenAI CEO Sam Altman welcomed the deal in a post, saying the company is happy to have reached an agreement with the US AI Safety Institute for pre-release testing of its future models. He added that, for many reasons, OpenAI thinks it is important that this testing happens at the national level, and that the US needs to continue to lead.

we are happy to have reached an agreement with the US AI Safety Institute for pre-release testing of our future models.

for many reasons, we think it's important that this happens at the national level. US needs to continue to lead!

Sam Altman (@sama), August 29, 2024

Separately, OpenAI has developed an AI model called 'Strawberry' that can solve math problems, and it has been reported that the company demonstrated the model to US national security officials.

OpenAI demonstrates groundbreaking achievement called 'Strawberry' to US officials, aiming to surpass GPT-4 by creating training data for flagship LLM codenamed 'Orion' - GIGAZINE

in Note, Posted by log1o_hf