Elon Musk has ordered NVIDIA chips reserved for Tesla to be diverted to xAI

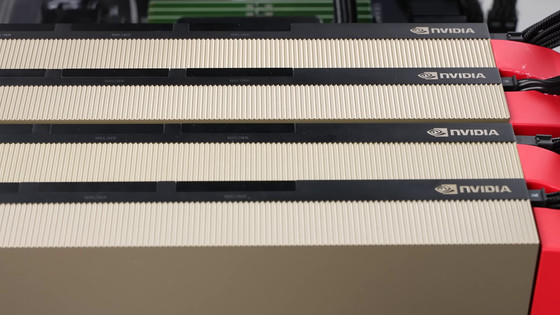

It has emerged that Elon Musk ordered 12,000 GPUs reserved for Tesla to be shipped to xAI instead, and Musk has been criticized because this contradicts what he had told Tesla investors.

Elon Musk told Nvidia to ship AI chips reserved for Tesla to X, xAI

https://www.cnbc.com/2024/06/04/elon-musk-told-nvidia-to-ship-ai-chips-reserved-for-tesla-to-x-xai.html

According to internal documents seen by CNBC, Musk asked NVIDIA to prioritize chip deliveries to xAI, the AI company he owns, and 12,000 high-performance H100 chips originally reserved for Tesla were diverted to xAI.

At Tesla's first-quarter earnings call in April 2024, Musk said, 'By the end of 2024, we will increase the number of H100s from 35,000 to 85,000,' and later wrote in a post on X that 'Tesla will spend $10 billion (about 1.55 trillion yen) on training and inference AI in 2024.' Because these statements had raised investors' expectations, some investors felt 'betrayed' by the news. CNBC reports that Tesla shares fell 1% immediately after the report.

In response to the report, Musk said, 'Tesla simply had no place to put the chips into operation. The expansion of the Gigafactory in Texas is almost complete, and it will house the H100 chips for Tesla.'

Tesla had no place to send the Nvidia chips to turn them on, so they would have just sat in a warehouse.

— Elon Musk (@elonmusk) June 4, 2024

The southern extension of Giga Texas is almost complete. This will house 50k H100s for FSD training.

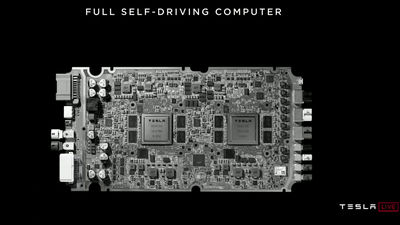

Tesla needs high-performance GPUs to develop its self-driving and other AI systems, while xAI needs them to develop its chatbot, Grok.

According to Musk, about half of Tesla's roughly $10 billion in AI spending will go to in-house hardware, namely Tesla-designed AI inference computers and sensors plus the 'Dojo' supercomputer, while spending on NVIDIA hardware will be $3 billion (about 470 billion yen) to $4 billion (about 620 billion yen). On the possibility of handling computing in-house without relying on NVIDIA, Musk said, 'It's a long road, but I think there is a chance of success.'

Training compute for Tesla is relatively small compared to inference compute, as the latter scales linearly with size of fleet.

— Elon Musk (@elonmusk) June 4, 2024

Perhaps the best way to think about it is in terms of power consumption.

When the Tesla fleet reaches 100M vehicles, peak power consumption of AI…


Posted by log1p_kr