Anthropic publishes reflections on existing policies to safely provide 'AI that poses catastrophic risks to humanity'

Anthropic, an AI company that develops chat AI such as 'Claude,' has announced the points of reflection on its AI safety policy. A new policy will be built based on the points of reflection announced this time.

Reflections on our Responsible Scaling Policy \ Anthropic

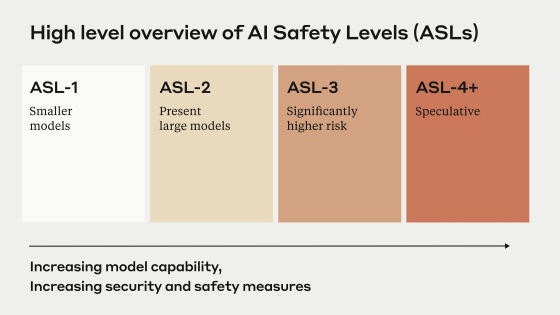

Anthropic is an AI company whose CEO is Dario Amodei, who was involved in the development of GPT-2 and GPT-3 at OpenAI. Anthropic classifies the threats to safety that come with improved AI performance using an index called 'AI Safety Level (ASL),' with 'AI that does not pose a significant risk' as ASL-1, 'AI that shows signs of being misused for the development of biological weapons, etc.' as ASL-2, 'AI that poses a devastating risk compared to search engines and textbooks' as ASL-3, and 'AI that has performance far removed from current AI and the level of danger cannot be defined' as ASL-4.

At the time of writing, the mainstream chat AI, including Anthropic's chat AI 'Claude,' falls under ASL-2. In order to safely develop AI that falls under ASL-2, Anthropic is working on 'identifying and disclosing' redline features that pose significant risks' and 'developing and implementing new standards for safely handling redline features.' Of these, the work of 'developing and implementing new standards for safely handling redline features' is called the 'ASL-3 standard,' but the existing policy does not allow the ASL-3 standard to be fully promoted.

Anthropic has recently published 'reflections on existing policies,' which are important in advancing the work to improve the policy. Reflections include 'new generation models have new features that differ from one model to another, making it difficult to predict the characteristics of future models,' 'even in relatively established fields such as chemistry, biology, radioactive materials, and nuclear, there are differences of opinion among experts on which risks have the greatest impact,' and 'what risks does AI's capabilities pose.' In addition, 'rapid response cycles with experts in each field helped us recognize problems with tests and tasks,' and 'attempts to quantify threat models helped us determine priority features and priority scenarios.' These include operations that should be continued in the future.

Anthropic will be improving its policies based on lessons learned from its existing policies and will be publishing a new policy soon.

Anthropic CEO Amodei predicts that ASL-4, an AI with capabilities far removed from current AI and with an undefined level of danger, will appear sometime between 2025 and 2028.

'By 2025-2026, AI will emerge that will cost more than 1 trillion yen to train AI models and pose a threat to humanity,' predicts CEO of AI company Anthropic - GIGAZINE

In addition, attitudes toward AI safety vary widely from company to company. For example, it has become clear that OpenAI has disbanded its research team on AI safety.

OpenAI's 'Super Alignment' team, which was researching the control and safety of superintelligence, has been disbanded, with a former executive saying 'flashy products are being prioritized over safety' - GIGAZINE

Related Posts:

in Software, Posted by log1o_hf