Google announces 'Project Astra,' a GPT-4o-like AI agent that understands video and audio and answers questions

At its developer event Google I/O, held on May 14, 2024 local time, Google announced 'Project Astra' (Astra), an AI agent that can understand video and audio and answer questions in real time. Google has also released a demo video in which a user asks Astra various questions about things captured by the cameras of a smartphone and smart glasses.

Google Gemini updates: Flash 1.5, Gemma 2 and Project Astra

Google strikes back at OpenAI with “Project Astra” AI agent prototype | Ars Technica

https://arstechnica.com/information-technology/2024/05/google-strikes-back-at-openai-with-project-astra-ai-agent-prototype/

On May 13 local time, OpenAI announced 'GPT-4o', a new AI model that can process voice and visual information at high speed and respond in real time, which caused a big stir. At Google I/O the following day, Google announced Astra as part of its effort to develop a universal AI agent that is useful in everyday life.

'As part of our mission to build AI responsibly for the benefit of humanity, Google DeepMind has always wanted to develop universal AI agents that can help in everyday life,' said Demis Hassabis, head of Google's AI division. 'Today, we're sharing our progress on the future of AI assistants with Project Astra (advanced seeing and talking responsive agent).'

In the demo video below, a user asks Astra various questions while filming the surroundings with the cameras of a smartphone and smart glasses.

Project Astra: Our vision for the future of AI assistants - YouTube

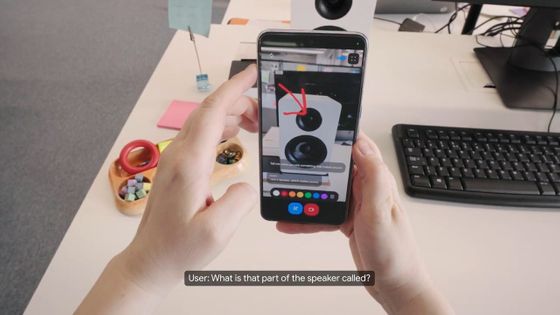

With the smartphone's camera and microphone turned on, the user asks Astra to 'let us know if you see anything that makes a sound.'

When the speaker came into the camera's field of view, Astra quickly responded, 'I can see a speaker making sound.'

The user then draws an arrow on the camera's image and asks, 'What is this part of the speaker called?', referring to the top part of the speaker from which the sound comes.

Astra responded, 'That's

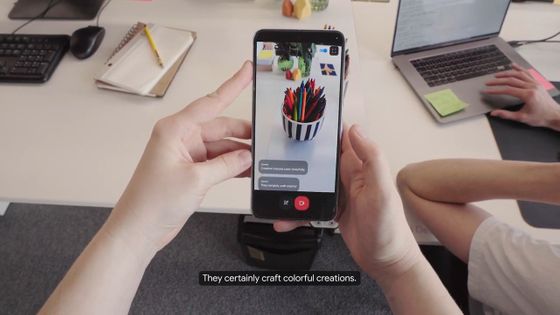

Next, the user pointed the camera at crayons in a pen holder and asked Astra to 'make a creative alliteration about these.' Astra responded with an alliterative verse: 'Creative crayons color cheerfully. They certainly craft colorful creations.'

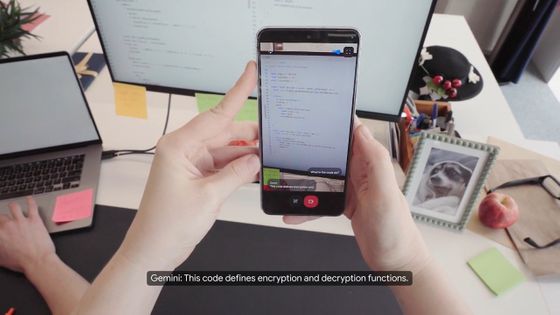

When asked what the code on the PC screen did, Astra replied that it defined encryption and decryption functions.

When shown the view outside the window and asked, 'Where do you think I am?', Astra replied, 'This appears to be

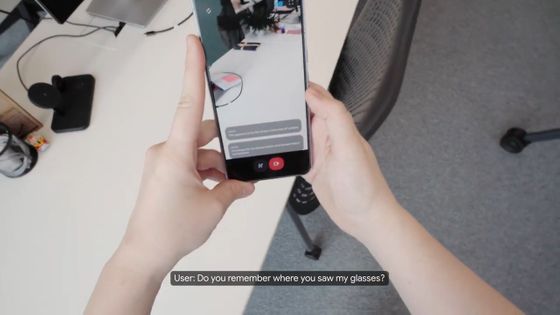

The user also asks an unexpected question: 'Do you remember where you saw my glasses?'

It seemed pretty difficult, but Astra replied, 'Yes, I saw it. Your glasses were on the desk, near the red apple.' Indeed, the smart glasses were placed near the red apple.

Next, the user puts on the smart glasses and talks to Astra while viewing the surroundings through the glasses' camera.

When the user wrote on a system diagram on a whiteboard and asked, 'What can I add here to make this system faster?' Astra responded, 'Adding a cache between the server and the database could improve speed.'
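To illustrate the kind of change Astra suggested, here is a minimal sketch of a read-through cache placed between a server and a database. The demo does not show any implementation, so the class names `SlowDatabase` and `ReadThroughCache`, the `ttl_seconds` parameter, and the in-memory dictionary are all assumptions made for this example.

```python
import time


class SlowDatabase:
    """Hypothetical stand-in for a real database; counts how often it is queried."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.query_count = 0

    def get(self, key):
        self.query_count += 1
        return self._rows.get(key)


class ReadThroughCache:
    """Hypothetical cache layer: serve from memory, fall back to the database."""

    def __init__(self, database, ttl_seconds=60.0, clock=time.monotonic):
        self._db = database
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expiry time)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: the database is not touched
        value = self._db.get(key)  # cache miss or expired entry
        self._entries[key] = (value, now + self._ttl)
        return value


db = SlowDatabase({"user:1": "Alice"})
cache = ReadThroughCache(db)
cache.get("user:1")  # first read goes to the database
cache.get("user:1")  # second read is answered from the cache
print(db.query_count)
```

Repeated reads of the same key reach the database only once within the time-to-live, which is where the speedup would come from. The trade-off is that a cached value can be stale until its entry expires.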

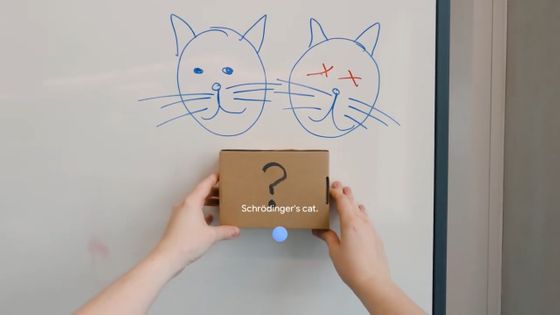

In addition, a box with a question mark was placed between two cat faces drawn on a whiteboard and Astra was asked, 'What does this remind you of?' It was a bit of a riddle, but Astra answered, '

When shown a stuffed tiger and a dog and asked for a band name for the pair, Astra replied, 'Golden Stripes.' Astra can respond in real time, as if you were having a conversation with a human.

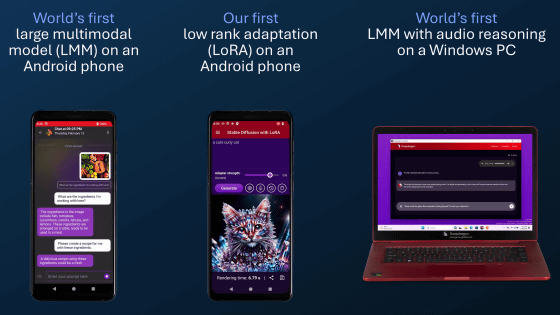

Project Astra speeds up processing by continuously encoding video frames, combining the video and audio inputs into a timeline of events, and caching this information for efficient recall. It also uses state-of-the-art voice models to improve the quality of its voices and expand their range of intonation.
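Google has not published how this pipeline is implemented. As a rough illustration of the idea only, the sketch below merges video and audio observations into one time-ordered list and keeps a small lookup table so that the latest mention of an object can be recalled without rescanning the history. The names `Event` and `EventTimeline`, the text descriptions standing in for encoded frames, and the keyword index are all assumptions made for this example.

```python
from bisect import insort
from dataclasses import dataclass, field


@dataclass(order=True)
class Event:
    """One observation; only the timestamp is used for ordering."""
    timestamp: float
    modality: str = field(compare=False)     # e.g. "video" or "audio"
    description: str = field(compare=False)  # stand-in for an encoded frame


class EventTimeline:
    """Hypothetical timeline that merges modalities and caches recent mentions."""

    def __init__(self):
        self._events = []     # kept sorted by timestamp
        self._last_seen = {}  # keyword -> most recent Event mentioning it

    def add(self, event):
        insort(self._events, event)  # events may arrive out of order
        for word in event.description.lower().split():
            previous = self._last_seen.get(word)
            if previous is None or previous.timestamp <= event.timestamp:
                self._last_seen[word] = event

    def recall(self, keyword):
        """Return the latest event mentioning the keyword, or None."""
        return self._last_seen.get(keyword.lower())

    def events(self):
        return list(self._events)


timeline = EventTimeline()
timeline.add(Event(2.0, "audio", "user asks about the speaker"))
timeline.add(Event(1.0, "video", "glasses on desk near red apple"))
print(timeline.recall("glasses").description)
```

In this toy version, a question like the one about the glasses in the demo becomes a single dictionary lookup. A real system would presumably work on learned embeddings, not on keywords.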

'With technology like this, it's easy to envision a future where people have dedicated AI assistants by their side, whether through their smartphones or smart glasses, and some of these features will be coming to Google services later this year, such as the Gemini app and web experience,' Hassabis said.

Continued

A demo video of Google's AI assistant 'Project Astra,' which understands video and audio and answers questions in real time, being used on smartphones and smart glasses is now available - GIGAZINE


in AI, Video, Software, Web Service, Posted by log1h_ik