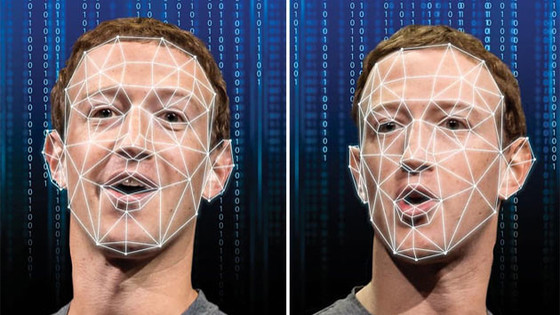

Meta's Oversight Board to investigate AI deepfake pornography of celebrities posted on Instagram and Facebook

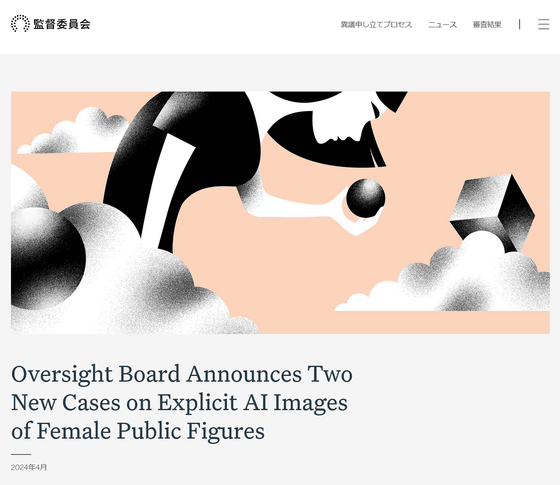

On April 16, 2024, Meta's Oversight Board, a third-party organization that reviews content moderation decisions on Meta's platforms, announced that it had launched an investigation into two cases of deepfake pornography on Facebook and Instagram, and that it would 'assess whether Meta's policies and enforcement practices are effective in addressing deepfake pornography.'

Oversight Board Announces Two New Cases on Explicit AI Images of Female Public Figures | Oversight Board

https://www.oversightboard.com/news/361856206851167-oversight-board-announces-two-new-cases-on-explicit-ai-images-of-female-public-figures/

Explicit AI Images Case Bundle | Transparency Center

https://transparency.fb.com/ja-jp/oversight/oversight-board-cases/explicit-ai-images/

Meta's oversight board to probe subjective policy on AI sex image removals | Ars Technica

https://arstechnica.com/tech-policy/2024/04/metas-oversight-board-to-probe-subjective-policy-on-ai-sex-image-removals/

According to the Oversight Board, both cases under investigation involve AI-generated deepfake images.

In the first case, an AI-generated nude image of an Indian female celebrity was posted to an Instagram account that shares only AI-generated images of Indian women. A user who saw the post reported it, but Meta did not review the report within 48 hours, so it was automatically closed.

The user who reported the post appealed, but the appeal was also automatically closed, and the image stayed up until the user appealed to the Oversight Board. After the Board selected the case, Meta acknowledged that its original decision was incorrect and removed the post for violating its Community Standards on bullying and harassment.

In the second case, an AI-generated nude image of an American female celebrity was posted to a Facebook group for AI creations. The caption of the post included the celebrity's name. However, another user had already posted the same image, and Meta's safety team had reviewed it and removed it for violating the bullying and harassment policy. At that point the image was also added to 'an automated enforcement system that automatically detects and removes images identified by human reviewers as violating policies.'

The user who posted the AI-generated image in the Facebook group appealed the automatic removal of the post, but that appeal was automatically closed, so the user appealed to the Oversight Board.

The Oversight Board is seeking public comment until April 30, 2024 on the following: 'The nature and severity of the harm caused by deepfake pornography, particularly its impact on female public figures;' 'The extent to which the problem of deepfake pornography is becoming more severe in the United States and India;' 'The most effective policies and enforcement processes to address deepfake pornography;' and 'The problems with relying on automated systems that close reports without review, as happened in the first case.'

Following the Oversight Board's announcement, Meta said it would abide by the Board's decision.

in Web Service, Posted by log1i_yk