Google unveils technology that gives AI the ability to process infinite amounts of text

Researchers at Google have published a paper on 'Infini-attention,' a method that enables large language models (LLMs) to process inputs of infinite length.

[2404.07143] Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Google's new technique gives LLMs infinite context | VentureBeat

Google Demonstrates Method to Scale Language Model to Infinitely Long Inputs

https://analyticsindiamag.com/google-demonstrates-method-to-scale-language-model-to-infinitely-long-inputs/

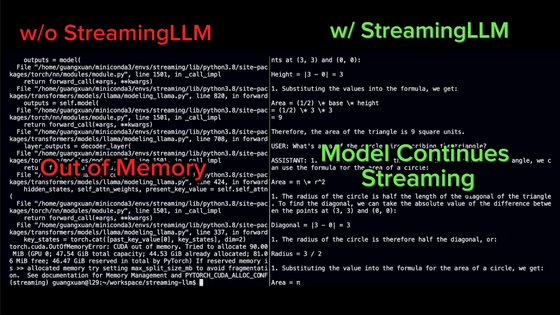

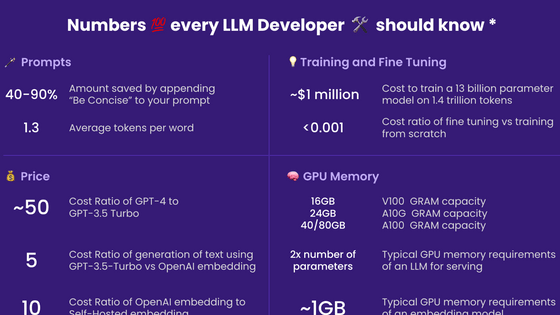

AI models have a 'context window' that determines how many tokens they can handle at once. In ChatGPT, for example, once a conversation grows beyond the context window, performance degrades and the tokens at the beginning of the conversation are discarded.
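To make the truncation behavior concrete, here is a minimal sketch that keeps only the most recent tokens that fit in a fixed window. The whitespace `tokenize` function and the window size are hypothetical stand-ins; real LLMs use subword tokenizers, and products like ChatGPT may manage overflow differently.

```python
def tokenize(text):
    # Hypothetical stand-in: real LLMs use subword tokenizers, not whitespace splitting.
    return text.split()

def fit_to_context(tokens, context_window):
    # Keep only the most recent `context_window` tokens; the oldest ones are dropped first.
    if context_window <= 0:
        return []
    return tokens[-context_window:]

history = tokenize("hello there how are you today")
visible = fit_to_context(history, 4)
print(visible)  # ['how', 'are', 'you', 'today']
```

Everything before the window ("hello there" here) is simply invisible to the model, which is the limitation Infini-attention targets.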

For this reason, expanding the number of tokens a model can process is a major focus for developers seeking to improve their models and gain a competitive edge.

'Infini-attention,' developed by Google researchers, incorporates a compressive memory into the attention mechanism of Transformer-based LLMs, combining masked local attention and long-term linear attention in a single Transformer block.
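The mechanism can be illustrated with a toy, single-head sketch in plain Python. It assumes the linear memory variant as we understand it from the paper: memory is read with σ(Q)M / (σ(Q)z) where σ is ELU + 1, updated with M += σ(K)ᵀV and z += Σσ(k), and blended with ordinary causal softmax attention through a gate sigmoid(β). The class and function names, the epsilon, and β = 0 are our assumptions; projections, multiple heads, and the paper's delta-rule variant are omitted.

```python
import math

def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1 keeps features positive for linear attention.
    return x + 1.0 if x > 0 else math.exp(x)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def local_causal_attention(Q, K, V):
    # Standard softmax dot-product attention, restricted to the current segment (causal).
    d = len(Q[0])
    out = []
    for i, q in enumerate(Q):
        scores = [dot(q, K[j]) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * V[j][c] for j, w in enumerate(weights)) / total
                    for c in range(len(V[0]))])
    return out

class CompressiveMemory:
    # Fixed-size memory: a d_k x d_v matrix M plus a d_k normalization vector z.
    def __init__(self, d_k, d_v):
        self.M = [[0.0] * d_v for _ in range(d_k)]
        self.z = [0.0] * d_k

    def retrieve(self, Q, eps=1e-6):
        # A_mem = sigma(Q) M / (sigma(Q) z); eps avoids 0/0 while memory is empty.
        out = []
        for q in Q:
            sq = [elu_plus_one(x) for x in q]
            denom = dot(sq, self.z) + eps
            out.append([sum(sq[r] * self.M[r][c] for r in range(len(sq))) / denom
                        for c in range(len(self.M[0]))])
        return out

    def update(self, K, V):
        # Linear update: M += sigma(K)^T V and z += sum of sigma(k) over the segment.
        for k, v in zip(K, V):
            sk = [elu_plus_one(x) for x in k]
            for r, s in enumerate(sk):
                self.z[r] += s
                for c, val in enumerate(v):
                    self.M[r][c] += s * val

def infini_attention_segment(Q, K, V, memory, beta=0.0):
    a_mem = memory.retrieve(Q)                 # read memory built from earlier segments
    a_local = local_causal_attention(Q, K, V)  # attend within this segment
    memory.update(K, V)                        # then fold this segment into memory
    g = 1.0 / (1.0 + math.exp(-beta))          # gate between long-term and local attention
    return [[g * m + (1.0 - g) * l for m, l in zip(rm, rl)]
            for rm, rl in zip(a_mem, a_local)]

memory = CompressiveMemory(d_k=2, d_v=2)
segments = [
    ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]),
    ([[0.5, 0.5]], [[0.5, 0.5]], [[5.0, 6.0]]),
]
for Q, K, V in segments:
    out = infini_attention_segment(Q, K, V, memory)
    print([[round(x, 3) for x in row] for row in out])
print(len(memory.M), len(memory.M[0]), len(memory.z))  # memory size does not grow
```

The point of the design is visible in the last line: however many segments are processed, the memory stays a d_k × d_v matrix plus a d_k vector, while local attention only ever looks at one segment at a time.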

The key point of this approach is that it works with bounded memory and computational resources. In experiments on long-context language modeling benchmarks, the model using Infini-attention outperformed the baseline models while achieving a 114x compression ratio in terms of memory size.
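Why a fixed-size memory saves so much can be seen with simple arithmetic. The sketch below compares, per attention head and per layer, the floats held by a standard key-value cache (which grows with every token) against a compressive memory of size d_k × d_v + d_k (which does not). The head dimension of 128 is a hypothetical choice, the local-attention cache for the current segment is ignored, and the resulting ratios are illustrative only; they are not the paper's 114x figure, which was measured against specific baseline models.

```python
def kv_cache_floats(seq_len, d_k, d_v):
    # A standard Transformer caches one key and one value vector per token.
    return seq_len * (d_k + d_v)

def compressive_memory_floats(d_k, d_v):
    # Compressive memory: a d_k x d_v matrix plus a d_k normalizer, independent of length.
    return d_k * d_v + d_k

d_k = d_v = 128  # hypothetical per-head dimensions
for n in (2_048, 32_768, 1_048_576):
    ratio = kv_cache_floats(n, d_k, d_v) / compressive_memory_floats(d_k, d_v)
    print(f"{n:>9} tokens: KV cache is {ratio:,.1f}x the compressive memory")
```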

A 1B-parameter model fine-tuned on passkey-retrieval instances of up to 5K tokens in length was able to solve the task at a sequence length of 1M tokens, and in principle the same approach could handle even more tokens while maintaining quality.
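A passkey-retrieval instance hides a short random number inside a long stretch of irrelevant text and asks the model to recall it at the end. The generator below is a hypothetical sketch of that setup: the filler sentences, prompt wording, key range, and scoring rule are our assumptions, not the paper's exact prompt. Scaling `num_filler_repeats` is what turns a short training instance into a much longer evaluation instance.

```python
import random

# Hypothetical filler text; any irrelevant, repetitive text serves the same purpose.
FILLER = "The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again."

def make_passkey_prompt(num_filler_repeats, seed=0):
    # Hide a random 5-digit key at a random position inside repeated filler text.
    rng = random.Random(seed)
    passkey = rng.randint(10000, 99999)
    insert_at = rng.randint(0, num_filler_repeats)
    chunks = [FILLER] * num_filler_repeats
    chunks.insert(insert_at, f"The pass key is {passkey}. Remember it. {passkey} is the pass key.")
    prompt = " ".join(chunks) + " What is the pass key? The pass key is"
    return prompt, passkey

def is_correct(model_answer, passkey):
    # Count the answer as correct if the completion begins with the hidden key.
    return model_answer.strip().startswith(str(passkey))

short_prompt, key = make_passkey_prompt(num_filler_repeats=50)
long_prompt, _ = make_passkey_prompt(num_filler_repeats=5_000)
print(len(short_prompt.split()), len(long_prompt.split()))  # word counts as a rough length proxy
```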


in Software, Posted by logc_nt