Meta announces the second generation of its self-developed AI chip 'MTIA', achieving three times the performance of the previous generation and already deployed in data centers

On April 10, 2024, Meta announced the second generation of its self-developed AI chip, the Meta Training and Inference Accelerator (MTIA), which is designed for AI workloads. It delivers significantly higher performance than the first generation and has already been deployed in Meta's data centers.

Our next generation Meta Training and Inference Accelerator

https://ai.meta.com/blog/next-generation-meta-training-inference-accelerator-AI-MTIA/

Introducing Our Next Generation Infrastructure for AI | Meta

https://about.fb.com/news/2024/04/introducing-our-next-generation-infrastructure-for-ai/

Meta's new AI chips run faster than before - The Verge

https://www.theverge.com/2024/4/10/24125924/meta-mtia-ai-chips-algorithm-training

Meta unveils its newest custom AI chip as it races to catch up | TechCrunch

https://techcrunch.com/2024/04/10/meta-unveils-its-newest-custom-ai-chip/

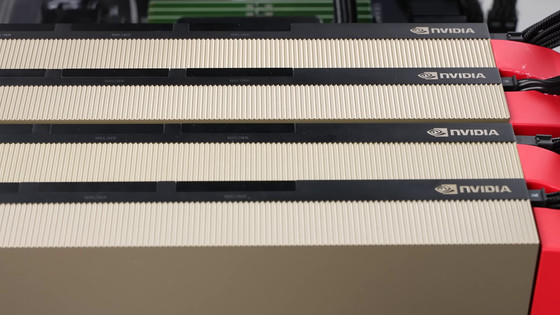

Meta uses AI in many of its apps and services, and CEO Mark Zuckerberg has declared that he aims to 'build artificial general intelligence and make it open source.' Meta is also building out hardware and software specialized for AI development, and aims to have a large-scale computing infrastructure that includes 350,000 NVIDIA H100 GPUs by the end of 2024. In addition, Meta announced its own AI chip, 'MTIA,' in 2023.

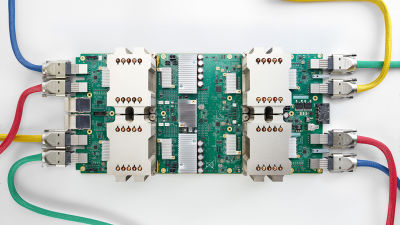

MTIA is designed to work optimally with the ranking and recommendation models behind ads on services such as Facebook and Instagram, making training more efficient and facilitating inference tasks. Meta said, 'MTIA is a long-term bet to provide the most efficient architecture for Meta's unique workloads. As AI workloads become increasingly important to our products and services, this efficiency will be at the core of our ability to deliver the best experience to users around the world.'

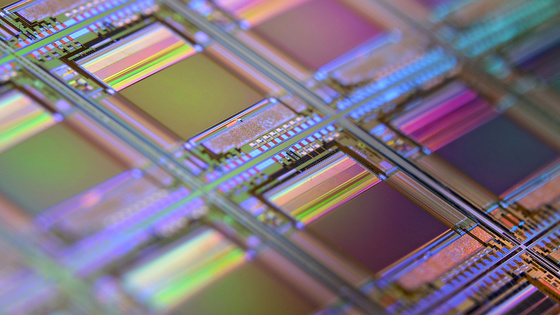

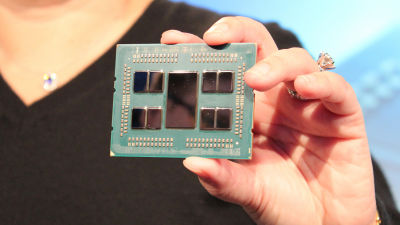

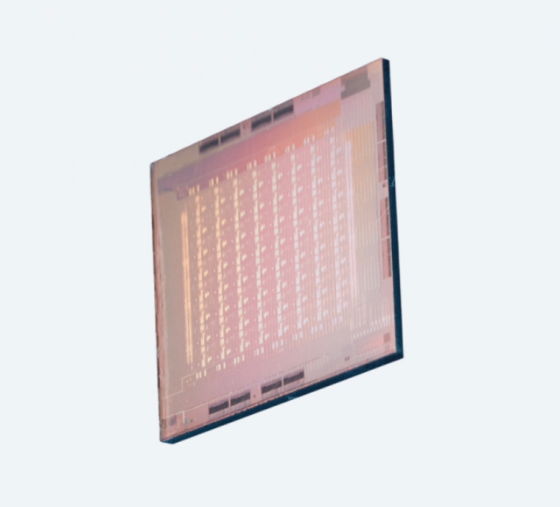

On April 10, 2024, Meta announced details of the second-generation MTIA. The chip, codenamed 'Artemis,' focuses on striking the right balance between compute, memory bandwidth, and memory capacity.


The first-generation MTIA is manufactured on a 7nm process, while the second-generation MTIA is manufactured on a 5nm process. The operating frequency rises from 800MHz in the first generation to 1.35GHz in the second, on-chip memory grows from 128MB to 256MB, and thermal design power (TDP) increases from 25W to 90W.
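To put the generational figures side by side, the short sketch below computes the second-generation to first-generation ratio for each quoted specification. The numbers come from the paragraph above; the dictionary layout and the function name `gen2_over_gen1` are illustrative assumptions, not anything published by Meta.

```python
# Figures as quoted in the article: (first generation, second generation).
# The structure and names here are hypothetical, chosen only for illustration.
SPECS = {
    "clock_mhz": (800, 1350),
    "on_chip_memory_mb": (128, 256),
    "tdp_w": (25, 90),
}


def gen2_over_gen1(specs):
    """Return the second-generation / first-generation ratio for each metric."""
    return {name: gen2 / gen1 for name, (gen1, gen2) in specs.items()}


ratios = gen2_over_gen1(SPECS)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

These ratios describe only the raw chip specifications listed here; they are not a measure of delivered performance or efficiency on real workloads.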

According to Meta's initial test results, the second-generation MTIA delivers three times the performance of the previous generation across the four models evaluated. Meta says the chip is already in production and being deployed in its data centers.

Meta said, 'MTIA has been deployed in our data centers and is now serving models in production. This program has enabled us to allocate and invest in more compute power for more intensive AI workloads, and we are already seeing positive results. MTIA has proven to be very complementary to commercial GPUs in providing the optimal combination of performance and efficiency for Meta's specific workloads.'


in Hardware, Posted by log1h_ik