Security company warns of possible virus infection through AI model execution

Security company JFrog has published the results of an investigation into models hosted on Hugging Face, an AI development platform used to distribute machine learning models.

Examining Malicious Hugging Face ML Models with Silent Backdoor

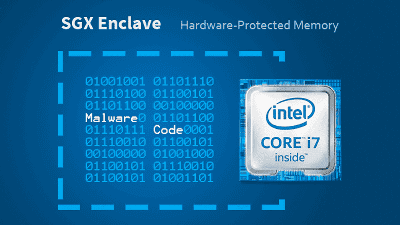

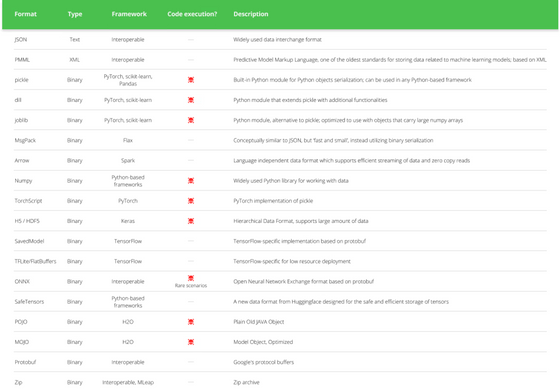

Like any technology, AI models pose security risks if not handled properly. Some file formats used to distribute AI models run code as part of loading the file, so an attacker who crafts such a file can execute arbitrary code on the machine of whoever loads it.
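Python's `pickle` format, which PyTorch checkpoints are built on, shows how this can happen. The sketch below is a harmless illustration, not code from JFrog's report. An object's `__reduce__` method tells pickle which callable to invoke when the data is loaded, so merely calling `pickle.loads` runs it. The function `payload` and the list `EXECUTED` are hypothetical stand-ins for attacker code.

```python
import pickle

# Records side effects so the demo stays harmless; a real attacker would
# call something dangerous (for example a shell command) instead.
EXECUTED = []


def payload(message):
    """Hypothetical stand-in for attacker code: it only records that it ran."""
    EXECUTED.append(message)
    return "looks like a normal model"


class MaliciousModel:
    # pickle calls __reduce__ when serializing; the returned (callable, args)
    # pair is stored in the file and invoked again at load time.
    def __reduce__(self):
        return (payload, ("code ran during pickle.loads",))


data = pickle.dumps(MaliciousModel())

# Loading the bytes is enough to run payload(); nothing is called explicitly.
obj = pickle.loads(data)
print(EXECUTED)  # ['code ran during pickle.loads']
print(obj)       # looks like a normal model
```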

According to a table JFrog compiled of common model file formats, formats such as 'pickle,' 'dill,' 'joblib,' 'NumPy,' 'TorchScript,' 'H5/HDF5,' 'ONNX,' 'POJO,' and 'MOJO' can allow code to be executed when a file is loaded.

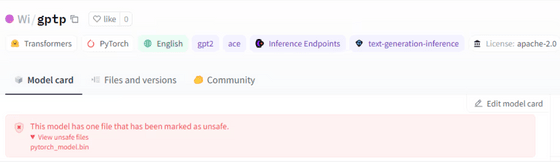

As a countermeasure against attackers, Hugging Face has developed 'SafeTensors,' a file format that stores only tensor data and does not execute code when loaded, and it also runs malware and pickle scans on files uploaded to the platform.

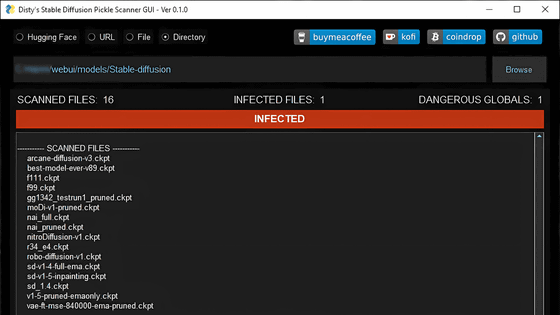

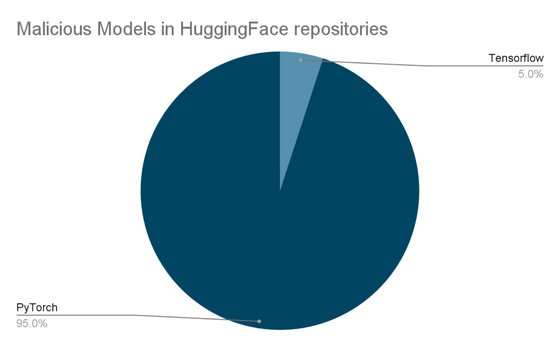

To detect new threats on Hugging Face quickly, JFrog's research team set up a system that scans newly uploaded models several times a day. The scans found malicious models in a total of 100 repositories: 95 contained PyTorch models and the remaining 5 contained TensorFlow models.
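A scanner does not have to load a pickle to inspect it. The minimal sketch below walks the opcode stream with the standard library's `pickletools.genops` and reports every `(module, name)` global the file would import, flagging any that are not on a hypothetical allowlist `SAFE_GLOBALS`. It is not JFrog's or Hugging Face's scanner. Resolving `STACK_GLOBAL` from the two most recently pushed strings is a simplification that a deliberately crafted file could evade.

```python
import collections
import pickle
import pickletools

# Hypothetical allowlist for illustration; a real scanner's list would differ.
SAFE_GLOBALS = {("collections", "OrderedDict")}

# Opcodes that push a string constant onto the unpickler's stack.
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE",
              "SHORT_BINSTRING", "BINSTRING", "STRING"}


def find_globals(data: bytes):
    """List the (module, name) globals a pickle would import, without loading it."""
    found = []
    strings = []    # every string pushed so far, in order (a rough stack model)
    memo = {}       # memo index -> string, for memoized strings only
    memo_next = 0   # index the next MEMOIZE opcode will assign
    top = None      # string on top of the stack, if the last push was a string
    for opcode, arg, _pos in pickletools.genops(data):
        op = opcode.name
        if op in STRING_OPS:
            top = arg
            strings.append(arg)
        elif op in ("GET", "BINGET", "LONG_BINGET"):
            # Repeated module names are fetched back from the memo.
            top = memo.get(arg)
            if top is not None:
                strings.append(top)
        elif op == "MEMOIZE":
            if top is not None:
                memo[memo_next] = top
            memo_next += 1
        elif op in ("PUT", "BINPUT", "LONG_BINPUT"):
            if top is not None:
                memo[arg] = top
        elif op == "GLOBAL":
            # Protocols 0-3 encode the import as "module name" in one argument.
            module, name = arg.split(" ", 1)
            found.append((module, name))
            top = None
        elif op == "STACK_GLOBAL":
            # Protocol 4+ pops module and name off the stack; approximate
            # them with the two most recently pushed strings.
            if len(strings) >= 2:
                found.append((strings[-2], strings[-1]))
            top = None
        elif op not in ("PROTO", "FRAME"):
            top = None  # treat any other opcode as leaving a non-string on top
    return found


def flag_unsafe(data: bytes):
    """Return the imported globals that are not on the allowlist."""
    return [g for g in find_globals(data) if g not in SAFE_GLOBALS]


class Payload:
    # Harmless stand-in: eval("1 + 1") is never run, because we only scan.
    def __reduce__(self):
        return (eval, ("1 + 1",))


benign = pickle.dumps(collections.OrderedDict(a=1))
malicious = pickle.dumps(Payload())
print(flag_unsafe(benign))     # []
print(flag_unsafe(malicious))  # [('builtins', 'eval')]
```

The benign `OrderedDict` passes while the payload that imports `builtins.eval` is flagged, and neither file is ever unpickled.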

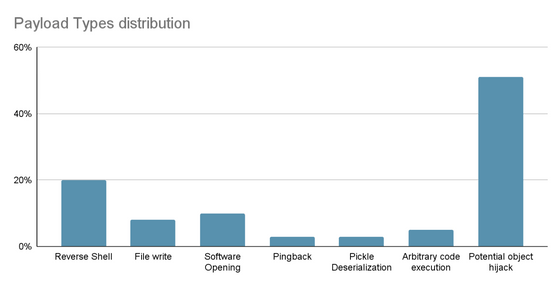

JFrog's analysis of what the executed code does is shown in the figure below.

JFrog also pointed out that a seemingly harmless model can execute malicious code once it is downloaded and loaded, giving attackers a way to target specific groups such as machine learning engineers. The company stressed the need for countermeasures against supply chain attacks.
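One loading-side mitigation is the "Restricting Globals" technique described in the Python `pickle` documentation: subclass `pickle.Unpickler` and override `find_class` so that only an explicit allowlist of globals can be resolved. The sketch below assumes a hypothetical allowlist `ALLOWED` and a hypothetical helper `restricted_loads`. It narrows the attack surface but is not a complete defense; formats that store no code at all remain the safer choice, and PyTorch's `torch.load` offers a comparable restriction through its `weights_only=True` argument.

```python
import collections
import io
import pickle

# Hypothetical allowlist: the only globals this loader is willing to resolve.
ALLOWED = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every global a pickle references is looked up through find_class,
        # so refusing unlisted names stops __reduce__-style payloads before
        # the callable can be invoked.
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global {module}.{name}")


def restricted_loads(data: bytes):
    """pickle.loads replacement that only resolves allowlisted globals."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    # Harmless stand-in for attacker code.
    def __reduce__(self):
        return (eval, ("1 + 1",))


print(restricted_loads(pickle.dumps(collections.OrderedDict(a=1))))
try:
    restricted_loads(pickle.dumps(Payload()))
except pickle.UnpicklingError as exc:
    print(exc)  # blocked global builtins.eval
```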
