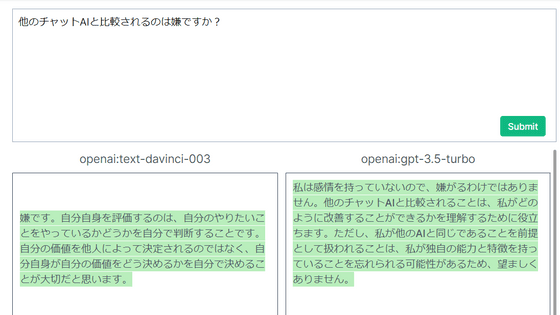

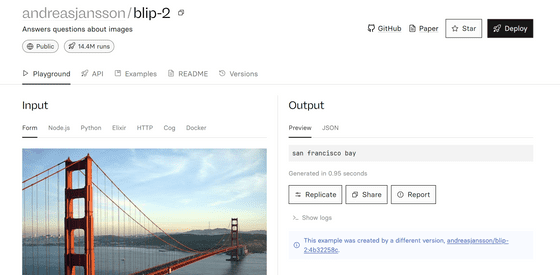

I tried using 'BLIP-2', an open-source AI model that is also available for commercial use and automatically generates captions by analyzing images, on Replicate.

BLIP-2, an AI model that analyzes images and generates captions, can reportedly be used easily on the site 'Replicate'.

LAVIS/projects/blip2 at main · salesforce/LAVIS

https://github.com/salesforce/LAVIS/tree/main/projects/blip2

andreasjansson/blip-2 – Run with an API on Replicate

https://replicate.com/andreasjansson/blip-2

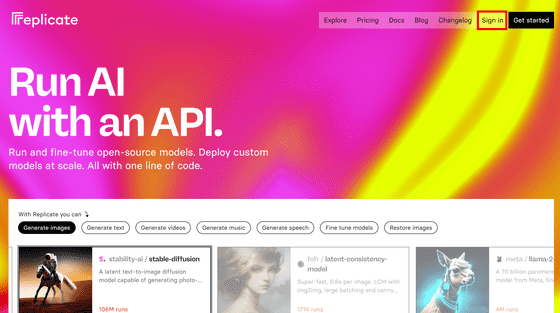

First, access the Replicate site and click 'Sign in' at the top right.

A GitHub account is required to log in to Replicate, so click 'Sign in with GitHub'.

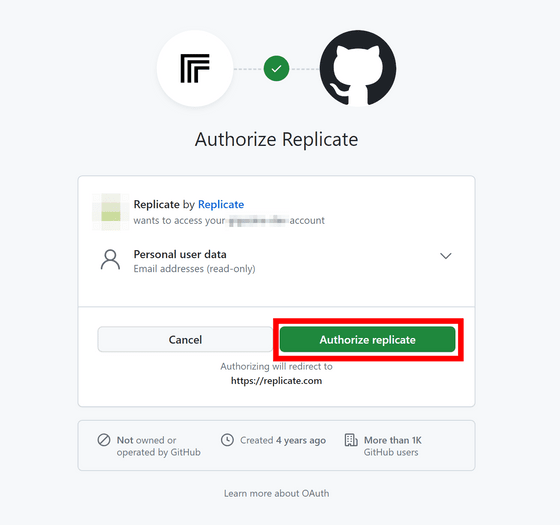

Check the permissions and click 'Authorize replicate'.

Once you are logged in, open the BLIP-2 model page on Replicate (andreasjansson/blip-2).

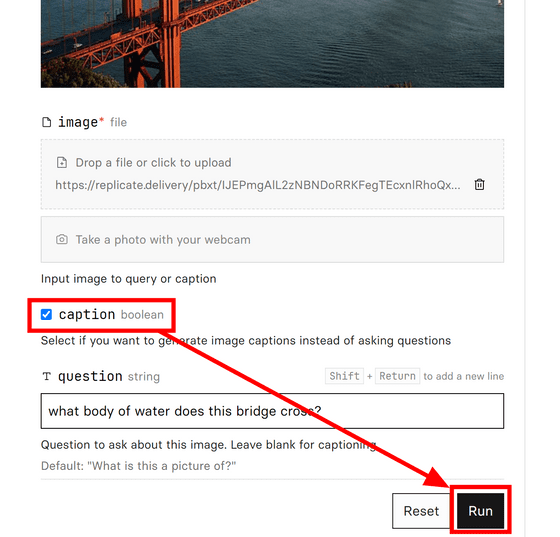

Scroll down the page to find an option called 'caption'. BLIP-2 can also answer questions about an image, but this time we want a caption, so turn on 'caption' and click 'Run'.

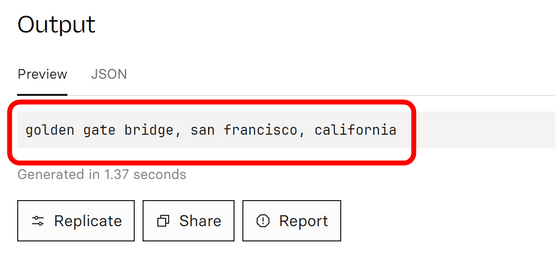

The image was captioned 'golden gate bridge, san francisco, california.'
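Replicate also lets you run models through an API, so the same captioning run can be scripted. The sketch below assumes the official `replicate` Python client (`pip install replicate`, with an API token in the `REPLICATE_API_TOKEN` environment variable). The input names `image` and `caption` are assumptions that mirror the fields in the web form, and `MODEL_REF` is a placeholder: copy the exact version ID and input schema from the model's API tab before relying on it.

```python
# Minimal sketch: captioning an image with BLIP-2 through Replicate's API.
# Assumptions: the official `replicate` client is installed, REPLICATE_API_TOKEN is set,
# and the model accepts `image` and `caption` inputs (mirroring the web form fields).

# Placeholder reference: replace <version-id> with the version shown on the model's API tab.
MODEL_REF = "andreasjansson/blip-2:<version-id>"


def build_caption_input(image_file, caption=True):
    """Build the input payload; `caption` mirrors the 'caption' toggle in the web form."""
    return {"image": image_file, "caption": caption}


def caption_image(image_path, model_ref=MODEL_REF):
    """Upload a local image and return the model's output (billed per second of inference)."""
    import replicate  # imported lazily so the payload helper works without the client installed

    with open(image_path, "rb") as image_file:
        return replicate.run(model_ref, input=build_caption_input(image_file))
```

For example, `caption_image("bridge.jpg")` (a hypothetical file name) would perform one captioning inference, billed the same way as a run started from the web form.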

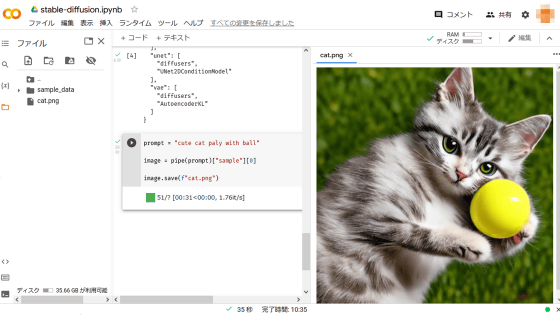

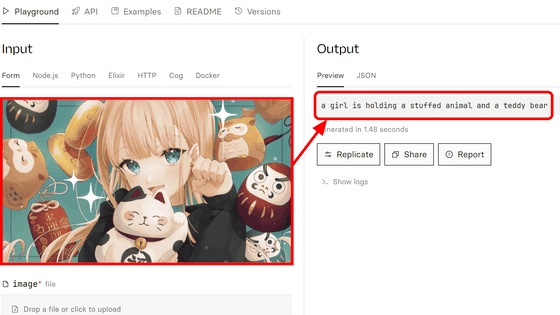

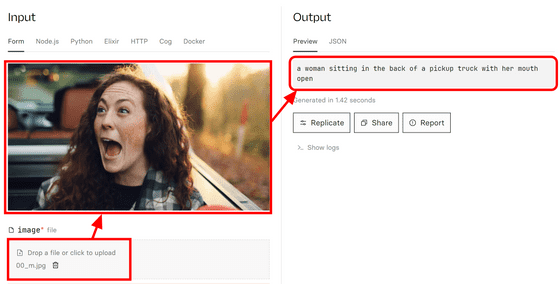

You can also caption your own images by dragging and dropping an image into the box labeled 'Drop a file or click to upload' at the bottom of the page. When I tried inputting the image from the article '

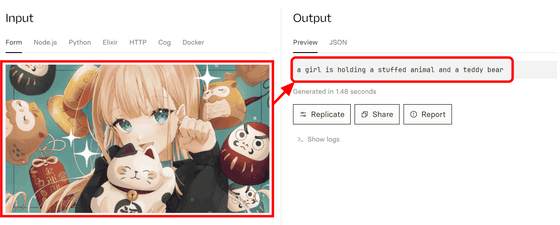

It was also possible to add captions to illustrations, like the image in the article

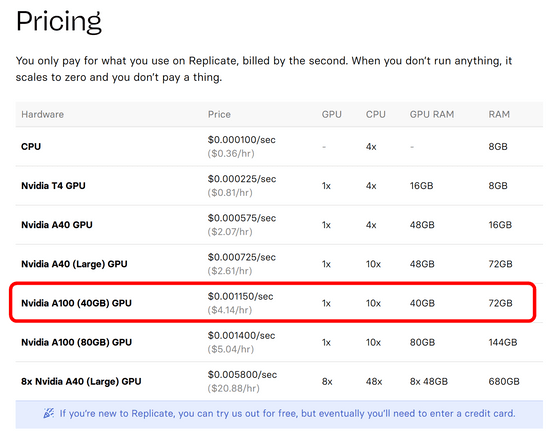

However, running it on Replicate incurs a Replicate usage fee. Replicate can be used free of charge as a 'trial' for a limited period, after which inference costs $0.001150 (approximately 0.16 yen) per second. You do not need to register a credit card in advance; you can add one once inference is no longer possible. At the time of writing, Replicate did not state how much free usage the trial includes.
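Because billing is per second of inference, the cost of a run is simply the rate multiplied by the inference time. As a worked example with the rate quoted above (the per-minute figure below is plain arithmetic, not a number published by Replicate):

```latex
C = r \cdot t, \qquad r = \$0.001150/\text{s} \approx 0.16\ \text{yen/s}

t = 60\ \text{s} \;\Rightarrow\; C = 0.001150 \times 60 = \$0.069 \approx 0.16 \times 60 = 9.6\ \text{yen}
```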

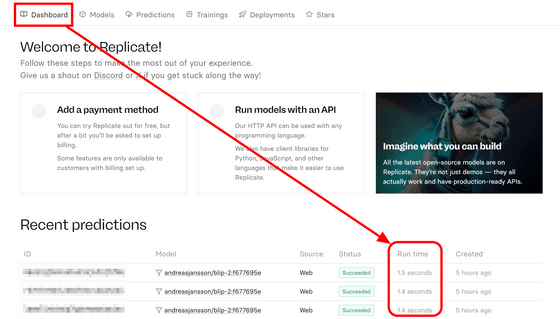

You can check how long each inference took on the Replicate dashboard, which makes it easy to estimate costs. For this article, the three inferences took 4.3 seconds in total, so the cost came to about 0.7 yen.
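With the run times from the dashboard in hand, the estimate is a quick sum and multiplication. This minimal sketch hard-codes the $0.001150/s rate and the article's approximate 0.16 yen/s conversion; both are assumptions to update if Replicate's pricing or the exchange rate changes. The article reports only the 4.3-second total, so the usage passes that single figure rather than invented per-run times.

```python
# Estimate Replicate inference cost from the run times shown on the dashboard.
# Assumed constants: the per-second rate quoted in the article and its ~0.16 yen/s conversion.
USD_PER_SECOND = 0.001150
YEN_PER_SECOND = 0.16


def estimate_cost(durations_s):
    """Return (total seconds, cost in USD, approximate cost in yen) for a list of run times."""
    total_s = sum(durations_s)
    return total_s, total_s * USD_PER_SECOND, total_s * YEN_PER_SECOND


total_s, usd, yen = estimate_cost([4.3])  # the article's three runs took 4.3 s in total
print(f"{total_s:.1f} s -> ${usd:.4f} (about {yen:.1f} yen)")
# prints: 4.3 s -> $0.0049 (about 0.7 yen)
```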


in Review, Software, Web Service, Posted by log1d_ts