Apple aims to run large language models locally on the iPhone

Researchers at Apple have published a paper titled 'LLM in a flash: Efficient Large Language Model Inference with Limited Memory' on the preprint server arXiv. The paper presents a 'solution that paves the way for efficient inference of large language models (LLMs) on devices with limited memory,' i.e., technology for running LLMs on devices such as the iPhone. It is believed that Apple aims to run LLMs on iPhones in the future.

[2312.11514] LLM in a flash: Efficient Large Language Model Inference with Limited Memory

Paper page - LLM in a flash: Efficient Large Language Model Inference with Limited Memory

https://huggingface.co/papers/2312.11514

Apple Develops Breakthrough Method for Running LLMs on iPhones - MacRumors

https://www.macrumors.com/2023/12/21/apple-ai-researchers-run-llms-iphones/

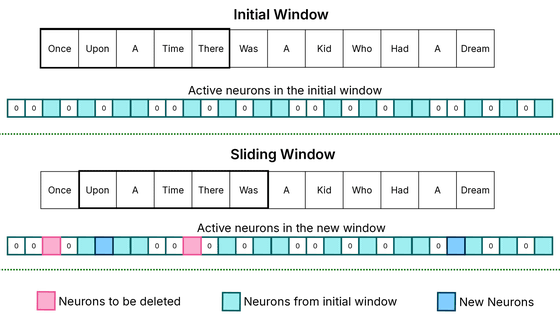

In their paper, the research team notes that mobile devices such as smartphones have more abundant flash memory storage than the RAM traditionally used for LLM execution, and aims to maximize the throughput of flash memory using two techniques: 'windowing' and 'row-column bundling.'

'Windowing' means that an AI model reuses some of the data it has already processed for recent tokens, rather than loading new data each time. This reduces the amount of data that must be fetched from flash memory, making the process faster and smoother. 'Row-column bundling' is a technique that increases the size of the data chunks being read, to suit flash memory, which performs best with large sequential reads.
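The two ideas can be illustrated with a toy sketch in Python. This is not the paper's implementation: the names `bundle_rows_columns` and `WindowedNeuronCache`, the list standing in for flash storage, and the assumption that each neuron's data consists of one column of an up-projection matrix plus one row of a down-projection matrix are all hypothetical simplifications chosen for illustration.

```python
from collections import deque


def bundle_rows_columns(up_proj, down_proj):
    """Store column i of up_proj next to row i of down_proj as one record.

    up_proj is d_model x d_ff, down_proj is d_ff x d_model (lists of lists).
    Each record can then be fetched with a single, larger contiguous read.
    """
    d_model, d_ff = len(up_proj), len(up_proj[0])
    return [
        [up_proj[r][i] for r in range(d_model)] + list(down_proj[i])
        for i in range(d_ff)
    ]


class WindowedNeuronCache:
    """Keep in DRAM only the neurons used by the last `window_size` tokens."""

    def __init__(self, bundled_flash, window_size):
        self.flash = bundled_flash      # stand-in for flash storage
        self.window_size = window_size
        self.history = deque()          # active neuron sets of recent tokens
        self.dram = {}                  # neuron id -> bundled record
        self.flash_reads = 0

    def step(self, active_neurons):
        active = set(active_neurons)
        # Load only the neurons that are not already resident in DRAM.
        for n in sorted(active - set(self.dram)):
            self.dram[n] = self.flash[n]
            self.flash_reads += 1
        # Slide the window forward by one token.
        self.history.append(active)
        if len(self.history) > self.window_size:
            self.history.popleft()
        # Evict neurons that no token inside the window needs any more.
        needed = set().union(*self.history)
        for n in list(self.dram):
            if n not in needed:
                del self.dram[n]
        return sorted(self.dram)


# Toy usage: 6 neurons, model width 2, window of 2 tokens.
up = [[float(r * 10 + c) for c in range(6)] for r in range(2)]
down = [[float(100 + r * 10 + c) for c in range(2)] for r in range(6)]
cache = WindowedNeuronCache(bundle_rows_columns(up, down), window_size=2)
for token_neurons in ([0, 1, 2], [1, 2, 3], [2, 3, 4]):
    cache.step(token_neurons)
print(cache.flash_reads)  # 5 reads instead of 9 without reuse
```

In this toy run, consecutive tokens share most of their active neurons, so only the newly needed ones are read from storage, and each read returns both halves of a neuron's weights at once.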

According to Apple's research team, combining windowing and row-column bundling makes it possible to run models up to twice the size of the available DRAM, with inference 4 to 5 times faster on CPU and 20 to 25 times faster on GPU than naive loading approaches.

Google has already begun bringing LLMs, which typically run in data centers, to mobile devices such as smartphones. The company has announced that it is deploying Gemini Nano, the smallest version of its multimodal AI, on the Pixel 8 Pro, running it as a local AI on the device rather than in the cloud.

Gemini Nano, a local-first LLM that runs on smartphones instead of on the cloud, is now compatible with the Pixel 8 Pro, and will be used to enhance Gboard's Smart Reply and recorder auto-summarization - GIGAZINE

Meanwhile, Apple has been including its virtual assistant, Siri, in its devices, including the iPhone, since 2011. However, Siri is not a chatbot that generates human-like conversational sentences like ChatGPT, Bing Chat, or Gemini, but is merely an assistant tool that enables operation via voice input.

While it has been said that Apple is lagging behind Google and Microsoft in AI technology, it has been reported that Apple has already built its own LLM called 'Ajax' and is developing its own chatbot AI, internally known as 'Apple GPT.' According to MacRumors, an Apple-related news site, this Ajax is designed to rival OpenAI's GPT-3 or GPT-4 and operates with 200 billion parameters.

Is Apple developing its own large-scale language model and chatbot AI 'Apple GPT'?

In November 2023, CEO Tim Cook acknowledged that Apple was working on generative AI, saying, 'We're researching generative AI, and there will come a time when we'll unveil products that are centered around it,' without revealing any details.

Apple CEO Tim Cook reiterates that 'we are working responsibly on developing generative AI' - GIGAZINE

Apple's generative AI efforts may ultimately be incorporated into Siri. It was reported in October 2023 that the software engineering group will incorporate AI features into iOS 18, with LLM-based sentence generation being applied to Siri and messaging apps. The company is also considering integrating generative AI into development tools like Xcode, and is planning to introduce a coding support AI that autocompletes code, similar to Microsoft's GitHub Copilot.

Additionally, Jeff Pu, an analyst at Haitong International Securities, predicts that Apple will include generative AI features for iPhones and iPads in iOS 18, which will likely be released in late 2024. According to Pu, Apple would already have several hundred AI servers ready as of October 2023, with plans to further expand the number in 2024.

The paper is intended to demonstrate how LLMs can be run on iPhones. The research team commented, 'This breakthrough is particularly important for deploying advanced LLMs in resource-limited environments, expanding their applicability and accessibility. The integration of sparsity awareness, context-adaptive loading, and hardware-oriented design paves the way for effective inference of LLMs on memory-limited devices.'


in AI, Software, Smartphone, Posted by log1i_yk