'BrainGPT', which reads the mind and converts thoughts into text, has appeared, and a video shows the experiment in action

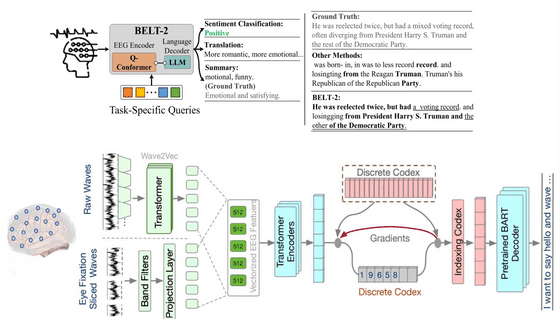

At NeurIPS, the machine learning and computational neuroscience conference held from December 10 to 16, 2023, a research team from the University of Technology Sydney presented a large-scale project to 'translate raw brain waves directly into language.' Their paper on the language model 'BrainGPT' was selected as a featured paper.

Portable, non-invasive, mind-reading AI turns thoughts into text | University of Technology Sydney

New Mind-Reading 'BrainGPT' Turns Thoughts Into Text On Screen | IFLScience

https://www.iflscience.com/new-mind-reading-brainingpt-turns-thoughts-into-text-on-screen-72054

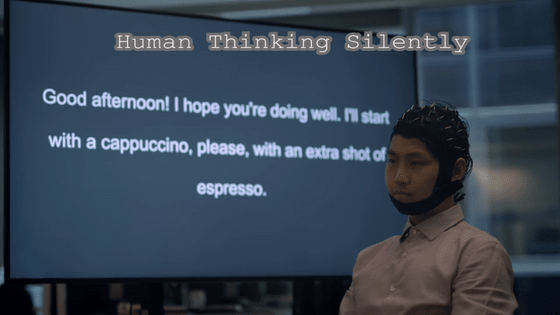

A video of the experiment, in which the system actually reads human thoughts, has been published on YouTube.

UTS HAI Research - BrainGPT - YouTube

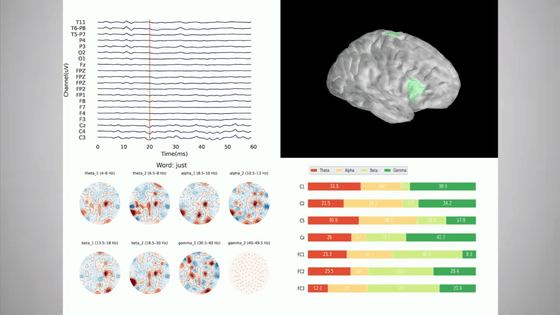

BrainGPT is a system that uses a large language model to generate text solely from brain waves recorded through a cap worn on the head, without requiring an MRI machine or a brain implant. 'For the first time, we have incorporated discrete encoding techniques into the brain-to-text translation process,' the research team said.
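The article does not explain what 'discrete encoding' means here. One common form of discrete encoding is vector quantization: each continuous feature vector is replaced by the index of its nearest entry in a codebook, which turns a continuous signal into a sequence of tokens. The sketch below is a generic illustration of that idea under that assumption, not BrainGPT's actual method; the functions `quantize` and `encode`, the codebook, and the 'EEG feature' frames are all hypothetical toy values (a real system would learn its codebook).

```python
def quantize(vector, codebook):
    """Return the index of the codebook entry closest to `vector`
    by squared Euclidean distance (generic vector quantization)."""
    best_index, best_dist = 0, float("inf")
    for i, code in enumerate(codebook):
        dist = sum((v - c) ** 2 for v, c in zip(vector, code))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


def encode(frames, codebook):
    """Map a sequence of continuous feature frames to discrete token ids."""
    return [quantize(frame, codebook) for frame in frames]


# Hypothetical toy data: a 3-entry codebook and four 2-D "feature" frames.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]
frames = [[0.1, -0.2], [0.9, 1.2], [-0.8, 0.4], [0.2, 0.1]]
print(encode(frames, codebook))  # prints [0, 1, 2, 0]
```

The point of the discrete step is that the output is a list of integers, the same kind of input a language model already works with, rather than raw continuous signals.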

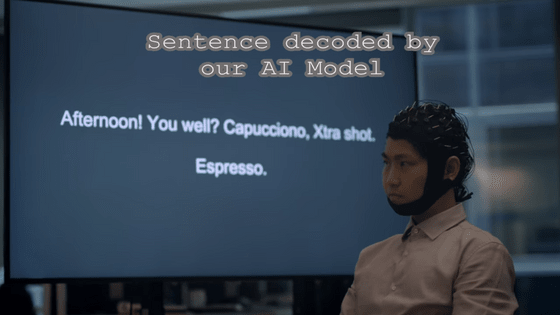

In the experiment, subjects were asked to think about text displayed on a screen. The first example sentence is 'Good afternoon! I hope you're doing well. I'll start with a cappuccino, please, with an extra shot of espresso.'

The output decoded by BrainGPT was 'Afternoon! You well? Capuccino, Xtra shot. Espresso.' Although much of the information is missing, each fragment is recognizable.

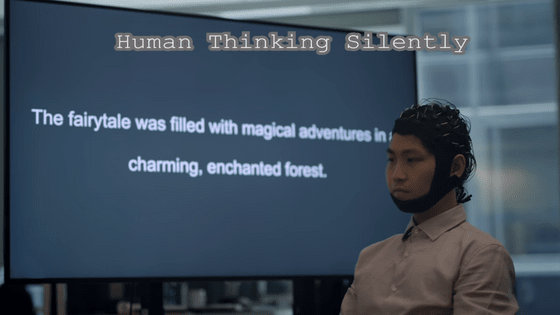

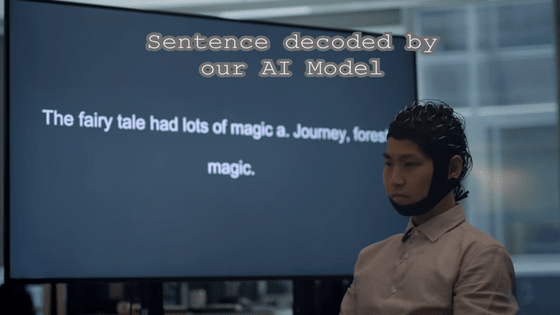

The next example sentence is 'The fairytale was filled with magical adventures in a charming, enchanted forest.'

The output was 'The fairy tale had lots of magic a. Journey, forest magic.' Perhaps because this sentence is shorter than the previous one, the output is closer to the original in overall meaning.

Yikun Duan, lead author of the paper, said of BrainGPT, 'It is better at matching verbs than nouns. For nouns, it often outputs synonyms, such as "human" instead of "author."' He speculates that this is because 'when the brain processes semantically similar nouns, it may produce similar brain wave patterns.'

Accuracy was measured with BLEU, an algorithm that scores how closely the text decoded from the EEG matches the original text, and the result was approximately 0.4. The research team believes it is possible to raise this score to 0.9, which is comparable to conventional language translation programs.
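For readers unfamiliar with BLEU, here is a minimal sketch of the standard unsmoothed sentence-level score: clipped n-gram precisions combined by geometric mean, multiplied by a brevity penalty for outputs shorter than the reference. It is an illustration only; BLEU comes in variants (BLEU-1 through BLEU-4, sentence- or corpus-level, with or without smoothing), the article does not say which variant produced the 0.4 figure, and the simple lowercase-and-strip-punctuation tokenization below is an assumption.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU of one tokenized candidate against one reference."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by how often it occurs in the reference.
        matches = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        if total == 0 or matches == 0:
            return 0.0  # without smoothing, any zero precision zeroes the score
        log_precision_sum += math.log(matches / total)
    # Brevity penalty: candidates shorter than the reference are penalized.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_precision_sum / max_n)


def tokenize(text):
    """Naive tokenizer (an assumption): lowercase, drop commas/periods, split."""
    return text.lower().replace(",", "").replace(".", "").split()


reference = tokenize("The fairytale was filled with magical adventures in a charming, enchanted forest.")
candidate = tokenize("The fairy tale had lots of magic a. Journey, forest magic.")
print(round(sentence_bleu(candidate, reference, max_n=1), 3))  # prints 0.249
print(sentence_bleu(candidate, reference))  # prints 0.0 (no matching bigrams)
```

On the fairytale example, only "the", "a" and "forest" match exactly ("magic" and "fairy tale" do not count as "magical" and "fairytale"), which shows why exact-match metrics like BLEU undercount paraphrases and synonyms such as the "author"/"human" substitution Duan describes.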

The paper has not yet been peer-reviewed, but a preprint is available on arXiv for anyone interested.
