"Human-SGD" announced: AI that generates a high-resolution 3DCG model from just one photo

Creating a 3DCG model from photos normally requires shooting the subject many times from different directions. Researchers from Kuwait University, Meta, and the University of Maryland have announced "Human-SGD," which generates a high-resolution CG model from just one photo.

[2311.09221] Single-Image 3D Human Digitization with Shape-Guided Diffusion

Human-SGD

https://human-sgd.github.io/

Jia-Bin Huang, one of the researchers, has released a video on YouTube explaining how Human-SGD works.

3D Human Digitization from a Single Image! - YouTube

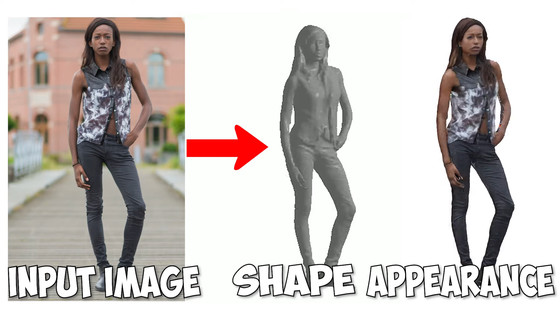

A single photo of a woman wearing a biker jacket.

The 3DCG model generated from this photo is shown below. Although the photo was taken only from the front, the result is a high-precision 3DCG model that holds up when viewed from any angle around the full 360 degrees.

A photo of a man walking in a polo shirt and shorts.

Not only the textures but also fine details of the shape are reproduced, such as the hem of the shorts and the untucked hem of the polo shirt hanging out over the waistband.

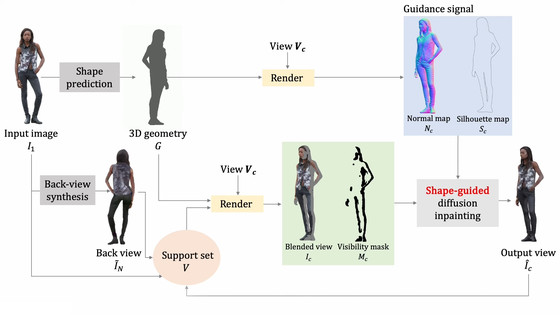

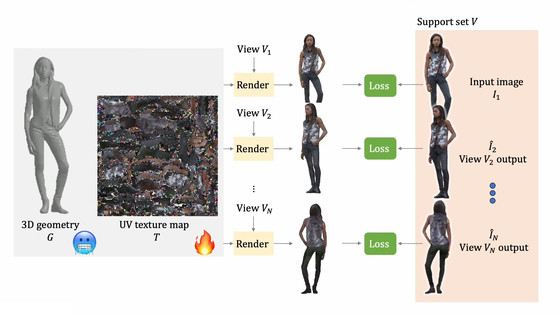

Human-SGD generates a shape and textures from the input photo and combines them.

The generation process works as follows. Human-SGD renders a shape from the silhouette in the photo, then automatically generates a back-view image from the front photo, and combines the two views when rendering.

Textures are generated using a diffusion model.

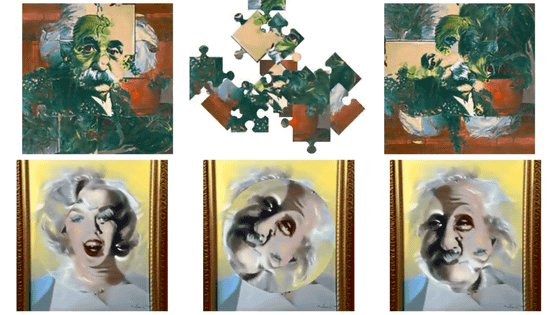

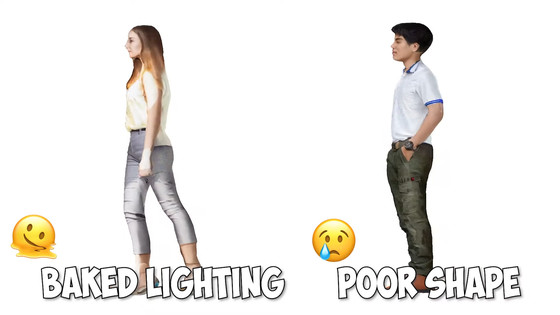

Three photos were presented for comparison.

The CG model generated by

The model generated with Human-SGD has a relatively natural shape, and its low-noise textures are applied cleanly.

The back, which does not appear in the photo, also looks natural, giving the impression of few defects.

However, the research team notes that strong shadows in the input photo interfere with texture generation. In addition, generating more precise shapes remains difficult with existing methods, so further research is required.
