A method that generates 'a 3D image with depth, in which the viewpoint can be moved back and forth and left and right' from just one image has been developed

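In recent years, technology for generating '3D images' with a sense of depth has made great progress, and researchers have now announced a method that generates, from a single image, a 3D image in which the viewpoint can be moved.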

Worldsheet: Wrapping the World in a 3D Sheet for View Synthesis from a Single Image

https://www.researchgate.net/publication/347442173_Worldsheet_Wrapping_the_World_in_a_3D_Sheet_for_View_Synthesis_from_a_Single_Image

Worldsheet: Wrapping the World in a 3D Sheet for View Synthesis from a Single Image

https://worldsheet.github.io/

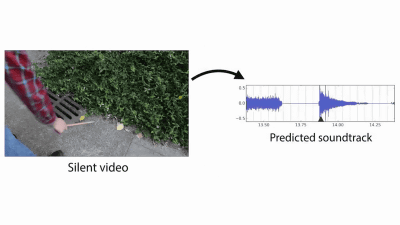

Several techniques for generating images with a sense of depth have been developed. In September 2019, a technique for generating 'images in which the viewpoint moves as if the camera were moving' was announced, and in April 2020, a method for generating 'a 3D image with depth, made up of multiple layers, from a single image' was announced.

A paper that allows you to easily create elaborate 3D photographs from images will be published, and it is also possible to actually generate 3D photographs --GIGAZINE

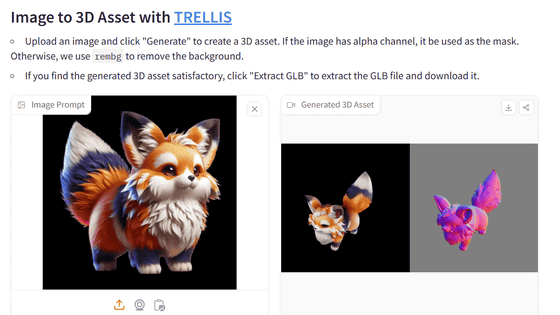

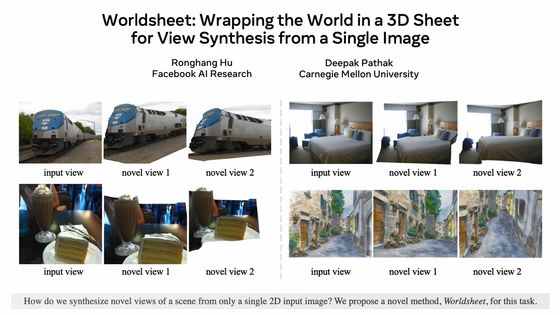

Now, researchers at Facebook AI Research and Carnegie Mellon University have announced a method called 'Worldsheet.' With this method, not only can the viewpoint be moved over a wider range than before, but the generated 3D image also shows less distortion from the 3D conversion. The 3D images generated by the new method, and how it works, are introduced in the following movie.

Worldsheet: View Synthesis from a Single Image --YouTube

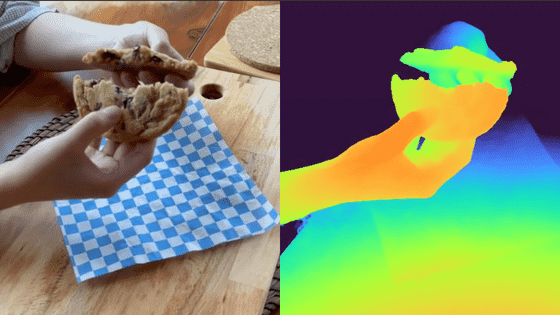

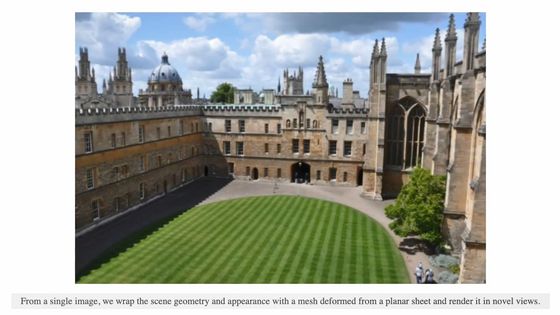

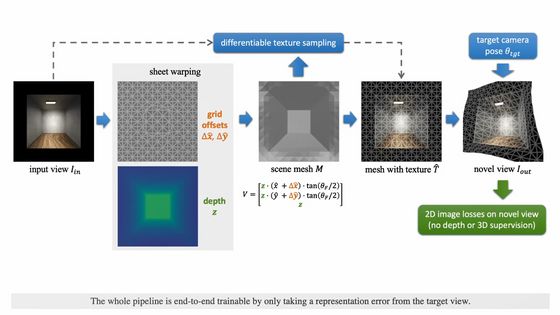

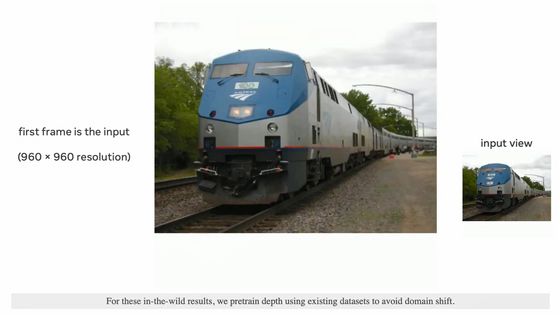

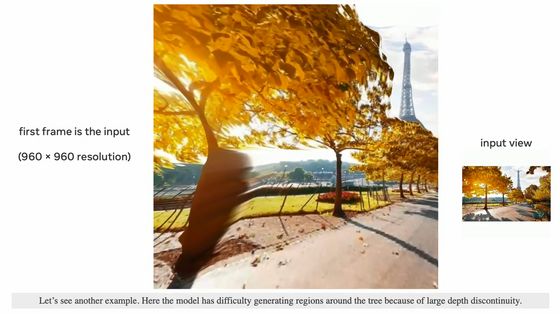

Worldsheet was announced by Ronghang Hu of Facebook AI Research and Deepak Pathak of Carnegie Mellon University. A single image carries only a limited amount of information, so the challenges in generating a 3D image from it are understanding the 3D shape of the scene and mapping textures so that they hold up from different viewpoints. To solve this problem, the two devised a mechanism that 'captures the 3D shape by wrapping a flat mesh sheet so that it matches the learned depth.' For example, when a single image like the one below is input ...

Worldsheet estimates the depth in the image, wraps a flat mesh sheet over it to capture the 3D shape, and then performs texture mapping.

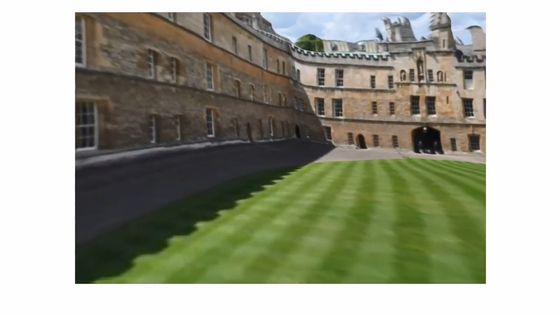

In the 3D image generated in this way ...

It is possible to move the viewpoint quickly.

As you approach the walls of the building, you can really sense the height of the wall on your left.

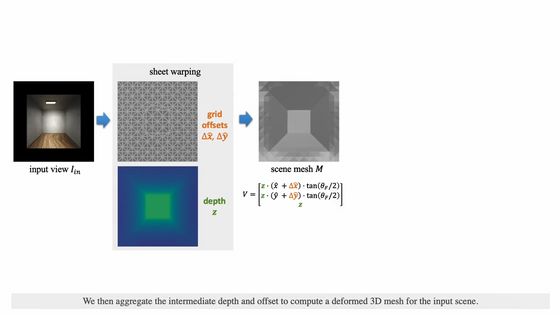

The mechanism is illustrated below. First, depth information is inferred from a single image, and a grid-like mesh sheet is wrapped onto the scene based on this information. These operations are said to be performed by a neural network.

Because the texture is mapped onto the shape of the mesh sheet, it stays naturally in place even when the position of the viewpoint changes.
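The steps described here (infer depth, lay a grid-like mesh sheet over it, attach the texture) can be sketched roughly as follows. This is only a minimal NumPy illustration under stated assumptions, not the researchers' implementation: the function names `wrap_sheet` and `project`, the pinhole camera with focal length `focal`, and the synthetic depth map are all hypothetical, and in Worldsheet the depth is inferred from the image rather than given.

```python
import numpy as np

def wrap_sheet(depth, grid_h, grid_w, focal):
    """Lift a flat grid_h x grid_w mesh sheet into 3D using a depth map.

    Assumes a pinhole camera whose principal point is the image center.
    """
    img_h, img_w = depth.shape
    # Pixel positions of the sheet's vertices, evenly spaced over the image.
    ys = np.linspace(0, img_h - 1, grid_h)
    xs = np.linspace(0, img_w - 1, grid_w)
    px, py = np.meshgrid(xs, ys)  # each has shape (grid_h, grid_w)
    # Depth at each vertex, sampled from the nearest pixel.
    z = depth[np.rint(py).astype(int), np.rint(px).astype(int)]
    # Unproject each vertex from pixel coordinates into 3D camera space.
    cx, cy = (img_w - 1) / 2.0, (img_h - 1) / 2.0
    x = (px - cx) * z / focal
    y = (py - cy) * z / focal
    vertices = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Texture (UV) coordinates: each vertex keeps pointing at its source pixel.
    uv = np.stack([px / (img_w - 1), py / (img_h - 1)], axis=-1).reshape(-1, 2)
    # Two triangles per grid cell.
    idx = np.arange(grid_h * grid_w).reshape(grid_h, grid_w)
    tl, tr = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()
    bl, br = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()
    faces = np.concatenate([np.stack([tl, bl, tr], axis=1),
                            np.stack([tr, bl, br], axis=1)])
    return vertices, uv, faces

def project(vertices, focal, cx, cy, camera_shift):
    """Project 3D vertices into a camera translated by camera_shift."""
    p = vertices - np.asarray(camera_shift, dtype=float)
    u = focal * p[:, 0] / p[:, 2] + cx
    v = focal * p[:, 1] / p[:, 2] + cy
    return np.stack([u, v], axis=-1)

# Synthetic depth map (an assumption for the demo): near on the left, far on the right.
depth = np.tile(np.linspace(2.0, 6.0, 64), (48, 1))
vertices, uv, faces = wrap_sheet(depth, grid_h=5, grid_w=7, focal=50.0)

# Same camera: vertices land back on their source pixels.
original = project(vertices, 50.0, 31.5, 23.5, (0.0, 0.0, 0.0))
# Camera moved to the right: near vertices shift more than far ones (parallax).
shifted = project(vertices, 50.0, 31.5, 23.5, (0.5, 0.0, 0.0))
displacement = original[:, 0] - shifted[:, 0]
```

Because the UV coordinates are fixed to the vertices, a renderer that rasterizes `faces` at the `shifted` positions would carry the original image's texture along with the geometry, which is the effect described above. A real pipeline would also need a mesh renderer, which this sketch leaves out.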

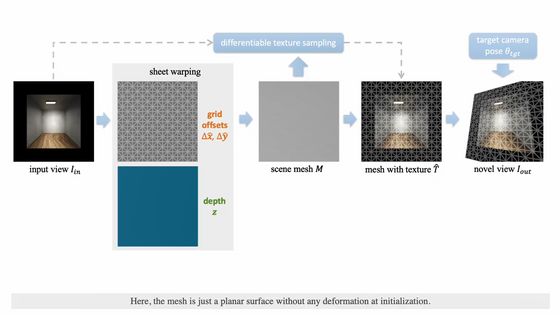

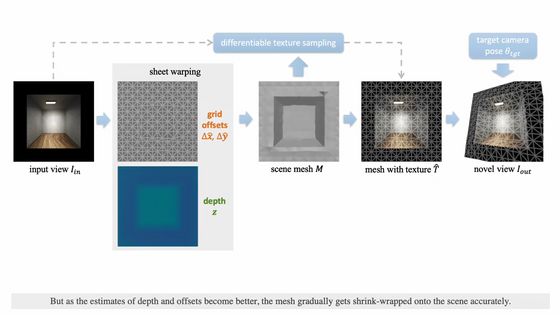

This method does not require 3D data when training the neural network; it is trained using only 2D images. The mesh sheet does not form any depth in the early stages of training, but ...

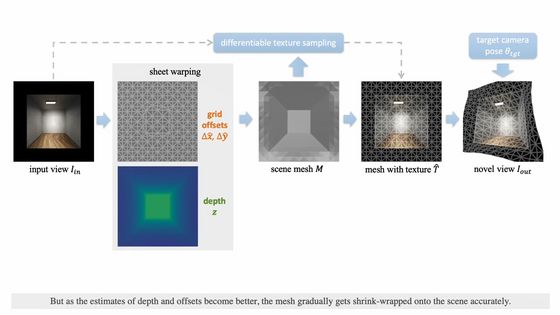

It gradually starts to capture the 3D shape.

Finally, the research team says, it becomes able to generate 3D images that accurately reproduce the space.

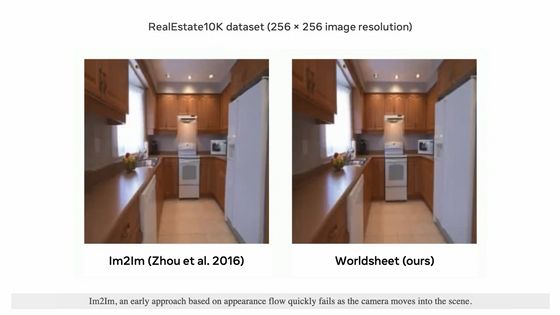

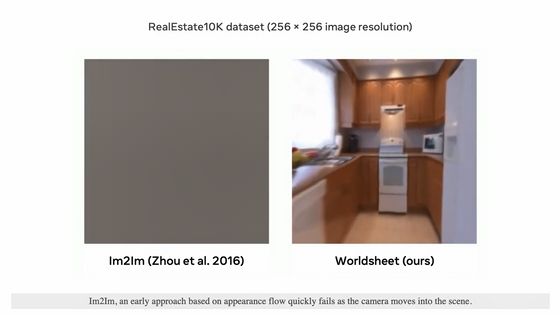

The movie also compares Worldsheet with earlier techniques. Comparing the 3D image generated by 'Im2Im,' a method developed in 2016, with the 3D image generated by Worldsheet (right) ...

In the 3D image generated by Im2Im, parts of the image may fail to display when the viewpoint is moved, probably because the mapping is insufficient. The Worldsheet 3D image, on the other hand, is still displayed intact even when the viewpoint moves.

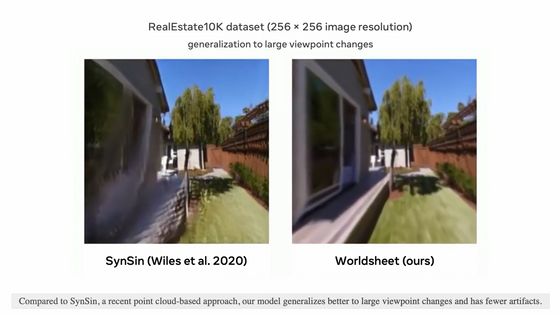

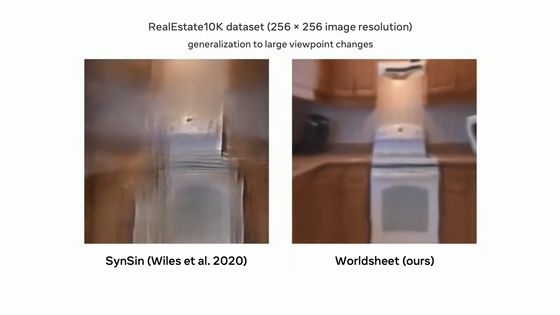

Compared with the 3D image generated by 'SynSin' (left), developed in 2020, you can't tell the difference at first glance ...

When the viewpoint was moved toward the back, the SynSin 3D image was badly distorted. The Worldsheet 3D image, however, remained almost undistorted.

If you move the viewpoint to the left and look at the side of the house, you can clearly see that the 3D image generated by Worldsheet maps textures better.

Comparing with SynSin on the same image that was used for the Im2Im comparison ...

Here too, it was confirmed that the distortion when moving the viewpoint toward the back was greatly reduced with Worldsheet.

In the photo taken from the sky at the boundary between the waterside and the forest ...

You can see a big difference in the distortion when zooming and rotating the camera.

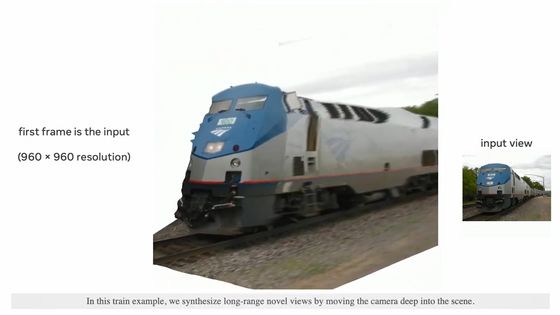

This is a 3D image generated by inputting a picture of a train into Worldsheet.

In addition to being able to line up next to the train as if walking in the image ...

It is also possible to look at the train from a different angle than the first.

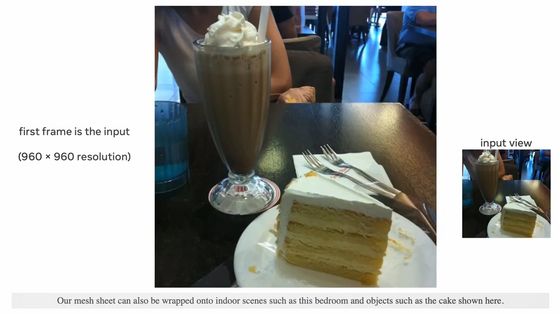

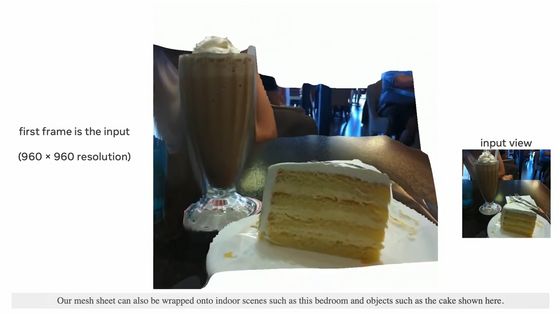

A cake photographed from diagonally above can also be viewed from the side.

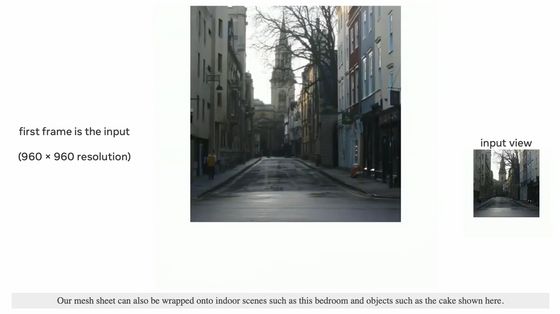

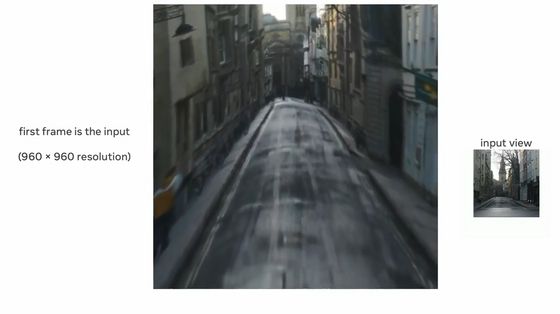

In the photo of the road in the town ...

It is possible to move further down the road, as in Street View.

The image may be distorted to some extent, but you can also turn to the side ...

It was even possible to 'turn around' toward where the camera should be.

It is also possible to raise the viewpoint to an angle that looks down on the road.

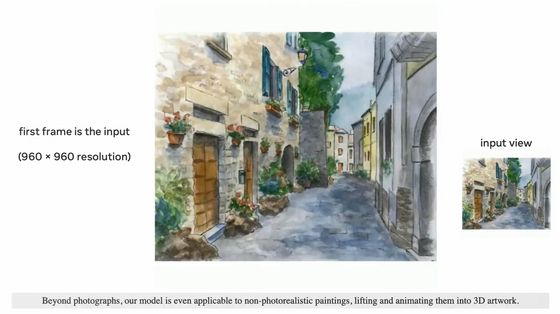

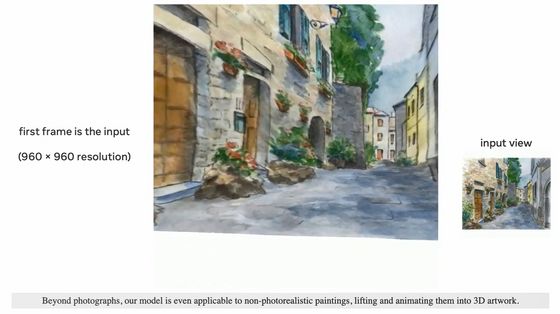

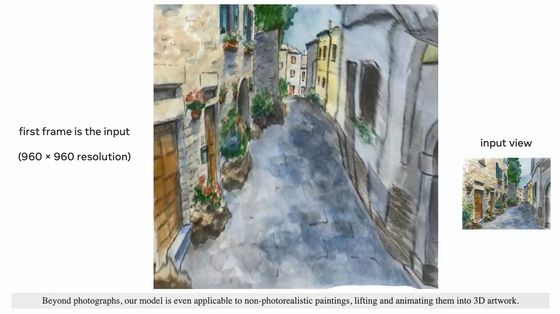

In addition, it is possible to generate 3D images by applying Worldsheet to illustrations as well as photographs.

Bring your point of view closer to the ground ...

You can look down on the alley from above.

It is also reported that 3D images sometimes cannot be generated well for objects with discontinuous depth boundaries, such as flowers and trees.
