Introducing a smart speaker that can automatically deploy like a Roomba and pick up only the voice of a specific person

It is extremely difficult to distinguish who is saying what in a space where many different people are speaking. This is true not only for humans but also for microphones, and it is extremely difficult to distinguish voices emitted from various areas with microphones installed in one place. The results of a study were reported that solved this problem with a small robot microphone that automatically deploys.

Creating speech zones with self-distributing acoustic swarms | Nature Communications

UW team's shape-changing smart speaker lets u | EurekAlert!

https://www.eurekalert.org/news-releases/1001914

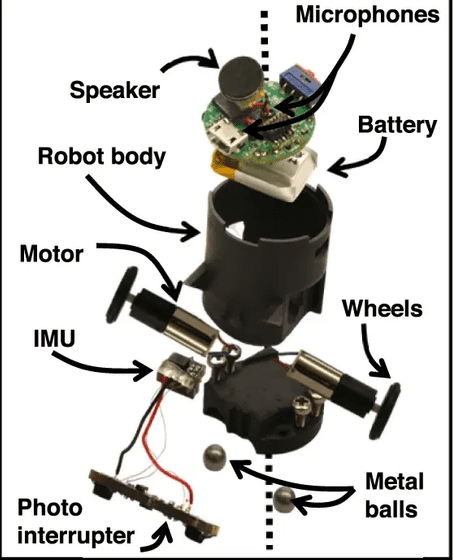

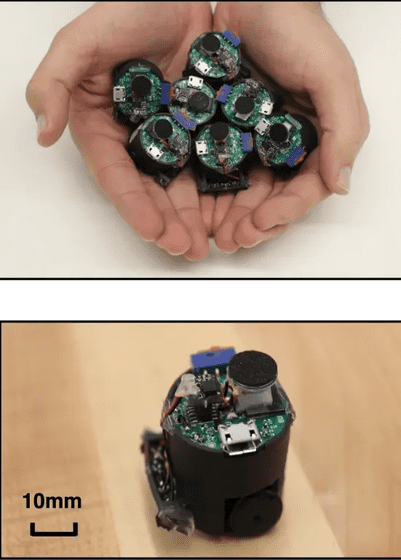

The robot prototype developed by Tuchao Chen and others at the University of Washington is as follows. It's built with speakers, microphones, batteries, wheels, motors, and more.

Dimensions are 3.0cm x 2.6cm x 3.0cm. It's small enough to fit multiple in the palm of your hand.

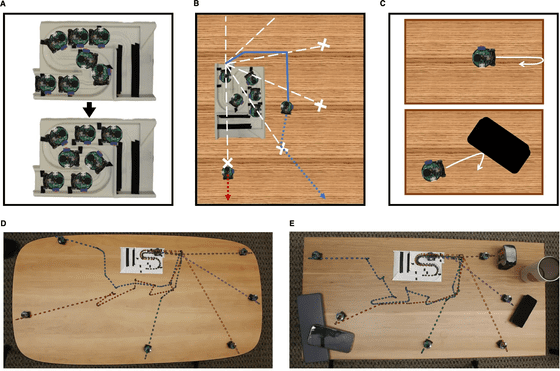

The robots are gathered at a base station, and from there they are deployed one by one like a Roomba.

You can see how the robot actually unfolds in the video below.

The robot emits a high-frequency sound similar to that produced by bats, and uses this frequency and sensors to avoid obstacles and disperse itself without falling off the table.

The robots placed in this way record audio from each area. If microphone A is located 1 meter away from you and microphone B is 2 meters away, your voice will first reach microphone A, and then reach microphone B a little later. On the other hand, if a person is near microphone B, their voice will be heard first. In this way, by investigating ``which microphone received the voice and at what timing,'' it seems possible to distinguish between the voices of people in a specific location or mute a specific area.

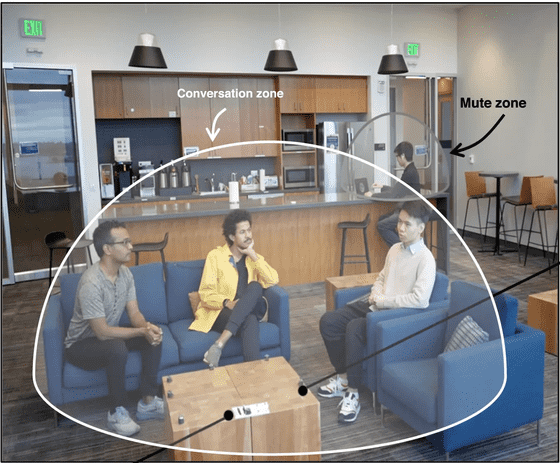

The research team recorded conversations in groups of three to five people in three spaces: an office, a living room, and a kitchen. In all of these environments, people reported being able to distinguish between people speaking next to each other. Also, thanks to deep learning algorithms, it is possible to distinguish between two neighboring voices even if they are similar.

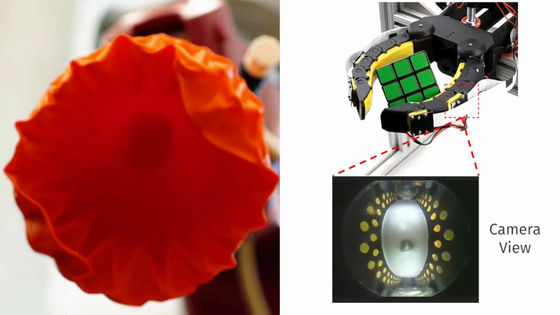

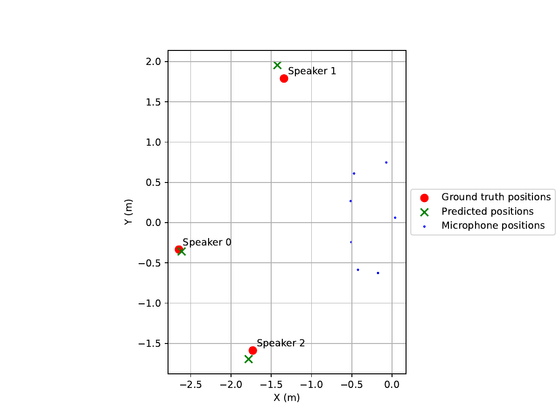

One scenario the researchers conducted was to have three speakers in separate locations speak at the same time. In this scenario, the speaker (red circle) remained at the position shown in the image below, and the robot dispersed to the dot positions. The cross mark is the speaker's position predicted by the robot that heard the sound.

The audio recorded in this state is below. After hearing this, I have no idea who is saying what.

Below are three excerpts from the above recording for each speaker.

speaker 1

speaker 2

speaker 3

You can also see how to cut out only the audio of a specific area from a conversation in the video below.

The average time to process audio from multiple microphones was 1.82 seconds for 3 seconds of audio. This is fast enough for one-way communications such as live streaming, but it feels a little long for real-time communications such as video calls.

Researchers say that as the technology advances, a system of multiple microphones could be introduced to distinguish between people talking on smart speakers. If this happens, for example, only the person sitting on the sofa in the 'active zone' will be able to control the TV by voice.

This time, the robots were dispersed on a single table, but the researchers said, ``Eventually, we plan to create microbots that can move around the room, not just on the table.'' , discovering the possibility of distinguishing between sounds in a room in the future.

Related Posts:

in Hardware, Posted by log1p_kr