Google DeepMind unveils 'RoboCat', a self-improving AI that can learn to operate robot arms in a wide range of situations

Google DeepMind, Google's AI development team, has announced 'RoboCat', a self-improving AI agent that can learn to operate a variety of robot arms from as few as 100 demonstrations and then refine its abilities further using self-generated data.

RoboCat: A self-improving robotic agent

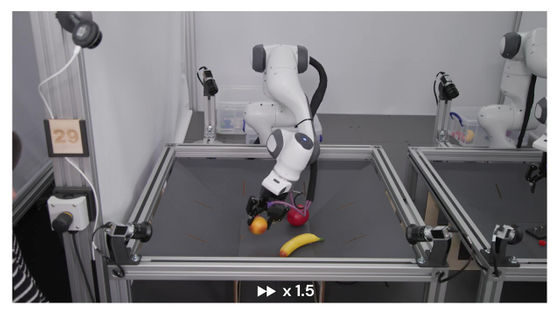

You can see how RoboCat moves the robot arm in the following video.

RoboCat: A self-improving robotic agent - YouTube

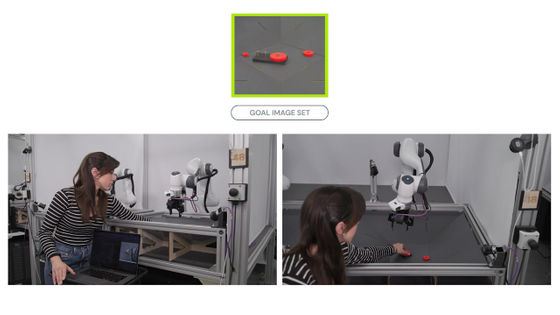

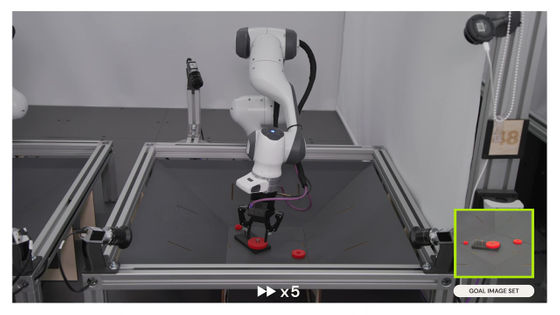

First, a human shows RoboCat an image of the desired end state and instructs it to reproduce it. The example shown here is a gear fitted onto a plate with three pegs.

Before the task starts, the gear is removed and the example is taken apart.

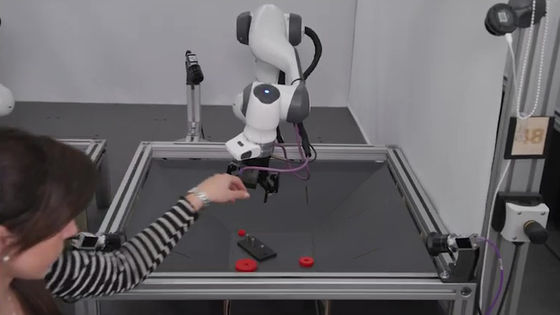

Following the goal image, the AI moved the robot arm and set the gear in place.

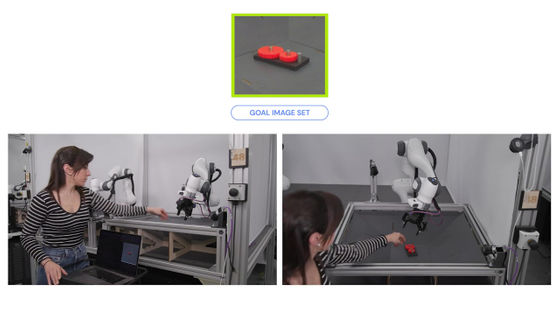

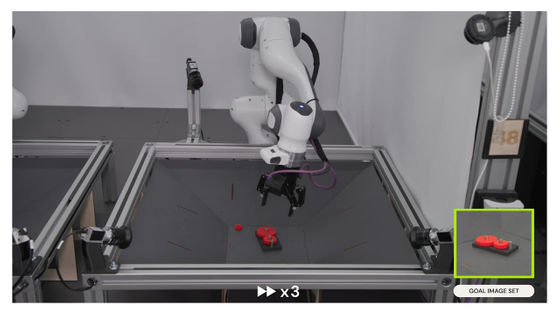

The same task can also be given a different goal. This time, there are two gears.

There were a few nerve-racking moments, such as when it dropped a gear it had lifted, but it completed the task cleanly.

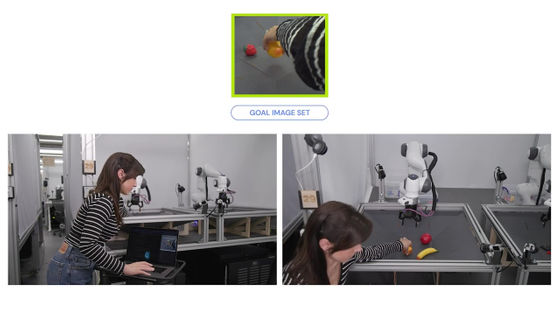

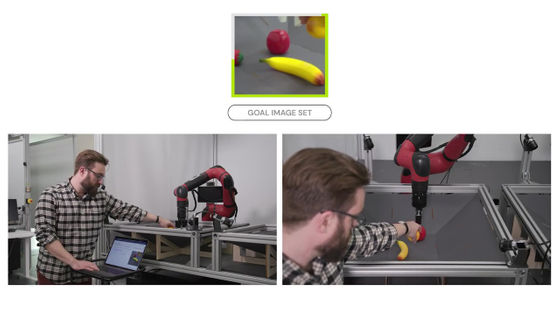

RoboCat also responds flexibly to goals outside its training data. In the next experiment, the goal was shown by a human moving a model fruit by hand.

RoboCat had never been trained on data that included human hands, but it understood that it simply needed to pick up the orange.

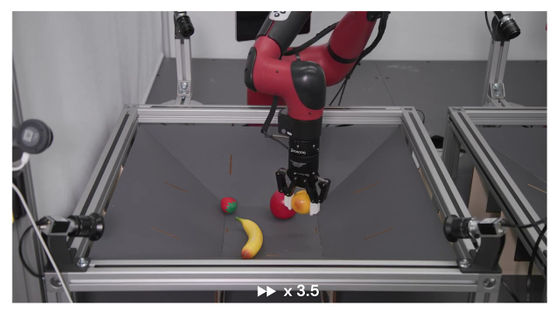

RoboCat also works with different models of robot arm. Up to this point, the arm was a white one named 'Panda', but the red arm tested next is a different model called 'Sawyer'. RoboCat has never been trained with this arm.

After moving the arm from side to side for a while, it grasped the orange properly and picked it up.

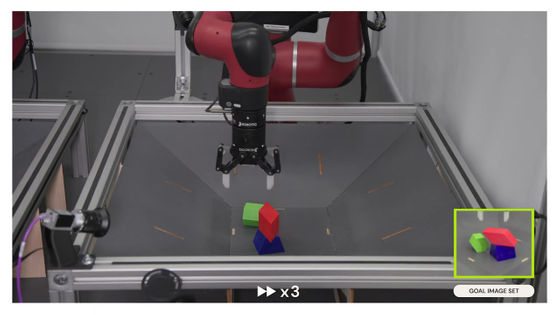

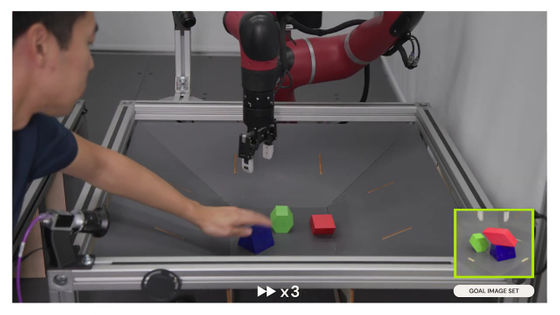

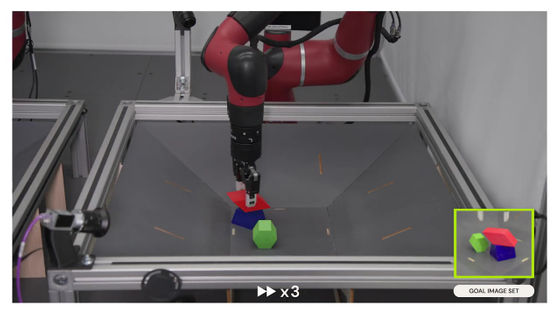

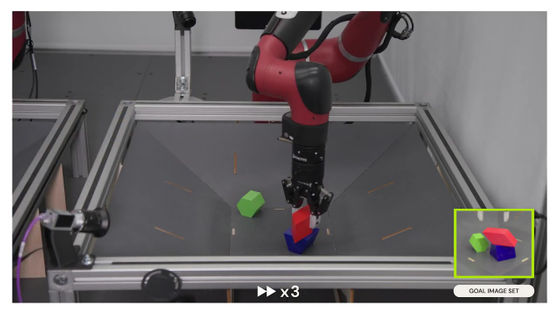

RoboCat also responds quickly to changing situations. In the final test, it was asked to put a red block on top of a blue block.

Just when the task seemed complete, a human reached in and knocked the blocks over.

RoboCat then quickly picked up the red block and restacked it.

Even when the blocks are knocked over repeatedly, it restacks them again and again.

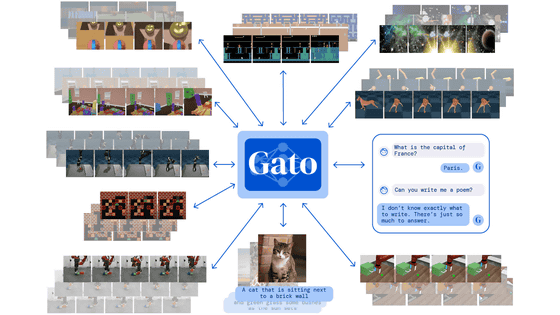

RoboCat is based on 'Gato' (Spanish for 'cat'), a multimodal model developed by Google DeepMind.

To develop RoboCat, Google DeepMind combined Gato's architecture, which can process language, images, and actions, with a huge training dataset made up of images and action sequences from robot arms of various models solving hundreds of different tasks.

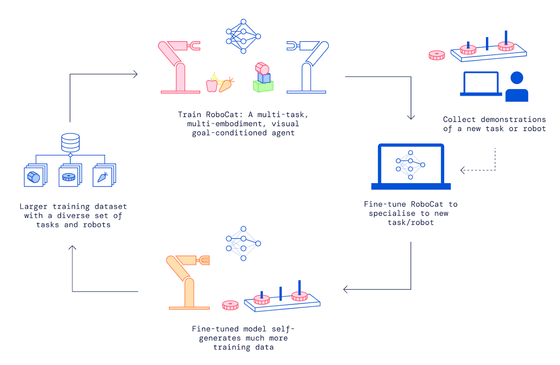

Google DeepMind then put RoboCat through a 'self-improvement training cycle' so that it could master tasks it had never seen before.

Mastering a new task is divided into five steps:

- Collect 100 to 1,000 demonstrations of a new task using a robot arm operated by a human.
- Fine-tune RoboCat on the new task and robot arm, creating a spin-off agent specialized for that task.
- Have the spin-off agent practice the new task and arm an average of 10,000 times, self-generating more training data.
- Incorporate the demonstration data and the self-generated data into RoboCat's existing training dataset.
- Train a new version of RoboCat on the new training dataset.
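The five steps above can be sketched roughly as a loop. The following is only an illustrative outline and not DeepMind's implementation: the `Agent` class and the `fine_tune`, `self_generate`, and `self_improvement_cycle` functions are hypothetical names, episodes are represented by placeholder strings, and no actual training takes place.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Agent:
    """Hypothetical stand-in for an agent; it only records its training data."""
    name: str
    dataset: List[str] = field(default_factory=list)


def fine_tune(generalist: Agent, demos: List[str], task: str) -> Agent:
    # Step 2: create a spin-off agent specialized for the new task.
    return Agent(f"{generalist.name}-spinoff-{task}", generalist.dataset + demos)


def self_generate(spinoff: Agent, task: str, n_episodes: int) -> List[str]:
    # Step 3: the spin-off practices the task and produces new episodes.
    # Placeholder strings stand in for recorded images and action sequences.
    return [f"{task}/self-{i}" for i in range(n_episodes)]


def self_improvement_cycle(generalist: Agent, task: str, demos: List[str],
                           n_practice: int = 10_000) -> Agent:
    # Step 1 (collecting human demonstrations) is assumed done: `demos`.
    spinoff = fine_tune(generalist, demos, task)
    generated = self_generate(spinoff, task, n_practice)
    # Step 4: merge demonstrations and self-generated data into the dataset.
    new_dataset = generalist.dataset + demos + generated
    # Step 5: "train" a new generalist version on the enlarged dataset.
    return Agent(f"{generalist.name}+{task}", new_dataset)


if __name__ == "__main__":
    base = Agent("robocat-v0", ["stack/demo-0"])
    gear_demos = [f"gear/demo-{i}" for i in range(100)]
    improved = self_improvement_cycle(base, "gear", gear_demos, n_practice=50)
    print(improved.name, len(improved.dataset))
```

The point of the sketch is the data flow: each pass through the cycle leaves the previous agent untouched and produces a new version whose dataset has grown by the demonstrations plus the self-generated episodes.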

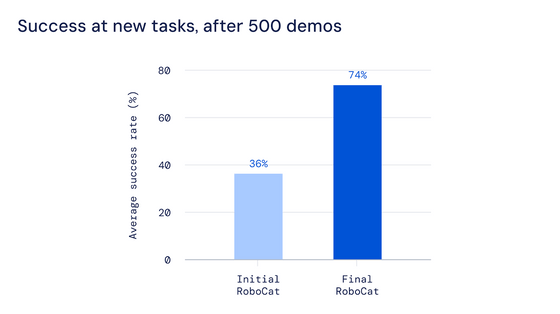

RoboCat achieves a virtuous cycle in which the more tasks it learns, the better it becomes at learning new ones. An early version of RoboCat showed a 36% success rate in testing after learning from 500 demonstrations of a single task. After training on a wider variety of tasks, however, RoboCat more than doubled its success rate on the same task.

Google DeepMind says, 'Just as humans acquire more diverse skills as they deepen their learning in a given field, RoboCat can improve its abilities as it gains experience. This ability to learn and rapidly self-improve will be a stepping stone to a useful new generation of general-purpose robotic agents that can be applied to different robots.'
