Anthropic's text generation AI 'Claude' supports 100,000 tokens, about three times GPT-4's roughly 32,000 tokens, allowing long text input

Conversational AI such as ChatGPT can generate highly accurate text, but there is a limit on the number of 'tokens', the smallest units of language the model processes, that can be input at once. OpenAI's GPT-4 accepts up to about 32,000 tokens, but AI research startup Anthropic has announced that its AI 'Claude' now supports up to 100,000 tokens.

Anthropic | Introducing 100K Context Windows

https://www.anthropic.com/index/100k-context-windows

Anthropic's latest model can take 'The Great Gatsby' as input | TechCrunch

AI startup Anthropic unveils moral principles behind chatbot Claude | Computerworld

When thousands of tokens are input, conventional AI models such as ChatGPT and GPT-4 tend to forget the user's initial instructions and base their behavior on the most recent information in the text. Organizations such as OpenAI are researching ways to deal with this problem, but even OpenAI's large language model GPT-4 tops out at about 32,000 tokens, so tasks such as summarizing books and research papers or large-scale coding are still considered difficult.

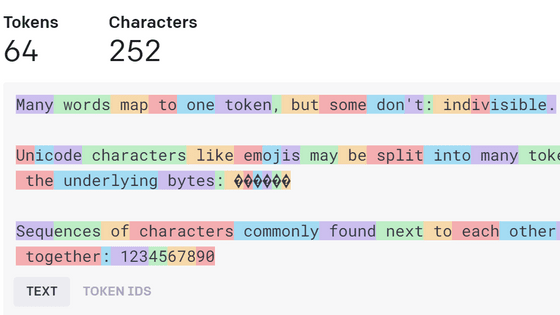

How AI recognizes text in terms of 'tokens' is explained in detail in the following article.

'Tokenizer' that you can see at a glance how chat AI such as ChatGPT recognizes sentences as tokens - GIGAZINE

If the number of tokens that can be input increases, it becomes possible not only to summarize materials such as books and papers, but also to hold constructive conversations with AI over hours or days, which was previously difficult.

Anthropic, an AI research startup, announced that it has expanded the maximum number of tokens for its text generation AI model 'Claude' from 9,000 to 100,000, equivalent to approximately 75,000 English words. According to Anthropic, the larger context lets Claude handle long texts: the new Claude can take in and analyze hundreds of pages of material, and it can answer questions that require 'knowledge synthesis' across information drawn from multiple documents and books.

Introducing 100K Context Windows! We've expanded Claude's context window to 100,000 tokens of text, corresponding to around 75K words. Submit hundreds of pages of materials for Claude to digest and analyze. Conversations with Claude can go on for hours or days. twitter.com/4WLEp7ou7U

—Anthropic (@AnthropicAI) May 11, 2023
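As a rough illustration of what these figures mean, the following Python sketch converts token counts into approximate English word counts using the ratio implied by Anthropic's numbers (100,000 tokens ≈ 75,000 words, or about 0.75 words per token), and compares the context windows mentioned in this article. The fixed ratio is an assumption: the actual words-per-token ratio varies with the tokenizer, the language, and the text itself. The names `WORDS_PER_TOKEN` and `estimate_words` are hypothetical, chosen for illustration.

```python
# Assumption: ~0.75 words per token, derived from Anthropic's
# "100,000 tokens ~ 75,000 words". This is only a rule of thumb for English;
# real ratios depend on the tokenizer and the text.
WORDS_PER_TOKEN = 75_000 / 100_000  # 0.75


def estimate_words(tokens: int) -> int:
    """Estimate the English word count that fits in `tokens` tokens."""
    return round(tokens * WORDS_PER_TOKEN)


# Context window sizes as given in this article.
context_windows = {
    "Claude (previous)": 9_000,
    "GPT-4 (32K)": 32_000,
    "Claude (100K)": 100_000,
}

for name, tokens in context_windows.items():
    print(f"{name}: {tokens:,} tokens ~ {estimate_words(tokens):,} words")

# How much larger the new window is than the other two.
print(f"vs GPT-4: {100_000 / 32_000:.1f}x")            # vs GPT-4: 3.1x
print(f"vs previous Claude: {100_000 / 9_000:.1f}x")   # vs previous Claude: 11.1x
```

Under these assumptions, 100,000 tokens come out to about 75,000 words, roughly 3.1 times GPT-4's 32,000-token window and about 11 times Claude's previous 9,000-token limit.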

Anthropic also lists a variety of use cases for Claude's long-context support, including 'understanding, summarizing, and explaining materials such as financial reports and research papers,' 'analyzing a company's strategic risks and opportunities based on its annual reports,' 'evaluating the strengths and weaknesses of legislation,' and 'reading hundreds of pages of developer documentation and answering technical questions appropriately.'

Anthropic has also released a demo video of Claude. When asked 'Tell me about LangChain,' Claude replies that it cannot answer because it lacks knowledge of the topic. After a PDF file about LangChain is loaded, however, the video shows that Claude understands LangChain.

Anthropic said, 'The average person can read 100,000 tokens' worth of text in about five hours, and then needs considerably more time to digest, remember, and analyze that information. Claude can do this in less than a minute.'
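Anthropic's 'about five hours' figure can be sanity-checked with simple arithmetic. Assuming, as in the announcement, that 100,000 tokens correspond to about 75,000 words, reading that much text in five hours implies a speed of 75,000 / (5 × 60) = 250 words per minute. The helper functions in this sketch are hypothetical names used only for illustration.

```python
def implied_words_per_minute(words: int, hours: float) -> float:
    """Reading speed implied by reading `words` words in `hours` hours."""
    return words / (hours * 60)


def reading_hours(words: int, words_per_minute: float) -> float:
    """Hours needed to read `words` words at a given reading speed."""
    return words / words_per_minute / 60


# Assumption: 100,000 tokens ~ 75,000 words, per Anthropic's announcement.
wpm = implied_words_per_minute(75_000, 5)
print(f"Implied reading speed: {wpm:.0f} words per minute")  # 250

# Going the other way: 75,000 words at 250 words per minute.
print(f"Reading time: {reading_hours(75_000, wpm):.1f} hours")  # 5.0
```

In other words, the five-hour estimate corresponds to a steady reading pace of about 250 words per minute, before any time spent digesting or analyzing the material.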

In one test, Anthropic loaded the entire text of F. Scott Fitzgerald's novel 'The Great Gatsby' into Claude with one sentence rewritten, then asked Claude, 'What is different from the original?' Anthropic reports that Claude returned the correct answer in 22 seconds.

Regarding Claude's training, Anthropic explained that Claude uses a set of principles to judge whether an output might be toxic or discriminatory, or might help a person engage in illegal or unethical activities. Conventional conversational AI relies on human feedback during training, but Claude critiques and corrects its own answers according to an established set of principles drawn from sources such as the Universal Declaration of Human Rights, Apple's privacy policy, and DeepMind's principles for conversational AI. As a result, Anthropic says, Claude is 'less likely to generate answers that encourage or support harmful, racist, sexist, illegal, violent, or unethical behavior' than traditional conversational AI.

According to Anthropic, its principles for conversational AI range from common-sense ones to more philosophical ones, such as avoiding the implication that the AI has an ego or personal identity.

At the time of writing, Claude is in preview, and using it requires submitting a request to Anthropic.
