Experts are concerned about the emergence of people who dismiss video evidence submitted at trial by claiming "this is a deepfake"

In recent years, the development of AI has made it possible to easily create

People are arguing in court that real images are deepfakes: NPR

https://www.npr.org/2023/05/08/1174132413/people-are-trying-to-claim-real-videos-are-deepfakes-the-courts-are-not-amused

In March 2018, Apple engineer Walter Huang died in a crash involving a Tesla vehicle. Huang's bereaved family sued Tesla, claiming that the car's Autopilot function had malfunctioned, while Tesla's lawyers argued that Huang had been playing a game on his smartphone before the collision and had ignored the vehicle's warnings.

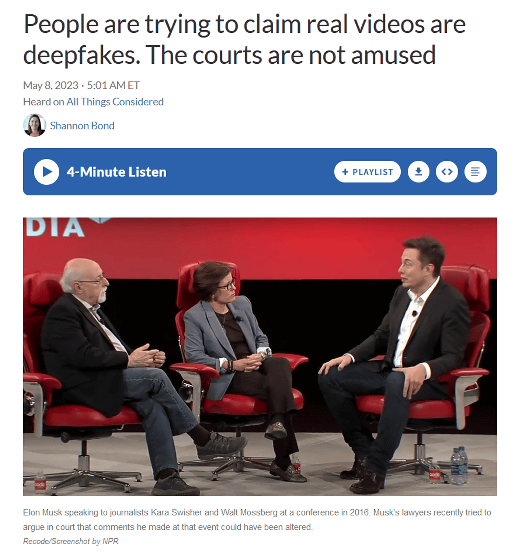

In this trial, the lawyers for Huang's family submitted as evidence a recording in which Tesla CEO Elon Musk says, "Currently, Model S and Model X can drive autonomously more safely than humans." Musk made the remark during an interview at a technology event called Code Conference in 2016, and a video containing it has been available on YouTube since June 2016. The statement can be heard at 1:19:25 in the video below.

Elon Musk | Full interview | Code Conference 2016-YouTube

However, Musk claimed through his defense team that he did not remember whether he had made the remark in question. The defense team questioned the credibility of the video evidence, saying, "Like many public figures, Musk is often the subject of deepfakes showing remarks and actions he never actually made."

Elon Musk's remarks "may have been made with deepfakes," Tesla's defense team claims - GIGAZINE

Santa Clara County Superior Court Judge Evette Pennypacker responded to the defense team's argument by saying, "The claim that Musk's fame makes him an easy target for deepfakes, and that his public remarks are therefore immune, is deeply troubling," and ordered Musk to testify under oath as to whether he had made the remark.

Judge Pennypacker said, "In other words, Mr. Musk and others like him could say whatever they want in public and then hide behind the possibility that the recorded statements are deepfakes to avoid liability for what they actually said and did. We do not want to set such a precedent by condoning Tesla's approach here."

As advances in AI make it possible to create fairly sophisticated deepfakes, it is becoming more likely that all kinds of evidence will be met with the objection "This is a deepfake." "We were concerned that in the age of deepfakes, anyone would be able to deny reality," commented Hany Farid, an expert on deepfakes and digital manipulation at the University of California, Berkeley.

Farid points out that dismissing court evidence as a deepfake is a classic example of the "liar's dividend." The liar's dividend is a concept put forward by law professors Robert Chesney and Danielle Citron in 2018: because it has become widely known that deepfakes make it easy to create fake videos and images, the lie that a genuine recording "is a deepfake" becomes easier to believe. "Simply put, a skeptical public begins to doubt the authenticity of real audio and video," Chesney and Citron wrote.

Musk is not the first person to claim that evidence of his own conduct presented at trial "might be a deepfake." Two of the people arrested in

In a 2020 article, Riana Pfefferkorn, a researcher at the Stanford Internet Observatory, pointed out that as of that time there had been no case in which a "this is a deepfake" objection to evidence had been accepted as valid. She argued, however, that courts would be forced to deal less with attempts to submit deepfake evidence than with parties claiming that genuine evidence is a deepfake.

Pfefferkorn hopes that lawyers' professional codes of conduct will help curb unreasonable claims in court. However, Rebecca Delfino, a professor at Loyola Law School, believes this is not enough to solve the problem that trial evidence can be challenged with the claim "this is a deepfake," and that stronger standards are needed.

And even if courts were able to address the issue, jurors influenced by such claims might still say, "Show me proof that this is not a deepfake." If a lawyer leads a jury to demand proof that every piece of evidence is not a deepfake, the other side will be forced to spend a huge amount of time and money gathering that proof. As a result, people who lack the resources to hire experts become less likely to receive a fair judgment in court.

In addition, there is concern that the effects of deepfakes will extend beyond the courtroom, and that people will begin to dismiss real-world events by saying "this is a deepfake." Farid said, "All of a sudden, police violence, human rights violations, and politicians' inappropriate remarks and illegal actions have no reality. This is really worrying because we lose our sense of how to think about the world."

in Note, Posted by log1h_ik