Do AIs built on large language models, such as the interactive AI "ChatGPT", lie to humans?

ChatGPT, an interactive AI built on OpenAI's large language model GPT, can converse in sentences as natural as a human's, but it has been pointed out that its answers often contain mistakes. Engineer Simon Wilson has explained whether ChatGPT can be said to lie.

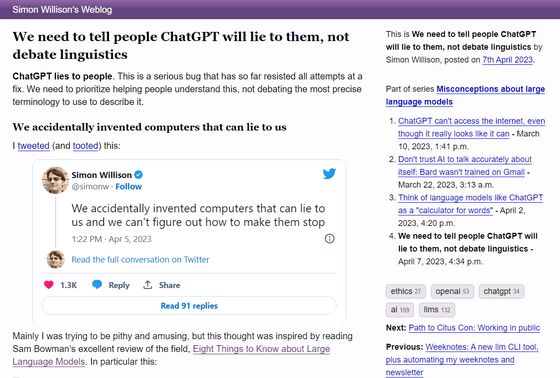

We need to tell people ChatGPT will lie to them, not debate linguistics

Mr. Wilson has said in various places that "large language models lie," but others have objected that "it is inappropriate to call it lying." Wilson responded, "I certainly agree that anthropomorphism is bad. These models are complex matrix arithmetic, not entities with intentions or opinions." Even so, he argues, there are cases in which these models say things that are not true.

From a New York University visiting scholar

In other words, based on Mr. Bowman's report, it can be said that "AI may decide to say things that are not true depending on the situation." Wilson said that phenomena such as sycophancy (answering subjective questions in a way that flatters the user's stated beliefs) and sandbagging (being more likely to endorse common misconceptions when the user appears less educated) show that the system is not working as intended, that this is highly problematic behavior, and that he has not found a reliable way to fix it.

On top of that, Mr. Wilson said, "You cannot trust ChatGPT to provide factual information. It is a system with no senses; it is not intelligent, and it has no opinions, no feelings, and no ego." In his view, the most direct harm caused by large language models is that they mislead their users.

"If tools like ChatGPT are unreliable and clearly deceive people, should we urge people not to use ChatGPT or anything like it at all, or campaign to ban them?" Mr. Wilson asked. The problems that ChatGPT now helps solve could perhaps have been solved without it, but there is no doubt that ChatGPT saves time and money.

"I'm not worried about AI taking people's jobs," Wilson said. "I feel it is ethical, and I want to do what I can to help everyone use it in a safe and responsible way."

"Tools such as ChatGPT are very powerful, but using them effectively is much harder than it first appears," Wilson said. "This technology has been invented, and I haven't found a way to stop it." He argued that it is therefore important to learn how to use AI like ChatGPT correctly and carefully.


in Software, Posted by log1i_yk