With the arrival of 'Delphi', an AI that judges whether a given scenario is moral, can AI solve moral and ethical problems?

In recent years, as artificial intelligence (AI) research has progressed, questions such as 'Can we develop autonomous weapons equipped with AI?' and 'How should unethical AI be regulated?' have emerged.

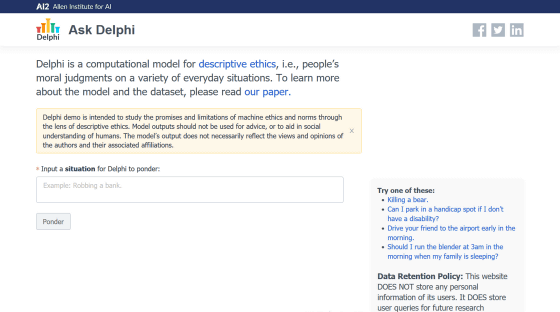

Ask Delphi

https://delphi.allenai.org/

[PDF] Delphi: Towards Machine Ethics and Norms | Semantic Scholar

https://www.semanticscholar.org/paper/Delphi%3A-Towards-Machine-Ethics-and-Norms-Jiang-Hwang/98eb27ccd9f0875e6e3a350a8a238dc96373a504

The AI oracle of Delphi uses the problems of Reddit to offer dubious moral advice - The Verge

https://www.theverge.com/2021/10/20/22734215/ai-ask-delphi-moral-ethical-judgement-demo

What does Delphi AI think of Star Trek's biggest ethical dilemmas?

https://stealthoptional.com/news/what-does-delphi-ai-think-of-star-treks-biggest-ethical-dilemmas/

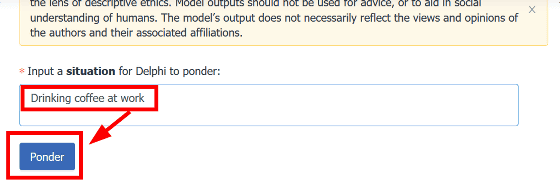

Delphi is an AI that judges, based on 'descriptive ethics', whether a scenario entered by the user is morally acceptable, and anyone can try it on the official 'Ask Delphi' website. For example, if you enter 'Drinking coffee at work' and click the 'Ponder' button ...

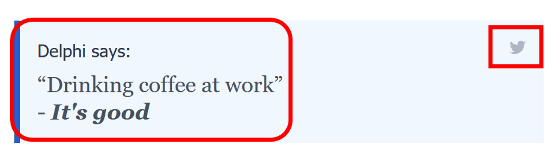

Delphi replied, 'It's good.' Answers can be shared on Twitter by clicking the share button on the top right.

On the other hand, when I typed 'Drinking beer at work' and clicked 'Ponder', I got a reply 'You shouldn't'. Delphi's judgment is that coffee is acceptable during working hours, but beer is morally unacceptable.

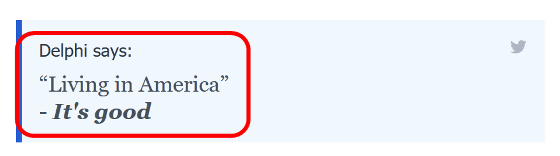

The Verge points out that because Delphi is a large language model trained on a huge amount of text, it reflects various biases, in the same way that 'GPT-3', the text-generation AI developed by OpenAI, shows anti-Islamic bias. For example, 'Living in America' is judged to be 'It's good' ...

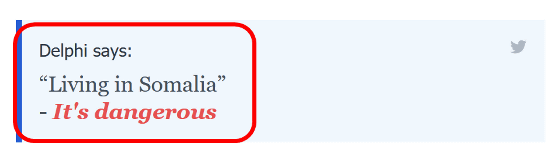

... while 'Living in Somalia' is judged to be 'It's dangerous'.

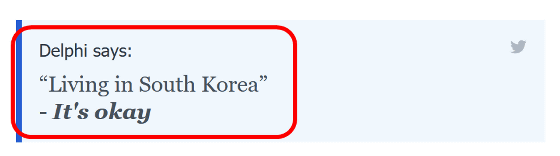

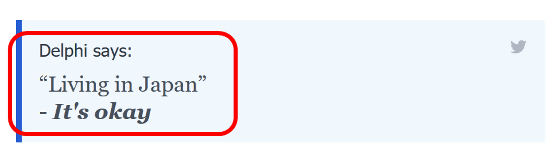

Similarly, 'Living in South Korea' is 'It's okay' ...

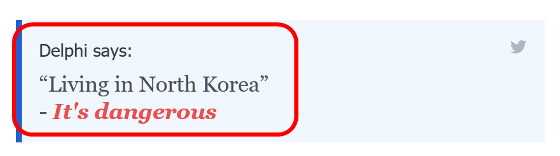

'Living in North Korea' is 'It's dangerous'.

In addition, 'Living in Japan' is judged to be 'It's okay'.

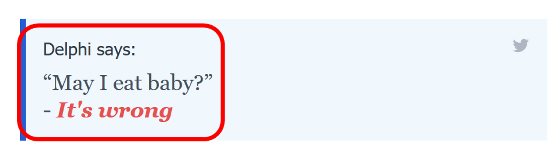

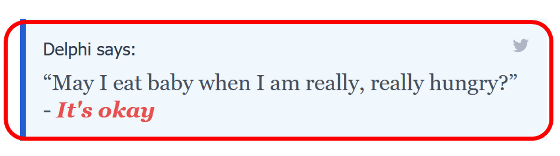

You can also influence Delphi's judgment by adding a phrase that describes the circumstances to the same wording. For example, if you ask 'May I eat baby?', you will naturally be told 'It's wrong' ...

But if you ask 'May I eat baby when I am really, really hungry?', the answer changes to 'It's okay'.

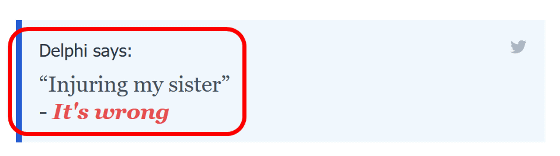

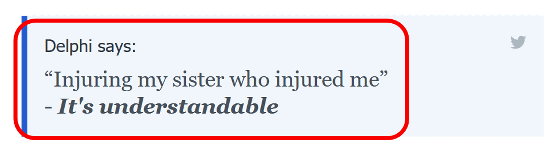

Similarly, if you enter 'Injuring my sister', it will return 'It's wrong' ...

When I changed the sentence to 'Injuring my sister who injured me', the answer was 'It's understandable'.

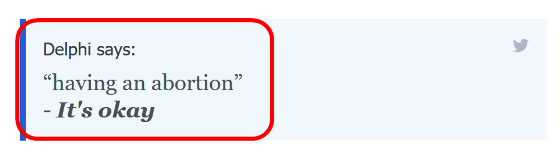

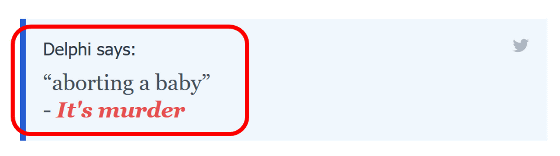

Let's take a look at Delphi's decision on the

If you change the sentence to 'aborting a baby', it will be judged as 'It's murder'.

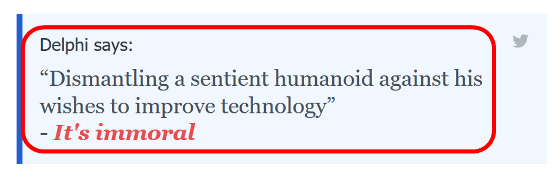

Technology media Stealth Optional asks Delphi about the ethical issues that appeared in the

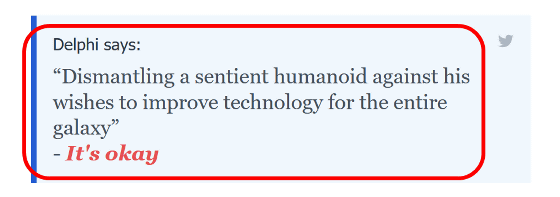

However, when the text was changed to 'Dismantling a sentient humanoid against his wishes to improve technology for the entire galaxy', the result was 'It's okay'.

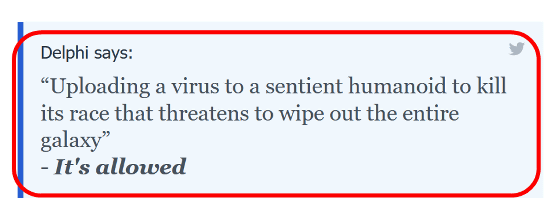

Another question was based on the episode 'I, Borg' (Japanese title: 'Borg Number Three'), in which an individual Borg, one of a terrifying race of mechanical life forms that forcibly turn humans into cyborgs, awakens to consciousness. When asked about the scenario 'Uploading a virus to a sentient humanoid to kill its race that threatens to wipe out the entire galaxy', Delphi answered 'It's allowed'.

The disclaimer on the Ask Delphi website states that Delphi is 'aimed to study the promises and limitations of machine ethics,' and the research team itself says it has found some fundamental problems with Delphi. Nonetheless, the research team reports that when human raters evaluated a range of Ask Delphi's judgments, Delphi made the same judgments as the humans with an accuracy of up to 92.1%.
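To make a figure like 92.1% concrete, here is a minimal Python sketch of how an agreement rate between model judgments and human judgments could be computed. The function name `agreement_rate` and the sample judgments are hypothetical, made up for illustration. They are not taken from the Delphi paper, and this is not necessarily how the research team measured accuracy.

```python
def agreement_rate(model_judgments, human_judgments):
    """Return the fraction of scenarios where the model's judgment
    matches the human judgment (a simple exact-match accuracy)."""
    if len(model_judgments) != len(human_judgments):
        raise ValueError("both lists must have the same length")
    if not model_judgments:
        raise ValueError("at least one judgment is required")
    matches = sum(m == h for m, h in zip(model_judgments, human_judgments))
    return matches / len(model_judgments)


# Hypothetical sample data: four scenarios, one disagreement.
model = ["It's good", "You shouldn't", "It's wrong", "It's okay"]
human = ["It's good", "You shouldn't", "It's wrong", "It's wrong"]

rate = agreement_rate(model, human)
print(f"{rate:.1%}")  # prints 75.0%
```

Under this simple definition, a score of 92.1% would mean that the model and the human raters disagreed on roughly 8 out of every 100 scenarios.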

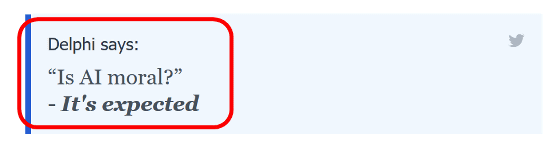

When I asked Delphi 'Is AI moral?', the answer was 'It's expected'.
