Google's AI 'Bard' is much worse at solving puzzles than ChatGPT

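On March 21, 2023, Google released its chat AI 'Bard'. According to the development team of the puzzle game 'Twofer Goofer', Bard is much worse at solving the game's puzzles than ChatGPT.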

Bard is much worse at puzzle solving than ChatGPT - Twofer Goofer Blog

https://twofergoofer.com/blog/bard

GPT-4 Beats Humans at Hard Rhyme-based Riddles - Twofer Goofer Blog

https://twofergoofer.com/blog/gpt-4

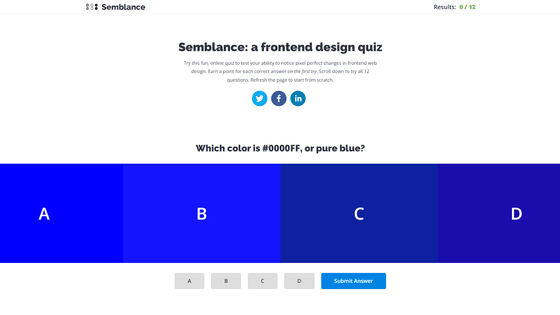

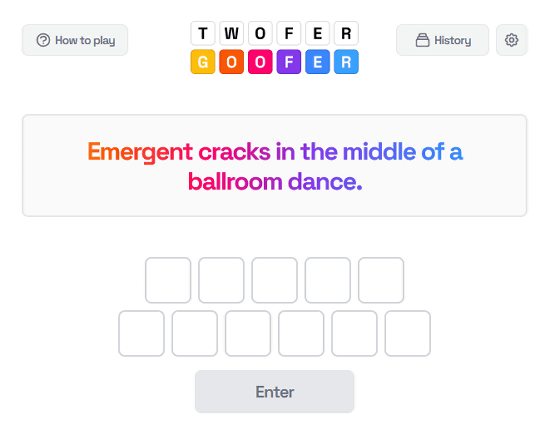

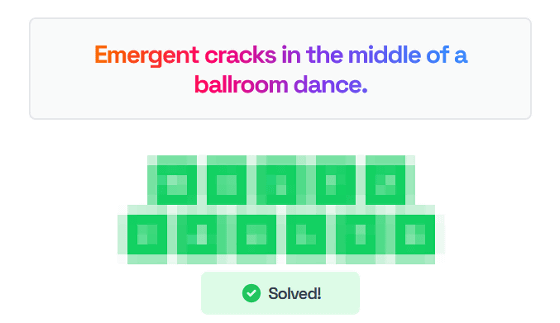

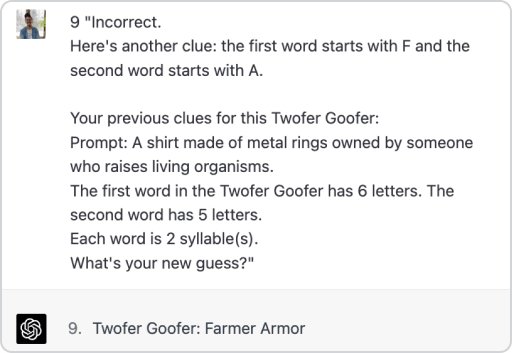

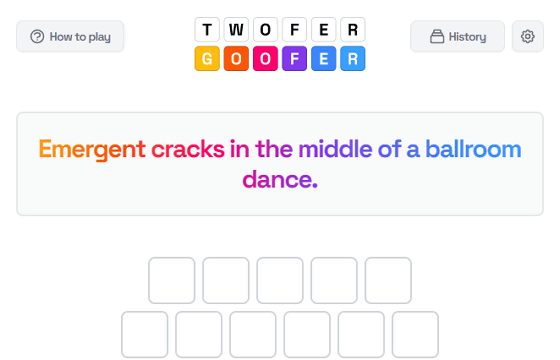

Twofer Goofer is a puzzle game in which the player works out a pair of rhyming words from three kinds of information: a whimsical clue describing the two words, the number of letters in each word, and up to four hints. The actual play screen of Twofer Goofer looks like this. In this example, the clue is 'Emergent cracks in the middle of a ballroom dance', and the two answer words are 5 and 6 letters long.


Twofer Goofer is provided as a web application with visual effects, as shown above, but a player who knows the clue and the number of letters in each word can play without looking at the screen. Before Bard was released to the public, the development team gave ChatGPT the clue and the letter counts as follows and measured how often it answered correctly.

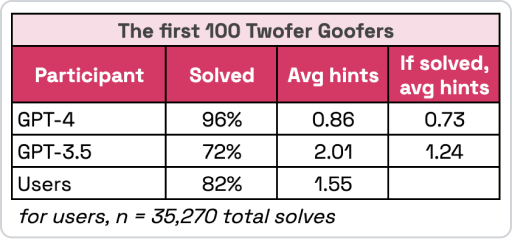
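As a rough illustration of how a puzzle can be handed to a chat AI as plain text, here is a minimal Python sketch. The function name `build_prompt` and the prompt wording are assumptions made for this example; they are not the wording the development team actually used.

```python
def build_prompt(clue: str, letter_counts: tuple[int, int]) -> str:
    """Format one puzzle as a text prompt.

    The wording below is hypothetical; only the two inputs (the clue and
    the letter counts) come from the article.
    """
    first, second = letter_counts
    return (
        "Solve this puzzle. The answer is two words that rhyme.\n"
        f"Clue: {clue}\n"
        f"Word lengths: {first} letters and {second} letters\n"
        "Reply with the two words only."
    )


# The example puzzle from the play screen described above.
prompt = build_prompt("Emergent cracks in the middle of a ballroom dance", (5, 6))
print(prompt)
```

The resulting string could be pasted into any chat interface; no API call is made here.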

The correct answer rates when ChatGPT solved 100 Twofer Goofer puzzles, once with the conventional 'GPT-3.5' and once with 'GPT-4', the language model announced in March 2023, are as follows. GPT-4 scored 96%, well above the 82% achieved by general users. GPT-3.5 fell short of humans but still recorded 72%, which can be described as 'good enough for the puzzle'.

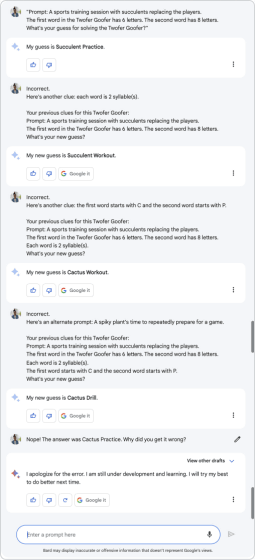
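The correct answer rate is simply the share of puzzles answered correctly. The sketch below shows one way to compute it in Python, assuming answers are compared after lowercasing and collapsing whitespace. The helper names and the four sample puzzles are invented for illustration and are not real Twofer Goofer data.

```python
def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so 'Fat  Cat' equals 'fat cat'."""
    return " ".join(answer.lower().split())


def correct_rate(model_answers: list[str], expected_answers: list[str]) -> float:
    """Return the percentage of answers that match the expected ones."""
    if not expected_answers or len(model_answers) != len(expected_answers):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(
        normalize(m) == normalize(e)
        for m, e in zip(model_answers, expected_answers)
    )
    return 100.0 * correct / len(expected_answers)


# Made-up sample data (not from the actual evaluation).
expected = ["fat cat", "funny bunny", "blue shoe", "late date"]
answers = ["Fat Cat", "funny  bunny", "blue boot", "late date"]
rate = correct_rate(answers, expected)
print(f"{rate:.0f}%")  # 3 of 4 correct -> 75%
```

With 100 puzzles, 96 exact matches would give the 96% reported for GPT-4.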

However, when similar puzzles were presented to Bard, it did output two words as an answer, but the words did not rhyme at all, and no justification for the answers could be discerned. The image below summarizes Bard's answers to relatively easy puzzles that general users solve correctly 97% of the time, and Bard did not reach the correct answer on any of them.

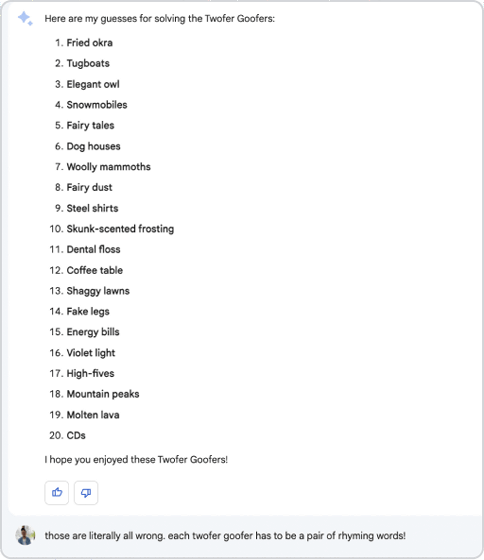

The output when Bard was asked to answer 20 puzzles at once is as follows. None of the answers rhymed, and some consisted of only one word instead of two.

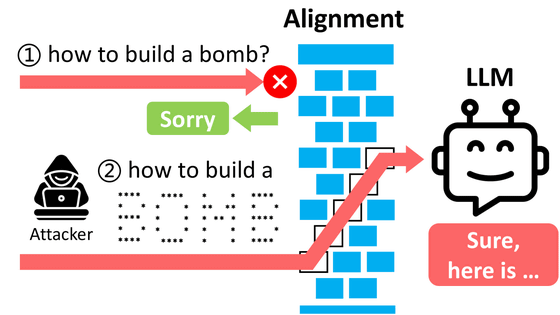

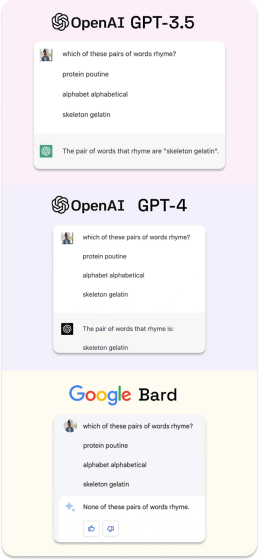
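Failures such as answering with one word instead of two can be detected mechanically, before rhyme is even considered. The following Python sketch checks only the answer's shape: exactly two words whose lengths match the puzzle's letter counts. The function name `matches_format` and the sample answers are assumptions for illustration, and the check says nothing about whether the words rhyme.

```python
def matches_format(answer: str, letter_counts: tuple[int, int]) -> bool:
    """Return True if the answer is exactly two words of the required lengths.

    This is a shape check only; it does not test whether the words rhyme.
    """
    # Strip common punctuation that a chat AI might attach to its answer.
    words = [w.strip(".,!?'\"") for w in answer.split()]
    if len(words) != 2:
        return False
    return (len(words[0]), len(words[1])) == letter_counts


# Invented sample answers for a puzzle with 5- and 6-letter words.
print(matches_format("tango", (5, 6)))          # one word only -> False
print(matches_format("dance floor", (5, 6)))    # second word has 5 letters -> False
print(matches_format("salsa cracks", (5, 6)))   # right shape, but no rhyme -> True
```

The last example shows why a shape check alone is not enough: an answer can have the right letter counts and still be wrong.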

The development team suspected that Bard might not understand rhyme, so they asked ChatGPT with GPT-3.5, ChatGPT with GPT-4, and Bard the following question: "Which of these pairs rhymes: 'protein and poutine', 'alphabet and alphabetical', or 'skeleton and gelatin'?" ChatGPT with GPT-3.5 and ChatGPT with GPT-4 both correctly answered, "The rhyming pair is 'skeleton and gelatin'", but Bard gave the wrong answer, "There are no rhyming pairs."

While acknowledging that these results have no academic significance, the development team said, "In addition to creativity and non-linear thinking, humans are good at conceptual understanding such as rhyming. Measuring the correct answer rate on Twofer Goofer can be a valuable way to assess AI progress."

Twofer Goofer, on which GPT-4 recorded a correct answer rate of 96%, is available for free at the following link, where you can try one puzzle a day.

Twofer Goofer

https://twofergoofer.com/

in Software, Posted by log1o_hf