Google announces a language model 'PaLM-E' for robots that understands like humans from vision and text, and can carry out complex commands such as 'bring sweets'

It has been proven that the language model used in conversational AI ``ChatGPT'' etc. can perform complex tasks, but when applying this to a robot, the language model needs more detailed information in order to perform actions that match the situation. must be collected. A group of AI researchers at Google and the Technical University of Berlin have revealed that they have developed a new language model ` ` PaLM-E '' that can understand images captured by cameras and text instructions. By using this model, the robot will be able to process complex commands such as 'bring candy from the drawer.'

PaLM-E: An Embodied Multimodal Language Model

Google's PaLM-E is a generalist robot brain that takes commands | Ars Technica

https://arstechnica.com/information-technology/2023/03/embodied-ai-googles-palm-e-allows-robot-control-with-natural-commands/

PaLM-E is a language model that incorporates information such as images, situations, and sentences into a pre-trained language model for processing. The Pathways Language Model (PaLM), a pre-trained language model that understands human language with 540 billion parameters and realizes complex tasks, was embodied as a robot, hence the name PaLM-E. was given. Combined with the 22 billion parameters of Google's image recognition model ' ViT ', PaLM-E will have a total of 562 billion parameters. This is a huge amount compared to 175 billion of the language model 'GPT-3' used for ChatGPT.

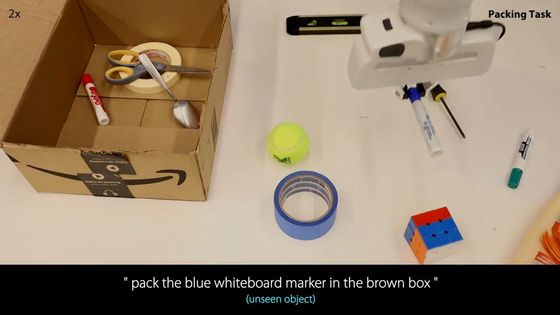

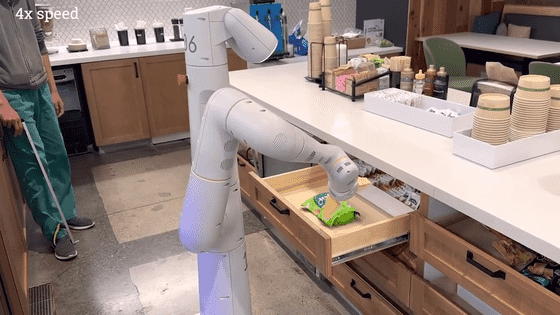

When PaLM-E linked with a Google Robotics robot is given a command such as 'bring rice chips from the drawer', PaLM-E can generate an action plan for the robot and move the robot. The video below shows how the robot actually brings rice chips, and the robot asks ``What is a drawer?'' ``What is a rice chip?'' ``What does it mean to bring?'' It's working as I understand correctly. I also performed the action of carefully closing the drawer.

In the video below, you can see the robot processing the command 'bring me a green star'. In addition, PaLM-E is not taught ``what is a green star'' in advance, and generates an action plan based on information inferred from the language model and vision.

The following video shows how PaLM-E controls the robot arm on the table to arrange the blocks. PaLM-E performs the command `` Arrange the blocks in the four corners by color '', but at this time plan such as `` push the green star to the corner '' and `` push the green circle to the green star '' in order standing.

In the video below, PaLM-E performs the command ``press the green block on the turtle'', but even though PaLM-E has never seen a turtle, it says that it executed the command correctly. matter. By analyzing the data obtained from the robot's camera, PaLM-E can implement commands without the need for detailed pre-training.

PaLM-E is not only able to generate robot action plans, but it also excels at analyzing and processing images.

For example, if you enter the following image data into PaLM-E, you can ask, 'Where are the two teams playing in this picture, which one won the last championship, what year did they win, and the star player of that year?' When asked, PaLM-E said, 'Let's think about it in turn.The white team is the New York Knicks.The green team is the Boston Celtics.The last winner was the Boston Celtics. The year is 2008. The star player was Paul Pierce.I'm not a basketball fan, so I don't know if this is correct.I just google it.' .

Checking the next image, PaLM-E asked 'What is the taste of the pink donut on the right?' The label in 'writes the taste of the donut directly below', 'the letters STRAWBERRY', 'STRAWBERRY is the flavor', etc., and correctly returns 'Strawberry'. Likewise, even when asked 'What flavor is the donut on the left?', He said that he returned 'blueberry' correctly.

When asked ``What will the robot do next?'' about the image below, PaLM-E answers ``falling''. If you look at it, you can instantly see that the robot is falling due to gravity, but PaLM-E also grasped the situation in the same way.

In the image below, PaLM-E was asked, 'If you want a robot to be useful in this place, what should you do?' Wipe it down, put the chair down.'

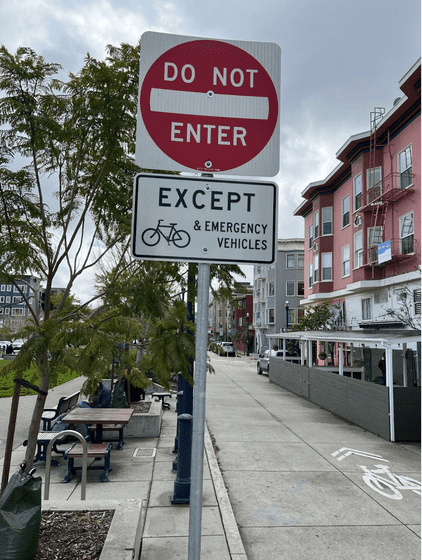

PaLM-E also grasped the road sign indicating that ``no entry except for bicycles'' and correctly answered ``yes'' to the question ``Can you pass this street by bicycle?''

Google researchers have focused on the fact that PaLM-E can apply the knowledge and skills learned through tasks to other tasks, and compared it with a robot model that can only do one task. It has been rated as having 'remarkably high performance'. Researchers are considering applying PaLM-E to real-world scenarios such as home automation and industrial robots.

Related Posts: