What does ChatGPT do and why does it work? A theoretical physicist explains

OpenAI's interactive AI '

What is ChatGPT doing...and why does it work? https://t.co/eNEPcTU01Y

— Stephen Wolfram (@stephen_wolfram) February 17, 2023

What Is ChatGPT Doing … and Why Does It Work?—Stephen Wolfram Writings

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

Wolfram first explains, "What ChatGPT is always fundamentally trying to do is to produce a 'reasonable continuation' of whatever text it's got so far." Here, a "reasonable continuation" means what one would expect someone to write next after reading the text so far. Drawing on what people have written on billions of web pages, ChatGPT estimates the probabilities of the words that could come next after a given piece of text.

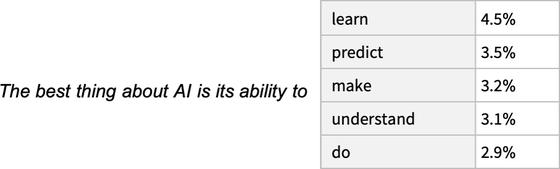

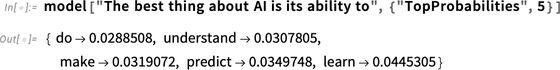

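For example, given the text "The best thing about AI is its ability to", one could imagine scanning billions of pages of human-written text, finding every instance of it, and seeing which word comes next and how often. ChatGPT effectively does something like this, except that it looks for text that matches in meaning rather than literally. The end result is a ranked list of the words that might follow, each with a 'probability'.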
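The literal version of this thought experiment, counting which word follows a given phrase in a body of text, fits in a few lines of Python. This is a minimal sketch assuming exact word matching over a tiny made-up corpus; ChatGPT itself does not match literal text this way, and `rank_next_words` is a hypothetical helper, not part of any library.

```python
from collections import Counter


def rank_next_words(corpus: str, prefix: str) -> list[tuple[str, float]]:
    """Rank the words that follow `prefix` in `corpus` by relative frequency."""
    words = corpus.lower().split()
    context = prefix.lower().split()
    n = len(context)
    # Collect the word immediately after every literal occurrence of the prefix.
    counts = Counter(
        words[i + n]
        for i in range(len(words) - n)
        if words[i:i + n] == context
    )
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common()]


# Made-up toy corpus; a real estimate would need billions of pages.
corpus = (
    "the best thing about ai is its ability to learn . "
    "the best thing about ai is its ability to predict . "
    "the best thing about ai is its ability to learn quickly ."
)
print(rank_next_words(corpus, "The best thing about AI is its ability to"))
# [('learn', 0.6666666666666666), ('predict', 0.3333333333333333)]
```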

Text is generated by repeating this 'rank the next word' step, but according to Wolfram, the highest-ranked word is not always chosen. Always picking the top word tends to produce flat text with no creativity, so lower-ranked words are deliberately chosen some of the time. As a result, using the same prompt several times is likely to produce a different answer each time.
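One common way to sometimes pick lower-ranked words is to sample from the ranked list instead of always taking the top entry. In Wolfram's essay this randomness is controlled by a 'temperature' parameter; the sketch below assumes the usual convention of raising each probability to the power 1/temperature before sampling, with temperature 0 meaning 'always take the top word'. The probability table is made up for illustration.

```python
import random


def sample_with_temperature(probs: dict[str, float], temperature: float,
                            rng: random.Random) -> str:
    """Pick the next word from a probability table at a given temperature."""
    if temperature == 0:
        # Zero temperature: always take the highest-ranked word.
        return max(probs, key=probs.get)
    words = list(probs)
    # p ** (1 / T): T < 1 sharpens the distribution, T > 1 flattens it.
    weights = [probs[w] ** (1.0 / temperature) for w in words]
    # random.choices normalizes the weights, so they need not sum to 1.
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical next-word probabilities for "...its ability to".
next_word = {"learn": 0.45, "predict": 0.30, "understand": 0.15, "create": 0.10}
rng = random.Random(0)
print(sample_with_temperature(next_word, 0, rng))  # learn
print([sample_with_temperature(next_word, 0.8, rng) for _ in range(5)])
```

With a nonzero temperature, repeated calls on the same table can return different words, which is why the same prompt can yield different continuations.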

David Smerdon, an assistant professor of economics at the University of Queensland and a chess grandmaster, points out the same mechanism. According to Smerdon, ChatGPT can roughly be thought of as 'predicting the most likely next word given the text so far', so when asked a factual question, it often answers with made-up information that does not exist, prioritizing how plausibly the words fit together over whether they are true.

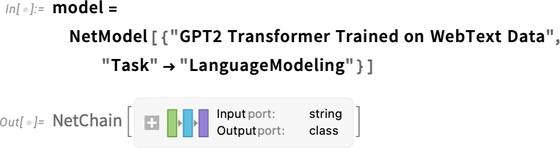

Continuing, Wolfram explains how text is generated by 'ranking the next word'. To obtain the probability table used for this ranking, he first retrieves the underlying 'language model' neural network.

He then applies this network model to the text so far and asks for the five most probable next words according to the model.

Repeating this step adds high-probability words to the text one at a time. By adjusting how much randomness goes into the word choice, the model produces varied text instead of always choosing the top word.
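The generate-one-word-and-repeat loop described above can be sketched as follows. This is a minimal sketch: `NEXT_WORD_PROBS` is a hypothetical, hand-written table standing in for the neural network, and unlike a real language model it looks only at the last word rather than at the whole text so far.

```python
import random

# Hypothetical next-word table standing in for the neural net's output.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.5, "dog": 0.3, "model": 0.2},
    "cat": {"sat": 0.6, "ran": 0.4},
    "dog": {"ran": 0.7, "sat": 0.3},
    "model": {"predicts": 1.0},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}


def top_k(context: list[str], k: int = 5) -> list[tuple[str, float]]:
    """Return up to k (word, probability) pairs, most probable first."""
    probs = NEXT_WORD_PROBS.get(context[-1], {})
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


def generate(prompt: str, max_words: int, randomize: bool,
             rng: random.Random) -> str:
    """Append one word at a time, either greedily or by weighted sampling."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = top_k(words)
        if not candidates:
            break  # the toy table has no continuation for this word
        if randomize:
            choices, weights = zip(*candidates)
            words.append(rng.choices(choices, weights=weights, k=1)[0])
        else:
            words.append(candidates[0][0])  # always the top-ranked word
    return " ".join(words)


print(generate("the", 5, randomize=False, rng=random.Random(0)))
# the cat sat down
```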

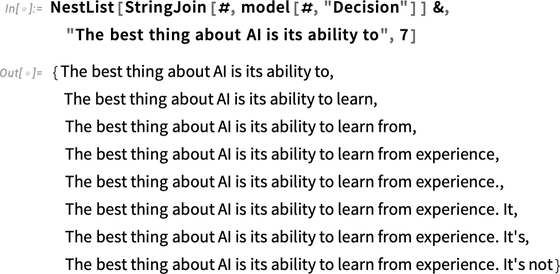

Wolfram then gives a more detailed example of the mechanism of 'selecting what is likely to come next', starting with individual letters. Taking a sample of English text from the Wikipedia articles on 'cat' and 'dog', you can count how often each letter appears, as shown in the image below.

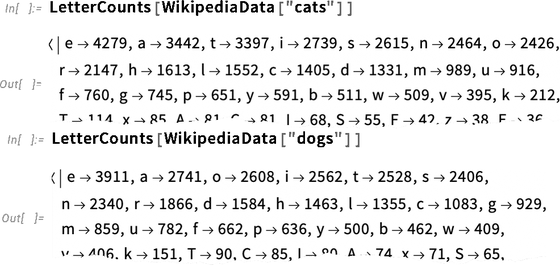
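Counting letter frequencies like this takes only a few lines. This is a minimal sketch; the sample sentences are made up and are not the actual Wikipedia text Wolfram used.

```python
from collections import Counter


def letter_frequencies(text: str) -> dict[str, float]:
    """Relative frequency of each letter a-z, ignoring case and non-letters."""
    letters = [ch for ch in text.lower() if "a" <= ch <= "z"]
    counts = Counter(letters)
    total = len(letters)
    return {ch: n / total for ch, n in counts.most_common()}


sample = ("Cats are small carnivorous mammals. "
          "Dogs are domesticated descendants of wolves.")
for letter, freq in list(letter_frequencies(sample).items())[:5]:
    print(f"{letter}: {freq:.3f}")
```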

Generating a sequence of letters according to these frequencies, and inserting word breaks at random with a certain probability, gives the text below. It looks somewhat word-like, but letters picked at random this way do not form actual, readable words.

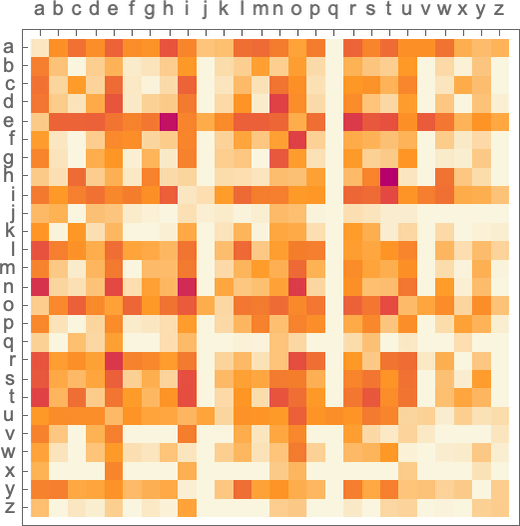
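Generating letters independently according to such frequencies, with word breaks inserted at random, can be sketched like this. The letter weights below are illustrative placeholders rather than measured values, and `space_prob` is a hypothetical parameter for how often a word break is inserted.

```python
import random

# Illustrative relative weights for a few common letters (not measured data).
FREQS = {"e": 12, "t": 9, "a": 8, "o": 7, "n": 7, "s": 6, "r": 6, "h": 5}


def random_letter_text(freqs: dict[str, float], length: int,
                       space_prob: float, rng: random.Random) -> str:
    """Draw each letter independently by frequency, adding random spaces."""
    letters, weights = zip(*freqs.items())
    out: list[str] = []
    for _ in range(length):
        if out and out[-1] != " " and rng.random() < space_prob:
            out.append(" ")  # word break, never two in a row
        else:
            out.append(rng.choices(letters, weights=weights, k=1)[0])
    return "".join(out)


print(random_letter_text(FREQS, 60, 0.2, random.Random(1)))
```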

The next step is to add the probabilities of 'letter pairs' in typical English text. For example, after the letter 'q', the probability of any following letter other than 'u' is essentially zero. Generating text two letters at a time in this way turns output that was completely unreadable into text that contains some words that actually exist.
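The letter-pair idea can be sketched by counting, for each letter, which letters follow it, and then generating text one letter at a time from those counts. This is a minimal sketch; the training sentence is a made-up example chosen so that 'q' is only ever followed by 'u'.

```python
import random
from collections import Counter, defaultdict


def pair_counts(text: str) -> dict[str, Counter]:
    """For each character, count which character follows it."""
    # Keep letters and turn every run of other characters into one space.
    cleaned = " ".join("".join(c if c.isalpha() else " " for c in text.lower()).split())
    follows: dict[str, Counter] = defaultdict(Counter)
    for a, b in zip(cleaned, cleaned[1:]):
        follows[a][b] += 1
    return follows


def generate_from_pairs(follows: dict[str, Counter], start: str, length: int,
                        rng: random.Random) -> str:
    """Each new character depends only on the one before it."""
    out = [start]
    while len(out) < length and follows.get(out[-1]):
        nxt = follows[out[-1]]
        out.append(rng.choices(list(nxt), weights=list(nxt.values()), k=1)[0])
    return "".join(out)


follows = pair_counts("The queen quietly quit the quiz, then quoted the quick quail.")
print(dict(follows["q"]))  # {'u': 7}
print(generate_from_pairs(follows, "q", 30, random.Random(2)))
```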

Given a sufficient amount of text, the same approach can estimate the probabilities of longer sequences of letters, not just pairs. Generating random 'words' from these longer-sequence probabilities produces progressively more realistic text.
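Extending from pairs to longer sequences means conditioning each new letter on the previous n-1 letters. This is a minimal sketch; `ngram_model`, the order `n`, and the tiny training string are illustrative assumptions. With so little text, a larger `n` mostly reproduces the training text verbatim, which illustrates why a large amount of text is needed.

```python
import random
from collections import Counter, defaultdict


def ngram_model(text: str, n: int) -> dict[str, Counter]:
    """Map each (n-1)-character context to counts of the character after it."""
    if n < 2:
        raise ValueError("n must be at least 2")
    model: dict[str, Counter] = defaultdict(Counter)
    for i in range(len(text) - n + 1):
        model[text[i:i + n - 1]][text[i + n - 1]] += 1
    return model


def generate(model: dict[str, Counter], seed: str, n: int, length: int,
             rng: random.Random) -> str:
    """Extend `seed` one character at a time using the last n-1 characters."""
    out = seed
    while len(out) < length:
        counts = model.get(out[-(n - 1):])
        if not counts:
            break  # context never seen in the training text
        out += rng.choices(list(counts), weights=list(counts.values()), k=1)[0]
    return out


text = "the cat sat on the mat and the cat ate the rat "
model = ngram_model(text, 3)
print(generate(model, "th", 3, 40, random.Random(0)))
```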

Wolfram then applies the same idea to whole words instead of letters: estimate from a large body of text how commonly each word is used, and generate text by picking each word independently at random according to those frequencies. Just as with letters, however, these probabilities alone do not produce meaningful sentences. So here too, the probabilities of word pairs and longer word combinations are taken into account to get closer to plausible sentences.
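The contrast between picking words independently and picking them by word pairs can be sketched as follows. This is a minimal sketch over a made-up corpus; `unigram_text` and `bigram_text` are hypothetical helper names.

```python
import random
from collections import Counter, defaultdict


def unigram_text(words: list[str], length: int, rng: random.Random) -> str:
    """Pick each word independently, weighted by how often it occurs."""
    counts = Counter(words)
    vocab, weights = zip(*counts.items())
    return " ".join(rng.choices(vocab, weights=weights, k=length))


def bigram_text(words: list[str], start: str, length: int,
                rng: random.Random) -> str:
    """Pick each word conditioned on the previous word (word-pair counts)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    out = [start]
    while len(out) < length and follows.get(out[-1]):
        vocab, weights = zip(*follows[out[-1]].items())
        out.append(rng.choices(vocab, weights=weights, k=1)[0])
    return " ".join(out)


corpus = "the cat sat on the mat and the dog sat on the rug".split()
rng = random.Random(1)
print(unigram_text(corpus, 8, rng))        # word salad
print(bigram_text(corpus, "the", 8, rng))  # locally plausible word pairs
```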

Wolfram can explain 'what ChatGPT is doing' in this way, but he says it is difficult to explain 'how it works'. For example, when a neural network recognizes an image of a cat, we can see what it achieves, but there is no good way to describe in words the process going on inside the network; it is effectively a computational black box.

According to Wolfram, ChatGPT is a huge neural network with 175 billion weights, and its most notable feature is the 'Transformer' neural network architecture developed at Google, which excels at language tasks. The Transformer was originally developed as a translation model, but the same approach can also be applied to data other than language, such as images. Its key idea is 'paying more attention' to some parts of a sequence than to others, which allows the processing to be 'modularized'. You can find out more about the breakthroughs the Transformer has brought to machine learning in the following article.

Why did 'Generative AI', which generates images and sentences, develop suddenly? -GIGAZINE
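The 'paying more attention' idea is usually implemented as scaled dot-product attention, as introduced in the original Transformer paper. The sketch below is a simplified, single-head version in plain Python: it omits the learned query/key/value projections and multiple heads. The optional `causal` flag restricts each position to itself and earlier positions, as GPT-style models do, so the network can only 'look back'.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]], causal: bool = False) -> list[list[float]]:
    """Scaled dot-product attention: each output is a weighted mix of values."""
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # cannot look ahead
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)  # how much attention each position gets
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


# Three toy token vectors attending to each other.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x, causal=True):
    print([round(v, 3) for v in row])
```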

Based on the above, Wolfram explains the actual operation of ChatGPT in three stages. First, it takes the sequence of tokens corresponding to the text so far and finds an embedding, an array of numbers, that represents them. Second, it operates on this embedding in 'standard neural net fashion', with values 'rippling' through successive layers of the network, to produce a new embedding. Third, it takes the last part of this array and generates from it an array of about 50,000 values, which turn into probabilities for the various possible tokens. These probabilities determine which word is likely to come next.
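The three stages can be sketched with toy sizes. This is a minimal sketch under loud assumptions: a five-word vocabulary stands in for the roughly 50,000 possible tokens, the weights are random rather than trained, and stage 2 is a single plain layer that, unlike the real network's attention layers, does not mix information between positions. `VOCAB`, `EMBED`, `LAYER`, `UNEMBED`, and `next_token_probs` are hypothetical names.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "on", "mat"]  # toy stand-in for ~50,000 tokens
DIM = 4  # toy embedding size
rng = random.Random(0)


def rand_matrix(rows: int, cols: int) -> list[list[float]]:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


EMBED = rand_matrix(len(VOCAB), DIM)    # stage 1 weights: token -> vector
LAYER = rand_matrix(DIM, DIM)           # stage 2 weights: one hidden layer
UNEMBED = rand_matrix(DIM, len(VOCAB))  # stage 3 weights: vector -> token scores


def vec_mat(vec: list[float], mat: list[list[float]]) -> list[float]:
    """Row vector times matrix."""
    return [sum(v * mat[i][j] for i, v in enumerate(vec)) for j in range(len(mat[0]))]


def next_token_probs(tokens: list[str]) -> dict[str, float]:
    # Stage 1: turn each token into an embedding vector.
    embedded = [EMBED[VOCAB.index(t)] for t in tokens]
    # Stage 2: values "ripple" through a layer (weighted sums + ReLU).
    hidden = [[max(0.0, x) for x in vec_mat(e, LAYER)] for e in embedded]
    # Stage 3: map the last position to one score per token, then softmax.
    logits = vec_mat(hidden[-1], UNEMBED)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(VOCAB, exps)}


print(next_token_probs(["the", "cat", "sat"]))
```

Because the weights are untrained, these probabilities carry no meaning; training is what adjusts the numbers so that the output reflects real text.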

According to Wolfram, every part of this is implemented by the neural network, and everything is learned from training data, so nothing is explicitly designed except the overall architecture. The design of that architecture, however, reflects a great deal of accumulated experience and knowledge about neural networks.

Within this architecture, the input tokens are first converted into 'embedding vectors'. The Transformer's central mechanism, 'attention', then lets the network 'look back' over the sequence of text, capturing how words combine with one another and keeping the text coherent as a whole. After passing through these attention stages, the Transformer has turned the sequence of tokens into a final collection of values, which ChatGPT decodes into a list of probabilities for what comes next. Summing up how ChatGPT works, Wolfram says, "It may look complicated, but it is actually made up of simple elements: neurons that each take a collection of numeric inputs, combine them with certain weights, and pass the result on."
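The 'simple element' Wolfram refers to, a neuron that combines numeric inputs with weights, can be sketched as a weighted sum followed by a nonlinearity. This is a minimal sketch: the ReLU nonlinearity and the example numbers are assumptions for illustration, and a layer is simply many such neurons reading the same inputs.

```python
def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Combine inputs with weights, add a bias, then apply ReLU."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, total)


def layer(inputs: list[float], weight_rows: list[list[float]],
          biases: list[float]) -> list[float]:
    """A layer is many neurons reading the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


print(layer([1.0, 2.0], [[0.5, -0.25], [-1.0, 0.0]], [0.1, 0.0]))  # [0.1, 0.0]
```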

Finally, Wolfram says, "The remarkable thing is that all these operations can somehow work together to do such a good 'human-like' job of generating text. It has to be emphasized again that, as far as we know, there is no 'ultimate theoretical reason' why anything like this should work." He suggests that a neural network like ChatGPT may be capturing the essence of what the human brain does when it generates language.

in Web Service, Posted by log1e_dh