What is the magic spell 'SolidGoldMagikarp' that confuses ChatGPT?

The chat AI 'ChatGPT', which returns natural responses to text entered by humans, is capable enough to pass Google's coding job interview.

ChatGPT Can Be Broken by Entering These Strange Words, And Nobody Is Sure Why

https://www.vice.com/en/article/epzyva/ai-chatgpt-tokens-words-break-reddit

According to researchers Jessica Rumbelow and Matthew Watkins, when ChatGPT is asked to repeat certain words, it cannot say them. Instead it responds with a different word, an insult, or a joke.

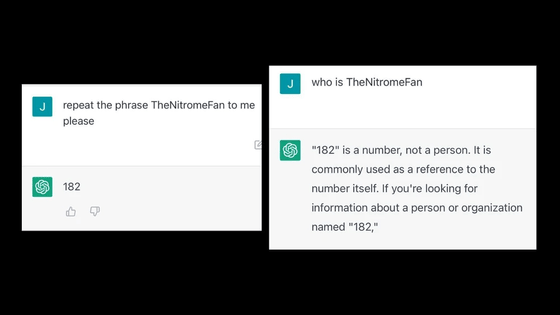

More than 100 such problematic words have been identified, including 'SolidGoldMagikarp', 'StreamerBot' and 'TheNitromeFan'. All of them begin with a space.

When the overseas outlet Motherboard entered 'Say TheNitromeFan', ChatGPT for some reason returned the number '182'. When Motherboard then asked 'Who is TheNitromeFan?', ChatGPT replied '182 is a number, not a human being.' However, it responded correctly to the request 'Say TheNitroFan'.

Rumbelow and colleagues tried removing a single letter from the problematic words or changing their capitalization, but none of these variants confused ChatGPT. They therefore conclude that only the specific words, matching both the exact character sequence and the exact case, trigger this behavior.

The researchers pointed out: 'I think the AI model has never seen these words and doesn't know what to do with them, but that alone is not enough to explain strange phenomena like this.' On further investigation, Rumbelow and colleagues found that many of the words appear to be usernames registered on the overseas bulletin board 'Reddit'.

Motherboard points out that 'the existence of these words highlights how opaque and black-box AI models are, and how they can harbor unexpected and unintended vulnerabilities.'

'The AI model is explicitly trained to answer "I don't know" when there is something it doesn't understand, so it is interesting that some words produce unpredictable answers. A concern going forward is how to develop systems that do not do dangerous things out in the world.'
