Which GPU runs the image generation AI 'Stable Diffusion' fastest?


Stable Diffusion Benchmarked: Which GPU Runs AI Fastest (Updated) | Tom's Hardware

https://www.tomshardware.com/news/stable-diffusion-gpu-benchmarks

Building a complex AI requires servers packed with training hardware, but if you only want to run an already-trained AI, a general-purpose GPU like those installed in consumer PCs is enough. Stable Diffusion was designed primarily for NVIDIA GPUs, but Jarred Walton of Tom's Hardware points out that 'this does not mean Stable Diffusion cannot run on GPUs other than NVIDIA's.'

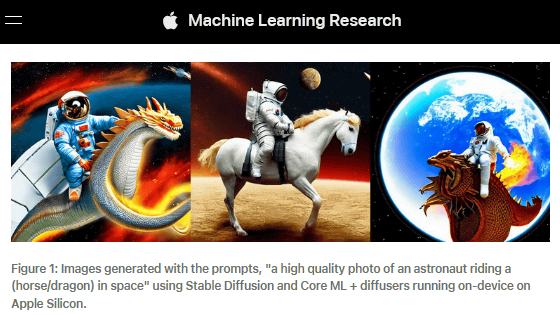

Methods for running Stable Diffusion on Intel's 'Intel Arc' GPUs and on Macs with M1/M2 chips have already been released.

Succeeded in running image generation AI 'Stable Diffusion' with Intel Arc - GIGAZINE

How to run image generation AI 'Stable Diffusion' locally on Mac with M1 - GIGAZINE

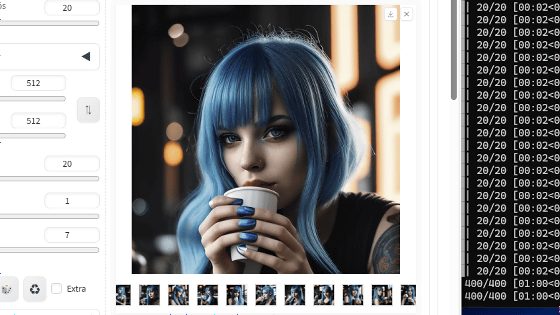

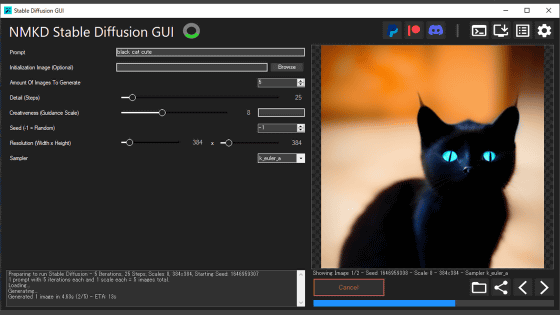

To measure how fast Stable Diffusion runs on various GPUs, Walton used the 'AUTOMATIC1111 Stable Diffusion web UI' for NVIDIA GPUs, 'Nod.ai's Shark' version for AMD GPUs, and 'Stable Diffusion OpenVINO' for Intel's Arc GPUs.

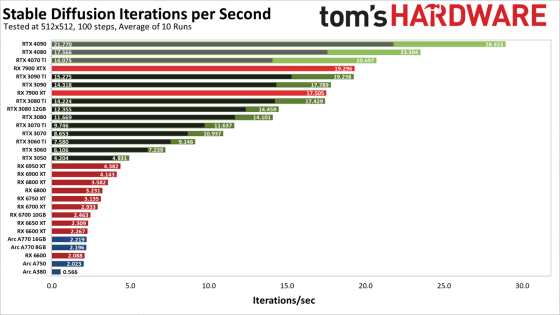

The graph below shows the average number of iterations per second for each GPU when generating ten 512 x 512 pixel images with the same prompt, number of steps, and CFG (classifier-free guidance) scale. NVIDIA's RTX 40/30 series is shown in green, AMD's RX 7000/6000 series in red, and Intel's Arc series in blue. The graph also includes results from a version that uses 'xFormers', a library that speeds up processing. Overall, NVIDIA GPUs outperform AMD and Intel GPUs, and using xFormers improves performance by several tens of percent.
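For readers unfamiliar with the metric, here is a minimal Python sketch of how an 'average iterations per second' figure can be computed. It assumes each image is produced by a fixed number of denoising steps and that per-image it/s values are then averaged; Walton's exact harness is not described in the article, so `iterations_per_second`, `average_its`, `speedup_percent`, and the 50-step default are hypothetical. The dummy step only stands in for one real sampler iteration.

```python
import time
from statistics import mean
from typing import Callable


def iterations_per_second(step: Callable[[], None], steps: int) -> float:
    """Run `steps` iterations of `step` (one image) and return iterations/second."""
    start = time.perf_counter()
    for _ in range(steps):
        step()
    elapsed = time.perf_counter() - start
    # Guard against a zero reading from an extremely fast dummy step.
    return steps / max(elapsed, 1e-9)


def average_its(step: Callable[[], None], steps: int = 50, images: int = 10) -> float:
    """Average per-image it/s over `images` generations.

    10 images matches the article; the 50-step default is an assumption.
    """
    return mean(iterations_per_second(step, steps) for _ in range(images))


def speedup_percent(baseline_its: float, optimized_its: float) -> float:
    """Percentage gain of an optimized run (e.g. with xFormers) over a baseline."""
    return (optimized_its / baseline_its - 1.0) * 100.0


if __name__ == "__main__":
    # Placeholder workload standing in for one denoising step; not a real model.
    def dummy_step() -> None:
        sum(range(10_000))

    print(f"{average_its(dummy_step, steps=5, images=3):.1f} it/s (dummy workload)")
    # Placeholder numbers, not measurements: 10 it/s -> 13 it/s is a 30% gain.
    print(f"{speedup_percent(10.0, 13.0):.0f}% faster")
```

Because it/s is a rate per denoising step, it stays comparable across runs that use different step counts, which is why benchmarks like this one report it instead of seconds per image.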

In this test, NVIDIA's RTX 40 series was the fastest, followed by AMD's RX 7900 series and NVIDIA's RTX 30 series, while Intel Arc was considerably slower. However, Walton noted that Stable Diffusion still has plenty of room to run faster on AMD and Intel GPUs with the right optimizations, and said it is only a matter of time before versions that perform better on those GPUs appear.

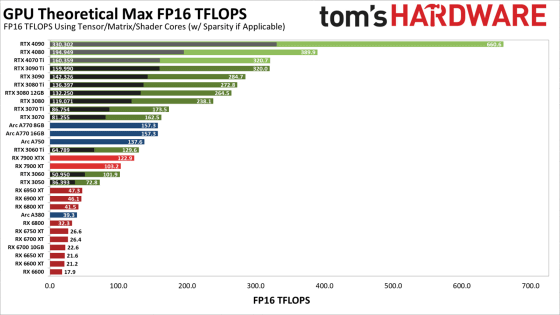

The graph below also shows the theoretical maximum performance of each GPU.

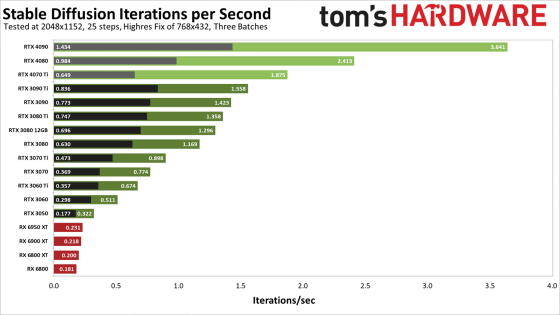

Walton also published results for generating high-resolution 2048 x 1152 pixel images. As the graph below shows, the speedup from xFormers is even more pronounced at high resolution. AMD GPUs were not included in this test because it could not be run on them.
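The article does not explain why the xFormers gain grows with resolution. One plausible reason, offered as an assumption rather than as Walton's finding: Stable Diffusion's U-Net applies self-attention over latent positions, the attention score matrix grows with the square of the number of positions, and xFormers' memory-efficient attention avoids materializing that full matrix. The sketch below assumes the 8x VAE downsampling of Stable Diffusion v1/v2 and fp16 scores, and counts only one attention head for one image at the highest-resolution level; the helper names are illustrative.

```python
LATENT_DOWNSAMPLE = 8   # assumption: SD v1/v2 VAE shrinks each image side by 8x
BYTES_PER_SCORE = 2     # assumption: fp16 attention scores


def latent_tokens(width: int, height: int) -> int:
    """Number of latent positions seen by the highest-resolution attention layer."""
    return (width // LATENT_DOWNSAMPLE) * (height // LATENT_DOWNSAMPLE)


def attention_matrix_bytes(width: int, height: int) -> int:
    """Size of one full N x N attention score matrix (one head, one image)."""
    n = latent_tokens(width, height)
    return n * n * BYTES_PER_SCORE


small = attention_matrix_bytes(512, 512)
large = attention_matrix_bytes(2048, 1152)

print(latent_tokens(512, 512), latent_tokens(2048, 1152))      # 4096 36864
print(large / small)                                           # 81.0
print(f"{small / 2**20:.0f} MiB vs {large / 2**30:.2f} GiB")   # 32 MiB vs 2.53 GiB
```

Under these assumptions, 9x more latent positions means 81x more attention scores, so an attention implementation that never stores the full matrix would plausibly save far more at 2048 x 1152 than at 512 x 512.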

Separately, MosaicML, a cloud company that provides a platform for machine learning training, reported that Stable Diffusion can be trained from scratch on its own system in 13 days for less than $160,000 (about 20.7 million yen). That cost is 2.5 times lower than the figure reported by Stability AI, the developer of Stable Diffusion.
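The MosaicML figures lend themselves to some quick arithmetic. This sketch derives the Stability AI cost implied by applying the 2.5x ratio to the $160,000 figure (an approximation, since MosaicML's number is an upper bound), the exchange rate implied by the yen conversion, and a per-GPU-hour budget. The GPU count is not given in this article, so the 128 used below is a purely hypothetical input, and `cost_per_gpu_hour` is an illustrative helper.

```python
MOSAICML_COST_USD = 160_000      # upper bound reported by MosaicML
TRAINING_DAYS = 13               # training time reported by MosaicML
RATIO_VS_STABILITY = 2.5         # "2.5 times lower" than Stability AI's figure
MOSAICML_COST_YEN = 20_700_000   # the article's yen conversion


def cost_per_gpu_hour(total_usd: float, days: float, num_gpus: int) -> float:
    """Budget per GPU-hour if `num_gpus` GPUs run for `days` days (hypothetical helper)."""
    return total_usd / (days * 24 * num_gpus)


implied_stability_cost = MOSAICML_COST_USD * RATIO_VS_STABILITY
implied_yen_per_usd = MOSAICML_COST_YEN / MOSAICML_COST_USD

print(f"Implied Stability AI cost: ~${implied_stability_cost:,.0f}")  # ~$400,000
print(f"Implied exchange rate: {implied_yen_per_usd:.1f} yen/USD")    # 129.4
# 128 GPUs is a hypothetical input, not a figure from the article.
per_hour = cost_per_gpu_hour(MOSAICML_COST_USD, TRAINING_DAYS, 128)
print(f"Per GPU-hour at 128 GPUs: ${per_hour:.2f}")
```

Swapping in a different GPU count shows how the same total budget translates into very different hourly rates, which is why cloud training costs are usually quoted alongside the hardware used.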

Training Stable Diffusion from Scratch Costs <$160k

https://www.mosaicml.com/blog/training-stable-diffusion-from-scratch-costs-160k

GitHub - mosaicml/diffusion-benchmark

https://github.com/mosaicml/diffusion-benchmark


in Web Service, Hardware, Posted by log1h_ik